1. Start a Proof of Concept

Before you make any decisions, ensure our solutions fit your needs.

- Guided Proof of Concept: Experience up to 30 days free on OpenMetal infrastructure with no obligations.

- Engineering Support: You aren’t testing in a vacuum. Receive technical guidance from our engineering team to ensure your PoC is architected the way you need from day one.

- Transparent Cost Analysis: We work with you to map your current usage to our fixed-cost model, giving you a clear savings forecast.

2. A Frictionless Transition

We bridge the gap between your current environment and your new cloud.

- Dedicated Migration Planning: We provide architectural guidance to map out your move, minimizing downtime and simplifying your transition.

- Flexible Ramp Periods: Don’t pay double while you move. We structure agreements with flexible ramp periods that scale up as your migration progresses, so your costs match your actual usage.

- Commercial Certainty: Secure your budget with agreements that lock in pricing for up to 5 years, shielding you from market volatility.

3. An Extension of Your Team

We don’t disappear once your deployment is live.

- Direct Engineer Access: Communicate directly with our engineers via your own private Slack channel.

- Collaborative Roadmap: We work hand-in-hand to plan your future hardware needs on your preferred timeline, and will even introduce additional tools and solutions customized to your requirements.

- Risk-Free Guarantee: We stand behind our services with a 30-day money-back guarantee once you become a customer.

Each Hosted Private Cloud Starts With Two Powerful Building Blocks

OpenMetal Private Cloud Core

All hosted private clouds start with our powerful Cloud Core – three hyper-converged commercial-grade servers, spun up as a service for maximum speed and convenience.

Your cloud is powered by OpenStack and Ceph: robust, mature, API-first, and free of licensing costs. You get everything from compute (VMs) and block storage to powerful software-defined networking and easy-to-deploy Kubernetes. Plus, Day 2 monitoring is built in to help you move fast with confidence.

Your hosted private cloud can deploy in as little as 45 seconds. This innovation saves time and money, and unlocks new capabilities like 100% self-service ordering.

Dramatically Different Service

Our entire team, from executives to engineers, is always available to you. We will align with your goals, teach you our tech, and support the powerful open source systems behind your hosted private cloud. In fact, this is part of our corporate mission!

We are ready to prove ourselves to you. In addition to our Support Pledge and SLAs, we offer:

- Free Self-Serve Trials and Proof of Concept Clouds. Try before you buy and ensure our platform is a fit for your needs.

- Transparent Pricing. We don’t hide our prices and surprise you with some enterprise-level shock. Know exactly what you’ll pay every month, and be alerted to any unusual spikes. Plus, enjoy fixed bandwidth costs and generous included egress!

Why Choose OpenMetal?

OPEN SOURCE FREEDOM: Ditch vendor lock-in and gain the power of OpenStack + Ceph for ultimate control, transparency, and customization.

EXTRAORDINARY PERFORMANCE: No generic, low-grade hardware here! Optimize your private cloud infrastructure for peak performance and run even the most demanding workloads.

SUPPORT THAT FEELS LIKE AN EXTENSION OF YOUR TEAM: Get responsive, highly-technical support directly inside your personal Slack channel. We speak your language.

COST-EFFECTIVE CLOUD, DONE RIGHT: The big public cloud services become bloated and overpriced as you scale. Pay only for the resources you need and customize everything the way you want it.

EFFORTLESS MIGRATION: Experience a smooth and efficient onboarding process with our expert team by your side. We offer migration assistance and ramp periods to make your move easy.

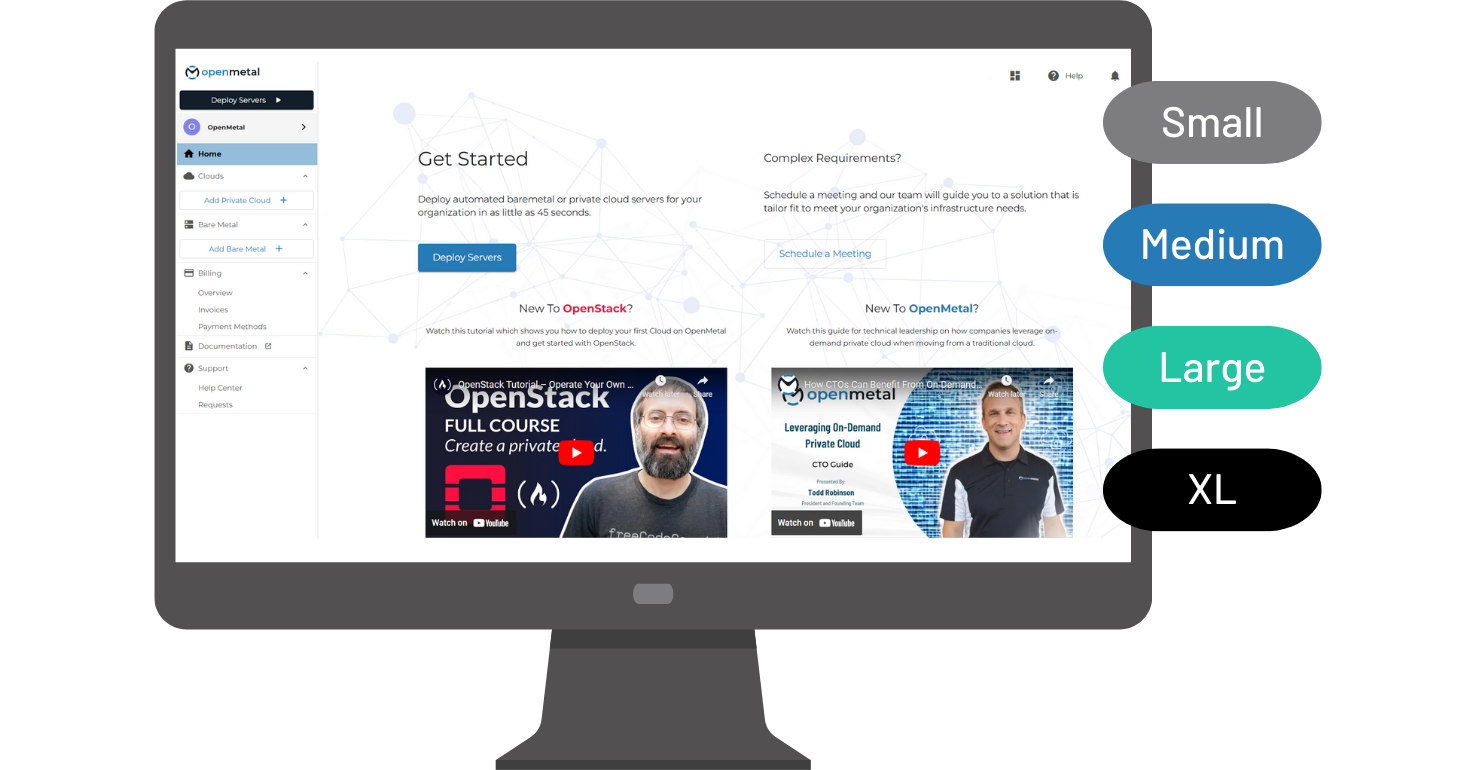

CLOUD MANAGEMENT MADE SIMPLE: Gain complete visibility and control over your infrastructure with our intuitive cloud monitoring tools and web-based management portal.

Direct Access to Experts

“It has to be the direct access on Slack to both the technical and the business team. OpenMetal is the only vendor we have who shares our core value of true white-glove service.”

“The best support team out there. It is like having an extension of our own IT department.”

“Service is Great and Support Seals the Deal.”

“Aside from the obvious cost benefit, the client support and willingness to work with us and innovate solutions differentiates OpenMetal from other cloud providers the most.”

A Product and Team They Can Count On

“Stability, usability, performance, and customer/technical service is off the charts.”

“Great Product, even better technical support.”

“Fantastic product and amazing team behind it.”

“Wide variety of products and ongoing innovations with multi cloud features.”

“Fast to get started, great tech support team!”

Accessibility and Ease of Use

“Easy to integrate with a great open cloud stack.”

“Great product, total control of resources.”

“Easy to use private cloud. Great demo for testing.”

“The support team was easy to deal with and the building of the service was quick and efficient.”

Public Cloud Pricing vs. OpenMetal Private Cloud Savings

Having full control of your OpenMetal Private Cloud hardware means you decide the features, density, approach to storage, vCPU ratio, VM configurations, and more. This translates into your unique approach to your business! Lower your operational costs to boost margins and ROI while staying ahead of the technology curve.

| | Public Cloud Pricing (Azure/AWS/GCP) | OpenMetal Pricing (OpenStack Cloud) |
|---|---|---|
| Virtual Machine | 8 vCPU – 16 GB RAM | 8 vCPU – 16 GB RAM |
| Average Per Month/VM | $205 | $43 |
| Egress | + Egress Fees | Egress Included |

30% to 60% Cloud Cost Savings

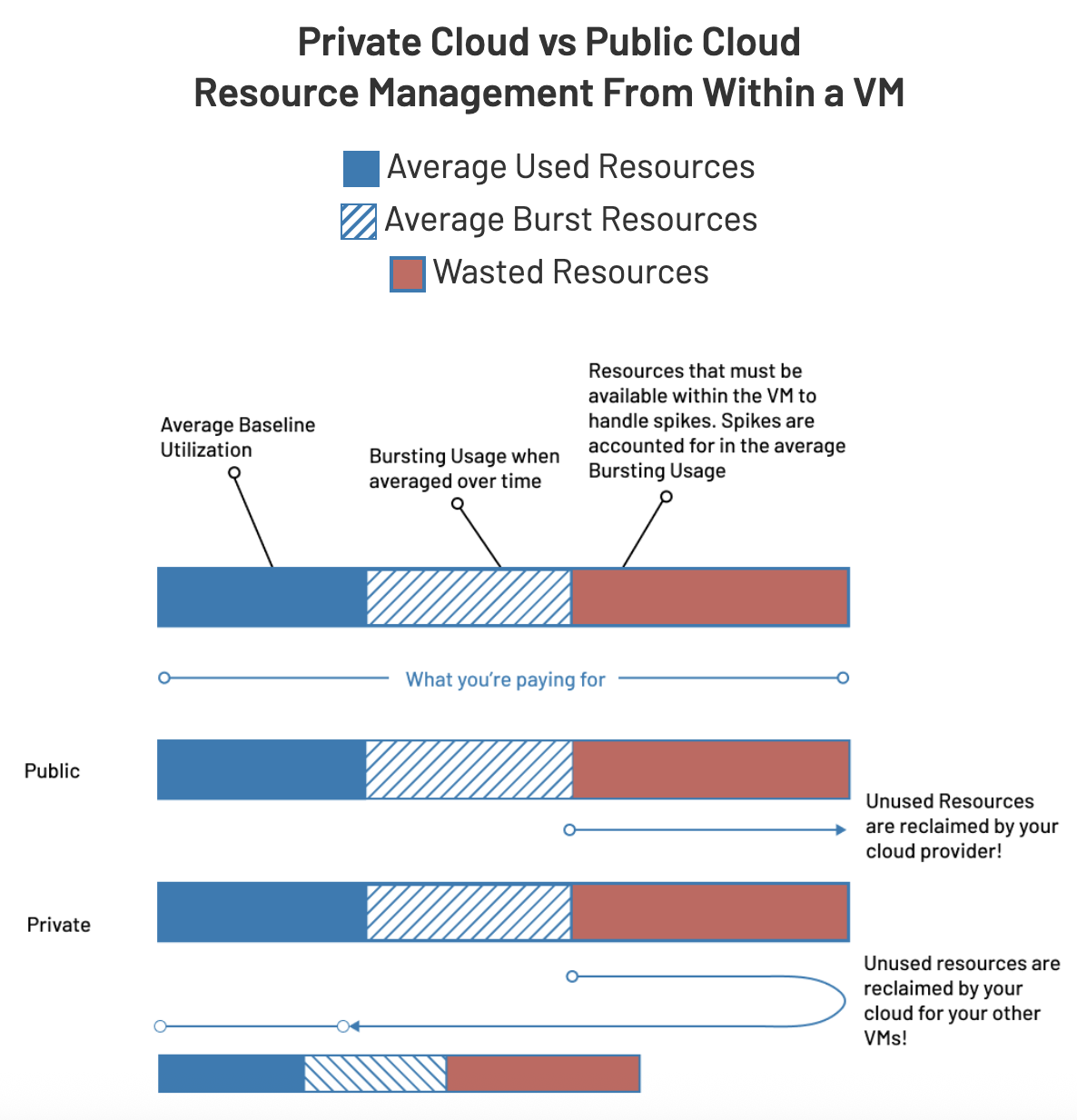

One of the key benefits of your own infrastructure is its cost advantage at scale versus the hyperscaler public clouds. With OpenMetal, your cloud benefits from the fundamental advantage of private infrastructure – you are leasing all the resources of the hardware and not just the virtual resources.

When you provision a VM, you can give it the size you need. Whenever that VM is not consuming its full allocation, the otherwise wasted resources are dynamically available to your other VMs. You can raise performance, reduce staff management time, and lower your cloud costs.

Public clouds are really too expensive. You don’t have to spend that level of investment with a public cloud. The answer is a private cloud. But you need a trusted expert that you can rely on, and that trusted expert is OpenMetal. With OpenMetal you’re going to have huge savings, but you need an expert to navigate the waters because this technology is very different from the public cloud. So that’s why I would tell people to go with OpenMetal.

Tom Fanelli, CEO & Co-Founder @ Convesio

How Convesio Escaped the Public Cloud Trap and Reduced Costs by 50%

Hosted Private Cloud and Platform FAQs

If for any reason it is not a fit, you have a self-service 30-day money-back guarantee or the 30-day free PoC period. No obligations or lock-in.

We believe that our on-demand hosted private clouds are a powerful differentiator in the CTO toolkit that combine the advantages of both public and private cloud hosting. Check out this video from OpenMetal President, Todd Robinson, diving into the cost considerations and target workloads that can empower Chief Technology Officers and other technical executives to harness the potential of private cloud as-a-service for effective cloud cost management.

Unlike many other private cloud providers, we take pride in forming a close partnership with our customers. Of note, accounts are assigned an “Executive Sponsor” from our side. It may be a Director or up to our President. You will have access to a peer that can clear the way for you, deal with any complications on the team or with the cloud, and provide business insights.

With OpenMetal as your hosted private cloud provider, you can rely on transparent pricing with no surprise fees or bills. Egress pricing is fair and dramatically less than public cloud hosting. Check Egress Pricing.

As a fusion of public cloud and private cloud hosting attributes, OpenMetal also brings benefits typically not associated with private cloud. Scale easily, both up and down, just like public cloud hosting, but without the unpredictable costs. You can set budget limits directly inside OpenMetal Central, our top-level control panel.

Achieve your desired performance and cost-saving goals with the tipping point pricing leader.

Simple answer: we have done this before for customers in many different situations. Longer answer: results will differ, and they depend on whether you are over the “tipping point” where private cloud beats public cloud. There are three major factors to consider:

- Is your team highly technical? If so, running an OpenMetal hosted private cloud is not much different, skillset-wise, from what is needed to maintain a healthy fleet of VMs, storage, and networking on public cloud. It can actually reduce the time you spend on troubleshooting and architecting, as our team is much more available than any top engineers from major cloud providers.

- Public cloud is a good solution when cloud spend is below $10k/month. Hosted private cloud is a close cousin to public cloud (spin up and down on demand, transparent pricing), but scale is important to get the most value from private cloud. Talk to our team about your current spend and your goal. If you are below $10k/month, we suggest reading our Public vs Private Cloud Cost Tipping Point article.

- Does your team spend a lot of time trying to optimize the cost of their public cloud resources, or ensuring bills don’t accidentally balloon? Dedicated private clouds are fundamentally more efficient and have fixed costs. This wasted staff time will be greatly reduced or eliminated, so your team can focus where they need to focus.

If one or more of the above are true, we have found that, particularly for deployments on public cloud costing over $20k/month, we will get you close to a 50% reduction. Also, if for any reason we are not a fit, you have a self-service 30-day money-back guarantee or the 30-day free PoC period. No obligations or lock-in.
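As a rough illustration of the spend thresholds described above, the following sketch classifies a monthly public cloud bill and estimates the cost after the roughly 50% reduction mentioned for larger deployments. The function names and the treatment of spend between $10k and $20k are assumptions made for illustration, not an OpenMetal tool or a pricing guarantee.

```python
def tipping_point_hint(monthly_public_cloud_spend_usd: float) -> str:
    """Classify a monthly public cloud spend against the thresholds quoted above."""
    if monthly_public_cloud_spend_usd < 10_000:
        return "below tipping point: public cloud is likely a good fit"
    if monthly_public_cloud_spend_usd > 20_000:
        return "above tipping point: a reduction close to 50% is plausible"
    # Assumption: the text gives no figure for the $10k to $20k range.
    return "in between: review current spend and goals with the provider"


def estimated_monthly_cost(spend_usd: float, reduction: float = 0.50) -> float:
    """Estimate spend after a fractional reduction (0.50 is the 'close to 50%' figure)."""
    if not 0 <= reduction <= 1:
        raise ValueError("reduction must be between 0 and 1")
    return spend_usd * (1 - reduction)


if __name__ == "__main__":
    for spend in (5_000, 15_000, 30_000):
        print(spend, "->", tipping_point_hint(spend))
    print("Estimated cost for $30k/month:", estimated_monthly_cost(30_000))
```

Actual savings depend on workload shape, team skills, and egress volume, so treat the output as a conversation starter rather than a forecast.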

Private clouds hosted by OpenMetal allow you to deploy applications and store data on a flexible infrastructure without having to invest in hardware, software, and, often for on-prem, additional staff. Because our infrastructure is configured to serve a wide array of organizations, your business will benefit from this large scale. This includes the availability of more resources to provision and on-demand scalability options.

For on-premises deployments, many hardware manufacturers will release Reference Architectures that a skilled system admin and engineering team can follow. This typically requires high level skills in networking, server hardware, the cloud software itself, like OpenStack, a storage software like Ceph, and monitoring. The cost of creation of an on-premises private cloud is significant.

Also, with the decision to adopt an on-premises private cloud, a business must account for costs of maintenance. This includes dealing with hardware failures and other possible disaster incidents. This is one of the most important things to evaluate between a hosted vs on-premises private cloud. OpenMetal offers three levels of management: hardware only, assisted management (most popular), and a custom Managed Private Cloud level.

We highly recommend that a finance-driven member is also on the team to focus on “Cost per VM” and “Cost per GB Storage”. These costs are typically used to represent the overall efficiency of your system and should include all costs, CapEx and OpEx. Here at OpenMetal your account manager can help you with cost models.
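A minimal sketch of the two efficiency metrics named above, assuming you have already split your monthly costs into a compute share and a storage share. The class name, field names, and sample figures are hypothetical; your account manager's cost models may allocate costs differently.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    """Monthly costs for one part of the system, covering both CapEx and OpEx."""
    capex_amortized_usd: float  # hardware or lease cost spread per month
    opex_usd: float             # staff time, power, support, bandwidth, etc.

    @property
    def total_usd(self) -> float:
        return self.capex_amortized_usd + self.opex_usd


def cost_per_unit(costs: MonthlyCosts, units: float) -> float:
    """Divide total monthly cost by a unit count (VMs, or GB of usable storage)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return costs.total_usd / units


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    compute = MonthlyCosts(capex_amortized_usd=2_000, opex_usd=1_000)
    storage = MonthlyCosts(capex_amortized_usd=500, opex_usd=250)
    print("Cost per VM:", cost_per_unit(compute, units=100))
    print("Cost per GB Storage:", cost_per_unit(storage, units=50_000))
```

Tracking these two numbers over time makes it easier to compare a private cloud against the per-VM and per-GB prices of a public cloud.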

- An Account Manager, Account Engineer, and an Executive Sponsor will be assigned from our side

- You will be invited to our Slack for Engineer to Engineer support

- Your Account Manager will collect your goals and we will align our efforts to your success

- You can meet with your support team via Google Meet (or the video system of your choice) up to weekly to help keep the process on schedule

- Migration planning if existing workloads are being brought over

- Discussion on agreements and potential discounts via ramps if moving workloads over time

The use of 3 replicas has typically been the standard for storage systems like Ceph. It means that 3 copies exist at all times in normal operation to prevent data loss in the event of a failure. In Ceph’s lingo, if identical data is stored on 3 OSDs and one of the OSDs fails, the two remaining replicas can still tolerate one of them failing without loss of data. Depending on the Ceph settings and the storage available, when Ceph detects the failed OSD, it will wait in the “degraded” state for a certain time, then begin a copy process to recover back to 3 replicas. During this wait and/or copy process, the Ceph cluster is not in danger of data loss if another OSD fails.

There are two downsides to consider. The first downside to 3 replicas is slower maximum performance, as the storage system must write the data 3 times. Your applications may never reach that maximum, though, so it may not be a factor.

The second downside is cost: with 3 replicas, storing 1GB of user data consumes 3GB of storage space.
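The storage cost of replication can be worked out with simple arithmetic. The sketch below uses hypothetical helper names and ignores real-world overheads such as Ceph metadata and the free space a cluster needs for rebalancing, so treat the results as upper bounds on usable capacity.

```python
def raw_storage_needed_gb(user_data_gb: float, replicas: int = 3) -> float:
    """Raw storage consumed when every piece of user data is stored `replicas` times."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return user_data_gb * replicas


def usable_capacity_gb(raw_capacity_gb: float, replicas: int = 3) -> float:
    """Approximate user data that fits in a pool of the given raw size."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_capacity_gb / replicas


if __name__ == "__main__":
    print(raw_storage_needed_gb(1, replicas=3))   # 1GB of user data with 3 replicas
    print(usable_capacity_gb(9_000, replicas=3))  # 9,000GB raw with 3 replicas
```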

With data center grade SATA SSD and NVMe drives, the mean time between failures (MTBF) is better than that of traditional spinning drives. Spinning drive reliability is what drove the initial 3 replica standard. Large trustworthy data sets describe a 4X to 6X MTBF advantage for SSDs over HDDs. This advantage has led many cloud administrators to move to 2 replicas for Ceph when running on data center grade SSDs.

Considerations for 2 replicas:

First, with two replicas, during a failure of one OSD, there is a window in which the loss of a second OSD will result in data loss. This window covers the timeout that allows the first OSD to potentially rejoin the cluster plus the time needed to create a new replica on a different running OSD. This risk is real, but it should be weighed against the very low chance of it occurring and how easily you can recover the data from a backup.

Storage space is more economical as 1GB only consumes 2GB.

Maximum IOPS may increase as Ceph only needs to write 2 copies before acknowledging the write.

Latency may decrease as Ceph only needs to write 2 copies before acknowledging the write.
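The difference in failure tolerance between 2 and 3 replicas can be expressed as a small calculation. This is a simplified model with hypothetical function names: it counts only the OSDs holding copies of the same data and assumes no recovery has completed between failures.

```python
def surviving_copies(replicas: int, failed_osds: int) -> int:
    """Copies of a piece of data left after some of the OSDs holding it have failed."""
    if replicas < 1 or failed_osds < 0:
        raise ValueError("replicas must be >= 1 and failed_osds must be >= 0")
    return max(replicas - failed_osds, 0)


def further_failures_tolerated(replicas: int, failed_osds: int) -> int:
    """Additional OSD failures that can occur before the last copy is lost."""
    return max(surviving_copies(replicas, failed_osds) - 1, 0)


if __name__ == "__main__":
    for replicas in (3, 2):
        print(
            f"{replicas} replicas, 1 OSD failed:",
            surviving_copies(replicas, 1), "copies remain,",
            further_failures_tolerated(replicas, 1), "further failure(s) tolerated",
        )
```

With 3 replicas and one failed OSD, one more failure can be absorbed; with 2 replicas, the single remaining copy has no such margin until Ceph finishes creating a new replica.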

We offer two levels of support to allow companies to choose what is best for them. A third, custom level is also available.

All clouds come with the first level of support included within the base prices:

- All hardware, including servers, switches/routers, power systems, cooling systems, and racks, is handled by OpenMetal. This includes drive failures, power supply failures, chassis failures, etc. Though very rarely needed, assistance with recovery of any affected cloud services due to hardware failure is also included.

- Procurement, sales taxes, fit for use as a cloud cluster member, etc. are all handled by OpenMetal.

- Provisioning of the initial, known good cloud software made up of Ceph and OpenStack.

- Providing new, known good versions of our cloud software for optional upgrades. OpenMetal may, at its discretion, assist with upgrades free of charge, but upgrades are the responsibility of the customer.

- Support to customer’s operational/systems team for cloud health issues.

The second level of support is termed Assisted Management and has a base plus hardware unit fee. In addition to the first level of support, the following is offered with Assisted Management:

- Named Account Engineer.

- Engineer to Engineer support for cloud.

- Engineer to Engineer advice on key issues or initiatives your team has for workloads on your OpenMetal Cloud.

- Upgrades are handled jointly with OpenMetal assisting with cloud software upgrades.

- Assisting with “cloud health” issues, including that we jointly monitor key health indicators and our 24/7 team will react to issues prior to or with your team.

- Monthly proactive health check and recommendations.

In addition, OpenMetal publishes extensive documentation for our clouds and many System Administration teams prefer to have that level of control with their private cloud hosting.

Skilled Linux System Administrators can learn to maintain an OpenMetal Cloud in about 40 hours using our provided Cloud Administrator Guides, and we will give you free time on non-production test clouds for this purpose. Most teams view this new technology as a learning opportunity, and as the workload shifts to your hosted private cloud, time spent on the old systems is reduced accordingly.

We also offer our Assisted Management level of service. This is popular for first-time customers and is quite reasonable. It covers most situations: we jointly monitor “cloud health”, and our 24/7 team will react to issues before or alongside your team.

Your OpenMetal Hosted Private Cloud is “Day 2 ready” and is relatively easy to maintain but does require a solid set of Linux System Administration basics to handle any customizations or “cloud health” work.

For companies without a Linux Admin Ops team, we recommend our Assisted Management level of service. It covers most situations: we jointly monitor “cloud health”, and our 24/7 team will react to issues before or alongside your team.

You may also consider having your team grow into running the underlying cloud over time. A properly-architected OpenStack Cloud can have very low time commitments to maintain in a healthy state. A junior Linux System Administrator can learn to maintain an OpenMetal Cloud in about 120 hours using our provided Cloud Administrator Guides and we will give you free time on non-production test clouds for this purpose. OpenMetal is unique in offering free time for customers on completely separate on-demand OpenStack Clouds. This new learning opportunity could dramatically change both your staff and your company’s view on private cloud.

Your servers are 100% dedicated to you. The crossover between your OpenMetal Cloud and the overall data center comes at the physical switch level for internet traffic and for IPMI traffic. For internet traffic, you are assigned a set of VLANs within the physical switches. Those VLANs only terminate on your hardware. For administrative purposes, your hardware’s […]

[…] those departments or people. You can set resource limitations that will be enforced by OpenStack. Whether a project is managed via the API or through Horizon, OpenStack will enforce your policies. As OpenStack is an API-first system, there is often more functionality available via the API than within Horizon. For Cloud Administrators, a robust CLI that uses the API is the most popular way to administer OpenStack.

OpenMetal’s Hosted Private Cloud is built on OpenStack, the leading open source standard, providing full API access for automation (via Terraform, Ansible, etc.) and direct control over hardware resources without licensing fees. The platform features cutting-edge software-defined networking that allows you to create thousands of autonomous VPCs with dedicated routers, load balancers, and firewalls, all backed by up to 20 Gbps public internet access per server. IP flexibility is a priority, offering IPv4 by default, optional IPv6, and the ability to bring your own /24 blocks or SWIP existing ones.

For reliability and protection, the system includes robust security measures such as standard DDoS protection (up to 10 Gbps per IP), 24/7 onsite data center personnel, and comprehensive compliance certifications including SOC 2, PCI-DSS, and HIPAA. Data is managed via high-availability Ceph storage that supports scalable block and S3-compatible object storage, ranging from networked triplicate copies to high-performance local NVMe. All of this is backed by a strong network uptime SLA of 99.96%, with historical performance consistently exceeding 99.99%.
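To put the uptime percentages above in concrete terms, this short sketch converts an uptime percentage into the downtime it allows over a period. The 30-day month is an assumption for illustration; the SLA itself defines the actual measurement window and exclusions.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted over `days` at the given uptime percentage."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


if __name__ == "__main__":
    for pct in (99.96, 99.99):
        minutes = allowed_downtime_minutes(pct)
        print(f"{pct}% uptime allows about {minutes:.2f} minutes per 30 days")
```

Over a 30-day month, 99.96% corresponds to about 17.28 minutes of downtime and 99.99% to about 4.32 minutes.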

First, a little background on Ceph and creating Storage Pools. The following is important.

All servers will have at least one usable drive for data storage, including servers labeled as compute. You have the option to use this drive for LVM based storage, ephemeral storage, or as part of Ceph. Each drive is typically performing only 1 duty and that is our default recommendation*.

For Ceph, if the drive types differ (i.e., SATA SSD vs NVMe SSD vs spinners), you should not join them together within one pool. Ceph can support multiple pools with different performance characteristics, but you should not mix drive types within a pool. To create a pool that supports a replication of 2, you will need at least 2 servers. For a replication of 3, you will need 3 servers. For erasure coding, you typically need 4 or more separate servers.
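The pool guidelines above can be captured as a simple pre-flight check. This is a planning sketch with hypothetical names and scheme labels; it does not call Ceph, and the erasure coding minimum of 4 is the typical figure quoted above rather than a hard rule for every profile.

```python
# Minimum server counts taken from the guidance above.
MIN_SERVERS = {"replica2": 2, "replica3": 3, "erasure": 4}


def pool_plan_issues(drive_types: list[str], server_count: int, scheme: str) -> list[str]:
    """Return a list of problems with a planned pool; an empty list means none found."""
    if scheme not in MIN_SERVERS:
        raise ValueError(f"unknown scheme: {scheme!r}")
    issues = []
    distinct = sorted(set(drive_types))
    if len(distinct) > 1:
        issues.append(f"mixed drive types in one pool: {', '.join(distinct)}")
    minimum = MIN_SERVERS[scheme]
    if server_count < minimum:
        issues.append(f"{scheme} needs at least {minimum} servers, got {server_count}")
    return issues


if __name__ == "__main__":
    print(pool_plan_issues(["nvme", "nvme", "nvme"], server_count=3, scheme="replica3"))
    print(pool_plan_issues(["nvme", "sata_ssd"], server_count=2, scheme="replica3"))
```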

If you are creating a large storage pool with spinners, we have advice specific to using the NVMe drives as an accelerator for the storage process and as part of the object gateway service. Please check with your account manager for more information.

*Of note, though this is not a common scenario yet, our high-performance NVMe drives often deliver far more IO than typical applications require, so splitting a drive to serve both as part of Ceph and as local high-performance LVM storage is possible with good results.

With that being said, there are several ways to grow your compute and storage past what is within your Private Cloud Core (PCC).

You can add additional matching or non-matching compute nodes. Keep in mind that during a failure scenario, you will need to rebalance the VMs from that node to nodes of a different VM capacity. Though not required, it is typical practice to keep a cloud as homogeneous as possible for management ease.
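When nodes have different capacities, it helps to check ahead of time whether the remaining nodes could take over the workload of a failed one. The sketch below is a coarse aggregate check with hypothetical names and numbers; it compares total spare vCPUs only and does not verify that each individual VM fits on a specific node.

```python
def can_absorb_node_failure(
    capacity_by_node: dict[str, int],
    used_by_node: dict[str, int],
    failed_node: str,
) -> bool:
    """True if the spare capacity on surviving nodes covers the failed node's usage."""
    displaced = used_by_node[failed_node]
    spare = sum(
        capacity_by_node[node] - used_by_node[node]
        for node in capacity_by_node
        if node != failed_node
    )
    return spare >= displaced


if __name__ == "__main__":
    # Hypothetical vCPU figures for two matching nodes and one smaller node.
    capacity = {"node-a": 64, "node-b": 64, "node-c": 32}
    used = {"node-a": 40, "node-b": 40, "node-c": 30}
    for node in capacity:
        print(node, "failure absorbable:", can_absorb_node_failure(capacity, used, node))
```

The same check can be repeated for RAM or storage; a homogeneous cloud makes the result easier to reason about because every node offers the same headroom.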

You can add additional matching converged servers to your PCC. Typically you will join the SSD with your Ceph as a new OSD, but the drive on the new node can be used as ephemeral storage or as traditional drive storage via LVM. If joined to Ceph, you will see that Ceph will automatically balance existing data onto the new capacity. For compute, once merged with the existing PCC, the new resources will become available inside OpenStack.

You can create a new converged cluster. This allows you to select servers that are different from your PCC servers. You will need at least 2 servers for the new Ceph pool; 3 or more is most typical. Of note, one Ceph cluster can manage many different pools, but you can also run multiple Ceph clusters if you see that as necessary.

You can create a new storage cloud. This is typically done for large-scale implementations when the economy of scale favors separating compute and storage. This is also done when object storage is a focus of the storage cloud. Our blended storage and large storage have up to 12 large capacity spinners. They are available for this use and others.

As a customer-centric business, we want to provide paths to help you succeed. If you are finding a barrier to your success within our system, please escalate your contact within OpenMetal Central. There we provide direct contact to our product manager, our support manager, and to our company president.

In general, we manage the networks above your OpenMetal Clouds and we supply the hardware and parts replacements as needed for hardware in your OpenMetal Clouds.

OpenMetal Clouds themselves are managed by your team. If your team has not managed OpenStack and Ceph private clouds before, we have several options to be sure you can succeed.

- Complimentary onboarding training matched to your deployment size and our joint agreements

- Self-paced free onboarding guides

- Free test clouds, some limits apply

- Paid additional training, coaching, and live assistance

- Complimentary emergency service – please note this can be limited in the case of overuse. That being said, we are nice people and are driven to see you succeed with an open source alternative to the mega clouds.

In addition, we may maintain a free “cloud in a VM” image you can use for testing and training purposes within your cloud.