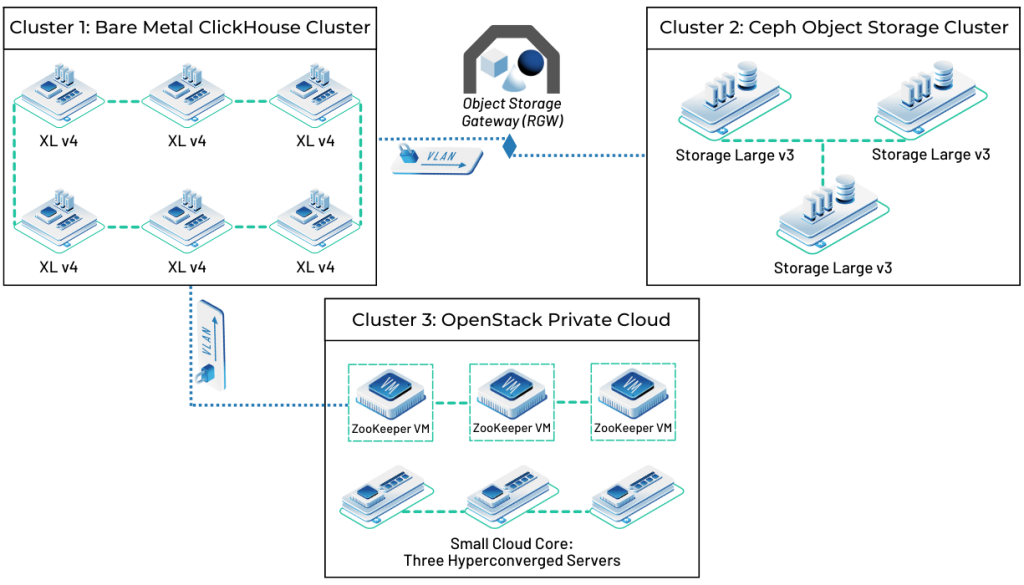

Hosting a Powerful ClickHouse Deployment with a Mix of Bare Metal, OpenStack, and Ceph

This post walks through the real-world architecture of a high-performance ClickHouse cluster that combines bare metal servers, an OpenStack cloud, and Ceph object storage.

The challenge? Ingesting and analyzing huge streams of “hot” real-time security event data while controlling the cost of an ever-growing set of “cool”, but still critical, historical data.

Why This Architecture Works

This architecture has three infrastructure tiers, joined by a hybrid network, and each part is chosen to support ClickHouse performance and scalability:

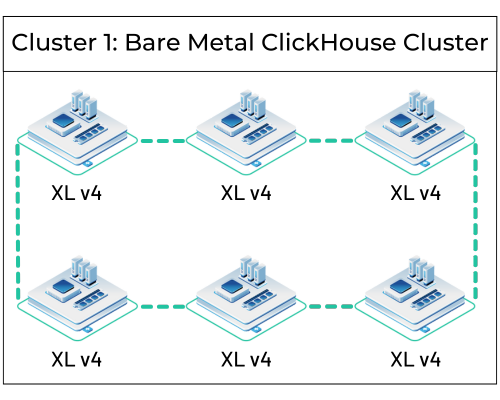

- Bare Metal for ClickHouse: Running ClickHouse directly on bare metal removes the overhead of virtualization, so the database can use all available hardware resources. This is important for achieving the extremely low latency required for real-time security analysis.

- OpenStack for Control and Flexibility: The OpenStack private cloud provides a flexible and manageable environment for supporting services such as ZooKeeper, load balancers, and other supporting applications, all while remaining connected to the bare metal servers.

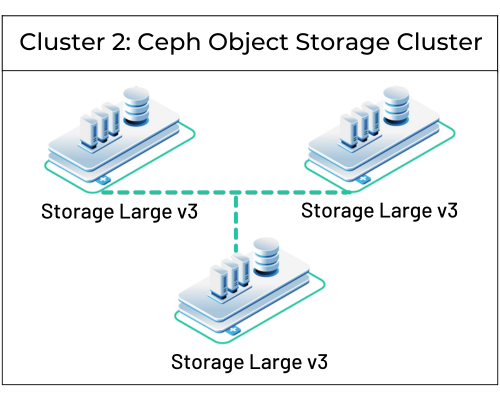

- Ceph for Cost-Effective Scalability: Ceph’s object storage provides a scalable and cost-effective way to store large volumes of historical data. This lets the customer, a cybersecurity firm, meet compliance requirements and perform long-term trend analysis.

- Hybrid Network: Merging VLANs lets the virtual servers communicate directly with the bare metal servers over a high-bandwidth, low-latency path.
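The hot/cool split behind these tiers can be illustrated with a small sketch. This is not the customer's configuration. The 30-day window, the tier names, and the `Partition` and `choose_tier` helpers are all hypothetical, and a real deployment would more likely express a rule like this in ClickHouse storage settings than in application code. It only shows the idea: recent data stays on the bare metal servers, and older data moves to Ceph object storage.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention window for "hot" data; the source does not state one.
HOT_RETENTION = timedelta(days=30)

# Hypothetical tier labels for illustration only.
HOT_TIER = "bare_metal_local"
COOL_TIER = "ceph_object_storage"


@dataclass(frozen=True)
class Partition:
    """A chunk of event data, identified by the date of its newest event."""
    name: str
    newest_event: date


def choose_tier(partition: Partition, today: date,
                hot_retention: timedelta = HOT_RETENTION) -> str:
    """Return the tier a partition belongs on, based only on its age."""
    age = today - partition.newest_event
    return HOT_TIER if age <= hot_retention else COOL_TIER


if __name__ == "__main__":
    today = date(2024, 6, 30)
    partitions = [
        Partition("events_2024_06", date(2024, 6, 15)),
        Partition("events_2024_01", date(2024, 1, 31)),
    ]
    for p in partitions:
        print(p.name, "->", choose_tier(p, today))
```

An age-based rule is only one option. A rule based on how full the local disks are would be another reasonable way to decide when data moves to the cool tier.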

This deployment is not a theoretical exercise! It’s a live production system currently supporting a major cybersecurity firm. With this hybrid approach of bare metal, OpenStack, and Ceph, OpenMetal is a great fit to power demanding big data solutions like ClickHouse.

This architecture delivers a powerful platform for real-time analytics and provides insights that underpin the client’s security operations. Because each workload runs on the infrastructure that suits it, and all of it is connected, the customer can deploy this solution easily while keeping costs relatively low and performance high.

Interested in ClickHouse on OpenMetal but not sure where to start? Check out our quick start installation guide for ClickHouse on OpenMetal.