In this article

- Understanding Infrastructure Growth Patterns

- XS Tier: Your Learning and Testing Ground

- Small Tier: The Foundation for Production

- Medium Tier: Scaling for Growth

- Large Tier: The Production Workhorse

- XL Tier: Purpose-Built for Specialized Workloads

- XXL Tier: Enterprise-Scale Infrastructure

- The Power of Platform Consistency

- Real-World Migration Patterns

- Making the Right Choice for Your Business Stage

Growing a business means navigating a constant tension: you need infrastructure that supports today’s workloads without boxing you into tomorrow’s constraints. Too often, companies find themselves locked into platforms that work at one scale but require painful migrations when growth demands more capacity or different capabilities.

The answer isn’t simply “throw more resources at it.” Effective capacity planning requires understanding your baseline requirements, anticipating scalability needs, and matching infrastructure choices to both current demands and future trajectories. This becomes far more difficult when moving between tiers means replatforming entirely: rewriting configurations, adapting tooling, and retraining teams.

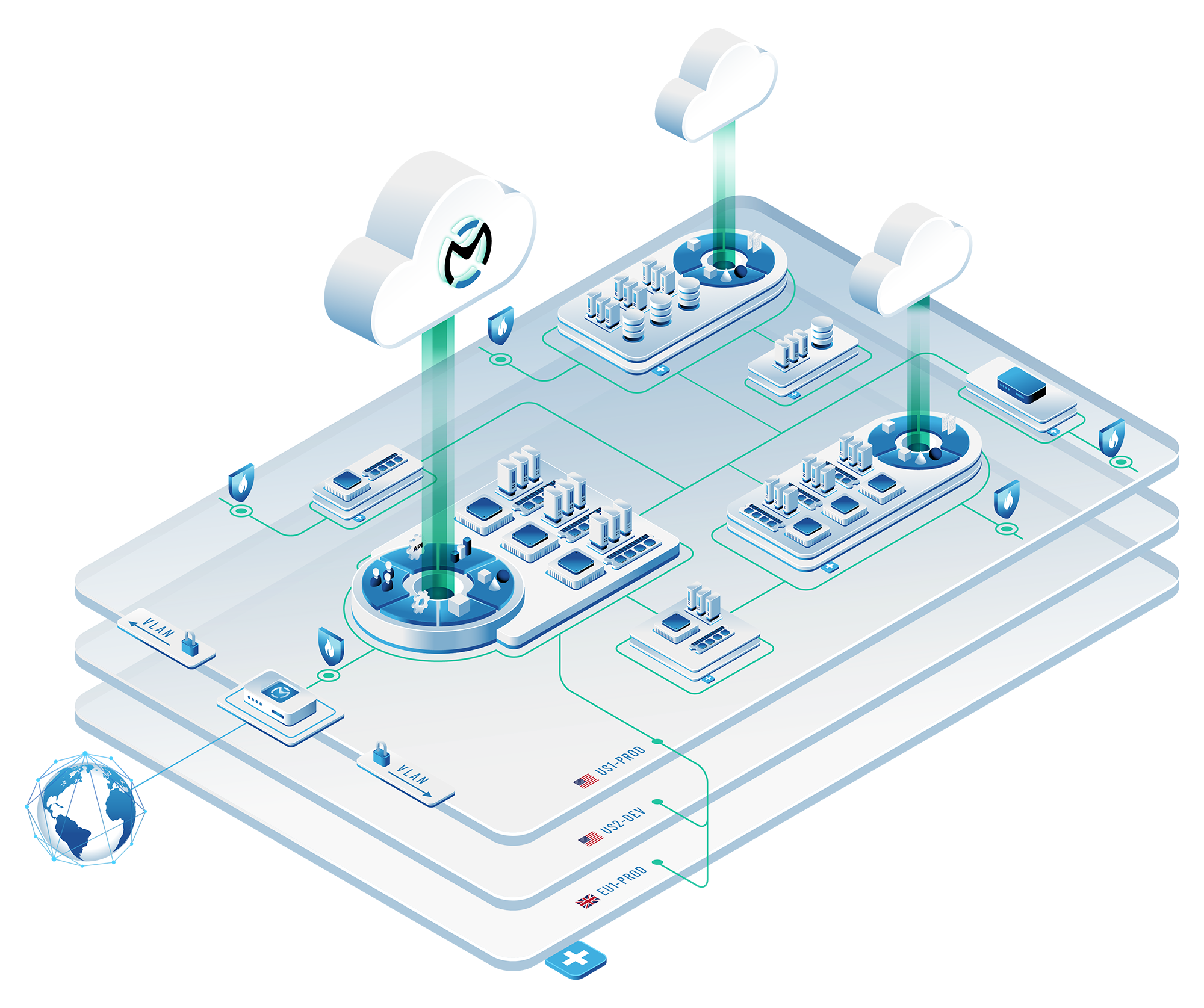

OpenMetal’s approach eliminates this friction by offering six distinct server tiers—XS, Small, Medium, Large, XL, and XXL—all running identical OpenStack and Ceph architectures. Whether you’re spinning up your first proof-of-concept or consolidating enterprise workloads, the underlying platform remains consistent. Storage pools work the same way. Networking configurations translate directly. OpenStack APIs don’t change. And you’re free to integrate bare metal servers and grow your storage clusters as needed.

An application developed on the Small tier can migrate to Large or XL without architectural changes. No vendor lock-in. No surprise compatibility issues. It’s an all-in-one solution for predictable scaling that matches your business stage.

Understanding Infrastructure Growth Patterns

Before looking at specific tiers, it helps to understand how infrastructure needs typically evolve. Cloud capacity planning isn’t a one-time task but a continuous process involving demand forecasting, performance monitoring, cost optimization, and contingency planning.

Most growing companies follow recognizable patterns. Early-stage startups need environments for testing concepts and validating ideas before committing to production infrastructure. As products gain traction, the focus shifts to minimum viable production configurations that can handle real users without breaking the bank. Growth-stage companies require infrastructure that can absorb traffic spikes, support expanding teams, and maintain performance under increasing loads.

The challenge lies in balancing three factors that constantly compete for priority: availability, performance, and cost. Overprovisioning wastes money on idle resources. Underprovisioning causes performance bottlenecks and potential downtime. Finding the right balance requires both understanding your current baseline and accurately forecasting future demand.
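A simple way to start forecasting is to estimate how long current capacity will last. The sketch below assumes demand grows at a constant compound monthly rate, which real workloads rarely do exactly. It reports the months remaining before usage crosses a utilization ceiling. The function name `months_until_ceiling`, the 80% default ceiling, and the example figures are illustrative assumptions, not OpenMetal guidance.

```python
import math


def months_until_ceiling(current_usage: float, capacity: float,
                         monthly_growth: float, ceiling: float = 0.8) -> float:
    """Estimate months until usage reaches ceiling * capacity.

    Assumes compound growth: usage(t) = current_usage * (1 + monthly_growth) ** t.
    Returns 0.0 if usage is already at or above the ceiling and math.inf
    if demand is flat or shrinking.
    """
    if current_usage <= 0 or capacity <= 0:
        raise ValueError("usage and capacity must be positive")
    limit = ceiling * capacity
    if current_usage >= limit:
        return 0.0
    if monthly_growth <= 0:
        return math.inf
    # Solve current_usage * (1 + g) ** t == limit for t.
    return math.log(limit / current_usage) / math.log(1 + monthly_growth)
```

Take 200GB of RAM in use on a 384GB cluster, growing 5% per month. This reaches an 80% ceiling in roughly 8.8 months. Re-running the estimate as monitoring data arrives matters more than the precision of any single forecast.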

Traditional infrastructure forced companies to guess at future needs and lock in capacity decisions years in advance. Modern cloud platforms offer more flexibility, but this creates new challenges: tracking resource consumption across multiple workloads, adjusting allocations as needs change, and managing costs that can spiral unexpectedly.

OpenMetal’s tier structure addresses these challenges by providing clear upgrade paths matched to typical business stages, all while maintaining platform consistency that eliminates replatforming friction.

XS Tier: Your Learning and Testing Ground

The XS tier serves a specific purpose: giving teams a place to learn OpenStack, test concepts, and validate approaches before committing to production infrastructure. Available only on v1 hardware, XS Cloud Cores start around $600 monthly for a three-server configuration.

This price point makes XS accessible for proof-of-concepts and training environments, but the tier comes with important limitations. OpenStack’s control plane—the management layer that orchestrates your entire cloud—consumes roughly 25-30% of available server resources. On smaller hardware, this leaves limited capacity for actual workloads.

What XS Handles Well

XS excels at four specific use cases. First, it provides an excellent OpenStack learning environment. If you’re training staff on OpenStack operations or studying for certifications, XS offers a complete production-like platform without production costs. You can experiment with networking configurations, storage pools, and instance management without worrying about breaking critical systems.

Second, XS works for proof-of-concept testing. Before committing to larger infrastructure investments, you can validate that OpenStack meets your needs, test application compatibility, and verify operational workflows. The platform you use for testing is architecturally identical to what you’ll run in production, ensuring your PoC findings translate directly.

Third, personal labs and certification study benefit from XS’s low cost and full feature set. Anyone pursuing OpenStack certifications needs hands-on practice with real infrastructure. XS provides this without requiring expensive hardware commitments.

Fourth, short-term development projects that don’t require significant compute resources can run effectively on XS. If you’re building something small or temporary, XS gives you a full OpenStack environment without overprovisioning.

What XS Cannot Do

XS isn’t suitable for production workloads of any kind. The control plane overhead leaves insufficient headroom for running reliable production services. Performance testing also falls outside XS’s capabilities. You can’t accurately gauge how applications will perform at scale when a quarter or more of the available resources are consumed by infrastructure management.

Long-term development environments typically outgrow XS quickly. As codebases expand and development workflows mature, teams need more compute capacity than XS can provide. At that point, migrating to Small tier becomes necessary.

Small Tier: The Foundation for Production

Small tier represents the minimum viable production configuration for OpenStack infrastructure. Available from v2 hardware onwards, Small servers provide 8 cores, 128GB RAM, and 3.2TB storage per server. A three-server Cloud Core runs approximately $900 monthly.

The jump from XS to Small isn’t just about raw resources. Small tier reduces OpenStack control plane overhead to approximately 10-15% of total capacity, leaving substantially more headroom for workload operations. This efficiency makes Small viable for production use cases that XS simply cannot support.
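The effect of control plane overhead on usable capacity is simple arithmetic. This sketch treats overhead as a flat fraction of each resource. That is a simplification, because the control plane does not consume CPU, RAM, and storage in equal proportions. The function name `usable_range` is hypothetical.

```python
def usable_range(servers: int, per_server: float,
                 overhead_low: float, overhead_high: float) -> tuple[float, float]:
    """Return (worst_case, best_case) capacity left for workloads.

    Overhead is modeled as a flat fraction of the resource, e.g. 0.10-0.15
    for a 10-15% control plane share. Works for any unit (GB of RAM, cores).
    """
    if not 0 <= overhead_low <= overhead_high < 1:
        raise ValueError("expected 0 <= overhead_low <= overhead_high < 1")
    total = servers * per_server
    # Higher overhead leaves less for workloads, so it bounds the worst case.
    return total * (1 - overhead_high), total * (1 - overhead_low)
```

Small tier has three servers at 128GB each. For that cluster, `usable_range(3, 128, 0.10, 0.15)` returns roughly (326.4, 345.6): about 326-346GB of the 384GB total is left for workloads. The same call with 8 cores per server gives roughly 20-22 of the 24 cores.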

The Small Tier Sweet Spot

Small tier shines for companies at a specific inflection point: you’ve validated your concept, you have real users, but you’re not yet experiencing high traffic volumes. This tier supports production workloads for low-traffic applications, typically those serving fewer than 50 concurrent users.

For development and staging environments requiring production parity, Small provides the same architectural foundation as larger tiers. Your staging environment on Small tier operates identically to your production environment on Large, ensuring accurate testing without wasting resources on oversized staging infrastructure.

Small SaaS applications find a natural home on Small tier. If you’re running internal tools, admin portals, or customer-facing applications with modest traffic, Small delivers production-grade reliability and high availability at an accessible price point. The three-server configuration provides true redundancy: your application remains available even if one server fails.

CI/CD infrastructure for small teams, typically under 10 developers, runs effectively on Small tier. Build pipelines, testing environments, and deployment automation don’t require massive resources when team sizes remain modest. Small provides enough capacity to run these workflows reliably without the expense of larger hardware.

Microservices architectures with 5-10 services fit comfortably on Small tier. While massive microservices deployments demand more resources, smaller implementations common at early-stage companies work well within Small’s capacity envelope.

The Value Proposition

Small tier’s real value lies in delivering true production-grade infrastructure at startup-friendly pricing. Many companies at this stage face an uncomfortable choice: run production workloads on single-server configurations that lack redundancy, or overspend on infrastructure they don’t yet need.

Small eliminates this choice. The three-server architecture provides genuine high availability. If one server fails, your workloads automatically migrate to surviving nodes. Storage remains accessible through Ceph’s distributed architecture. Your applications stay online.
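The arithmetic behind “survives one server failure” is worth making explicit. Suppose load is spread evenly across n identical servers and one fails. The remaining n - 1 servers must then absorb everything, so average utilization before the failure can be at most (n - 1)/n. The sketch below encodes that check. It ignores Ceph recovery traffic and control plane load, and the function names are hypothetical.

```python
def max_safe_utilization(servers: int, failures_tolerated: int = 1) -> float:
    """Highest average utilization at which survivors can absorb failed nodes' load.

    With load spread evenly over `servers` identical nodes, the survivors must
    carry everything after `failures_tolerated` nodes fail, so pre-failure
    utilization must not exceed (servers - failures_tolerated) / servers.
    """
    if not 0 <= failures_tolerated < servers:
        raise ValueError("at least one server must survive")
    return (servers - failures_tolerated) / servers


def survives_failure(utilizations: list[float], failures_tolerated: int = 1) -> bool:
    """Check whether current per-server utilizations (0.0-1.0) fit on the survivors.

    Each value is a fraction of one server's capacity, so the total load must
    not exceed the number of surviving servers.
    """
    survivors = len(utilizations) - failures_tolerated
    if survivors < 1 or failures_tolerated < 0:
        raise ValueError("at least one server must survive")
    return sum(utilizations) <= survivors
```

For a three-server Cloud Core, `max_safe_utilization(3)` is about 0.67. If the three servers together average more than about 67% utilization, a single failure leaves the survivors overcommitted.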

This matters more than it might seem. Early-stage capacity planning often involves identifying “what we expect to break first”: which service is most likely to fail and require the most operational attention. With Small tier’s built-in redundancy, infrastructure failure drops dramatically lower on that risk list. You can focus engineering time on application development rather than managing infrastructure fragility.

Medium Tier: Scaling for Growth

Medium tier addresses a specific business stage: you’ve achieved product-market fit, traffic is growing consistently, and your team is expanding. Your infrastructure needs more capacity, but you’re not yet operating at enterprise scale. Medium tier delivers the resources needed to support this growth phase.

Medium v4 hardware features dual Intel Xeon Silver 4510 processors, 256GB DDR5 RAM, and 20Gbps networking per server. A three-server configuration starts around $2,400 monthly. Like Small tier, OpenStack control plane operations consume approximately 10-15% of resources, leaving 85-90% available for your workloads.

Network Performance Advantages

Medium tier’s most distinctive feature is its 20Gbps networking, double the bandwidth available on Small tier. This makes Medium particularly suitable for network-intensive applications. If you’re running video streaming services, file sharing platforms, or CDN origin servers, network bandwidth typically becomes the primary bottleneck before compute or memory constraints appear.

Infrastructure capacity planning requires tracking key metrics including CPU utilization, memory usage, and crucially, network throughput. For applications moving large volumes of data, network capacity often determines overall performance more than raw compute power.

Medium’s 20Gbps connectivity allows these workloads to operate without constant network saturation. Video transcoding pipelines can ingest and deliver content without bottlenecking. File synchronization services can handle concurrent uploads and downloads from multiple users. Content delivery infrastructure can serve media assets quickly enough to maintain smooth streaming experiences.
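A back-of-the-envelope estimate shows why link speed matters for streaming. The sketch assumes a fixed per-stream bitrate and reserves 20% of the link for protocol overhead and bursts. Both figures, and the 6 Mbps example bitrate, are illustrative assumptions rather than measurements. The function name is hypothetical.

```python
def max_concurrent_streams(link_gbps: float, stream_mbps: float,
                           usable_fraction: float = 0.8) -> int:
    """Upper bound on simultaneous fixed-bitrate streams a link can carry.

    usable_fraction reserves headroom for protocol overhead and bursts;
    0.8 is an illustrative assumption, not a measured value.
    """
    if link_gbps <= 0 or stream_mbps <= 0 or not 0 < usable_fraction <= 1:
        raise ValueError("invalid link, stream, or usable fraction")
    usable_mbps = link_gbps * 1000 * usable_fraction  # 1 Gbps = 1000 Mbps
    return int(usable_mbps // stream_mbps)
```

Under those assumptions, `max_concurrent_streams(10, 6)` gives 1,333 streams on a 10Gbps link. `max_concurrent_streams(20, 6)` gives 2,666 on Medium’s 20Gbps, per server.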

Growth-Stage Production Workloads

Beyond network performance, Medium tier supports growing production deployments serving 50-200 concurrent users. This represents the transition point where Small tier’s capacity becomes constraining but Large tier would be overprovisioned.
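The concurrent-user ranges quoted in this article can be written down as a starting-point lookup. Concurrent users are only a rough proxy for load. The article also gives no range for 200-500 users or for more than 5,000, so the sketch below flags those cases instead of guessing. The function `suggest_tier` is hypothetical.

```python
def suggest_tier(concurrent_users: int) -> str:
    """Map concurrent users to a starting tier using this article's ranges.

    Ranges: Small (<50), Medium (50-200), Large (500-5,000). Gaps the
    article does not cover return a note instead of a tier name.
    """
    if concurrent_users < 0:
        raise ValueError("concurrent_users must be non-negative")
    if concurrent_users < 50:
        return "Small"
    if concurrent_users <= 200:
        return "Medium"
    if concurrent_users < 500:
        return "between Medium and Large: measure before choosing"
    if concurrent_users <= 5000:
        return "Large"
    return "beyond the Large range: evaluate XL/XXL against the workload profile"
```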

Medium works well for Series A and B companies scaling beyond initial infrastructure. You’re no longer a tiny startup, but you’re not yet operating at massive scale. You have multiple development teams, more complex applications, and increasing customer counts. Medium provides the resources to support this expansion without forcing you to jump immediately to enterprise-tier pricing.

Medium-scale SaaS platforms with moderate database requirements fit Medium’s capacity envelope. Your database needs more memory than Small provides, but doesn’t yet require the 512GB available on Large servers. Your application server fleet needs more CPU cores to handle increasing request volumes, but not the 32+ cores per server that Large delivers.

For companies running dedicated control planes for 10-20 node clusters, Medium provides enough resources to separate management workloads from production workloads. This architectural pattern improves reliability and makes capacity planning more predictable.

Multi-tenant hosting platforms with moderate isolation needs also benefit from Medium’s balanced specifications. You can run multiple customer workloads with sufficient resource isolation while maintaining cost-effective operations.

Development and Staging at Scale

Medium tier increasingly serves as production-parity development and staging environments for companies running larger production infrastructure. If your production environment operates on Large or XL tier, creating staging environments on identical hardware becomes expensive quickly.

Medium provides enough resources to accurately test production workloads while costing significantly less than matching production hardware exactly. Your staging environment runs the same OpenStack and Ceph architecture, ensuring deployment procedures and operational tooling work identically. You can validate database migrations, test new application versions, and verify system behavior under realistic loads.

This approach to capacity management balances cost efficiency with testing accuracy. You avoid underprovisioning staging environments so severely that tests become meaningless, while also avoiding the expense of exact production mirrors for non-production workloads.

Large Tier: The Production Workhorse

Large tier is OpenMetal’s most popular configuration, and that popularity stems from hitting the sweet spot for most production workloads. Available across all hardware generations, Large configurations feature 512GB RAM per server with storage options ranging from 3.2TB to 12.8TB, depending on your specific needs.

OpenStack overhead stays at approximately 10-15% on every tier from Small upward. This might seem counterintuitive, since you might expect larger servers to devote proportionally more resources to management operations. In practice, the control plane’s share stays roughly constant. Larger servers typically support proportionally larger workload clusters, so the management burden grows in step with the hardware rather than outpacing it.

The 80% Use Case

Large tier handles approximately 80% of production use cases. This isn’t because it’s the biggest or most powerful option; it’s because most production workloads fit comfortably within Large’s capacity envelope without requiring specialized hardware.

High-performance databases requiring 32+ cores and 512GB RAM run well on Large tier. Whether you’re operating PostgreSQL, MySQL, MongoDB, or other database systems, Large provides sufficient resources for database workloads serving hundreds to thousands of concurrent users. The memory capacity supports extensive caching, reducing disk I/O and improving query performance.

Container orchestration clusters using Kubernetes or OpenShift running 50-200 pods find a natural home on Large tier. Modern application architectures increasingly rely on containerized deployments, and these platforms need substantial resources for both the orchestration layer and the containerized workloads themselves.

Mid-to-large scale SaaS platforms serving 500-5,000 concurrent users operate effectively on Large. This represents most Series B through Series D companies. You’re past the growth stage but not yet operating at massive enterprise scale. Your infrastructure needs are substantial and growing, but they haven’t reached the specialized requirements that demand XL or XXL configurations.

Data analytics pipelines with significant processing requirements benefit from Large’s compute and memory resources. If you’re running batch processing jobs, generating reports, or performing data transformations, Large provides enough capacity to complete these operations in reasonable timeframes.

Consolidation and Multi-Tenant Scenarios

IT service providers increasingly use Large tier for multi-application consolidation. Rather than running separate infrastructure for each customer or application, you can consolidate multiple workloads onto shared Large tier infrastructure while maintaining adequate isolation and resource allocation.

This approach requires careful capacity planning and management, but when executed properly, it substantially improves infrastructure economics. You’re utilizing existing capacity more fully while reducing the overhead of managing numerous separate environments.

Backup and disaster recovery infrastructure requiring massive storage particularly benefits from Large tier’s storage configurations. The 12.8TB option provides enough capacity to maintain backup copies of substantial production datasets while keeping costs reasonable compared to specialized storage tiers.
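Raw disk capacity is not the same as usable backup capacity. The sketch below makes several assumptions:

- It uses a replicated Ceph pool with three copies, which is Ceph’s default pool size.
- It keeps usage below Ceph’s default nearfull warning ratio of 0.85.
- It treats the per-server storage figure as raw capacity.

OpenMetal’s actual pool layout may differ; erasure-coded pools, for instance, have different overhead. The function name is hypothetical.

```python
def ceph_usable_tb(servers: int, raw_tb_per_server: float,
                   replicas: int = 3, fill_ceiling: float = 0.85) -> float:
    """Approximate fillable capacity of a replicated Ceph pool, in TB.

    Raw capacity is divided by the replica count (every object is stored
    `replicas` times), then capped at fill_ceiling, which defaults to Ceph's
    default nearfull warning ratio. Ignores other pools and metadata.
    """
    if replicas < 1 or not 0 < fill_ceiling <= 1:
        raise ValueError("invalid replica count or fill ceiling")
    raw_tb = servers * raw_tb_per_server
    return raw_tb / replicas * fill_ceiling
```

Three servers with 12.8TB each give 38.4TB raw and 12.8TB after three-way replication. Roughly 10.9TB of that can be filled before Ceph starts warning.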

Architectural Flexibility

Large tier’s versatility extends beyond raw capacity. The tier works equally well for dedicated workload deployments, mixed-use environments, and as part of multi-tier architectures where different workload types run on different hardware specifications.

You might run general production workloads on Large while using XL for memory-intensive databases or storage clusters for cold data archival. This tiered approach optimizes both cost and performance, placing each workload on hardware suited to its specific requirements rather than trying to find one-size-fits-all infrastructure.

The platform consistency across tiers makes these multi-tier architectures practical. Your operational tooling works identically whether managing Large, XL, or XXL infrastructure. Network configurations translate directly. Storage pools can span multiple tiers if needed for specific use cases.

XL Tier: Purpose-Built for Specialized Workloads

While Large tier handles most production needs, certain workloads have requirements that extend beyond general-purpose infrastructure. XL tier, available from v2 hardware onwards, targets these specialized use cases with 1TB RAM per server and storage options ranging from 12.8TB to 38.4TB on the XL 6×6.4 configuration.

XL tier runs dual Intel Xeon Gold processors, providing both high core counts and premium CPU performance. This combination of massive memory, substantial storage, and powerful processors addresses workloads where one or more resources become the primary constraint.

Memory-Intensive Production Workloads

In-memory databases and caching systems represent XL’s primary use case. Redis and Memcached clusters handling datasets of 100GB and up can quickly outgrow Large tier’s memory once replicas, fragmentation, and headroom are accounted for. These systems store entire datasets in RAM to deliver microsecond-latency responses. Running them on infrastructure with insufficient memory forces evictions or swapping to disk, destroying the performance characteristics that make in-memory systems valuable.
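Whether a dataset “fits” depends on more than its raw size. This sketch checks a single server under two assumptions:

- A hypothetical 1.5x multiplier covers allocator fragmentation and the copy-on-write memory a Redis snapshot fork can consume.
- 15% of RAM is reserved for the OS and OpenStack overhead.

Both factors are placeholders to replace with measured values from your own workload. The function name is hypothetical.

```python
def fits_on_server(dataset_gb: float, server_ram_gb: float,
                   overhead_factor: float = 1.5,
                   reserved_fraction: float = 0.15) -> bool:
    """Rough check that one in-memory dataset fits on a single server.

    overhead_factor (hypothetical 1.5x) stands in for allocator fragmentation
    and copy-on-write pages during snapshot forks; reserved_fraction keeps RAM
    aside for the OS and OpenStack services. Replace both with measured values.
    """
    needed_gb = dataset_gb * overhead_factor
    available_gb = server_ram_gb * (1 - reserved_fraction)
    return needed_gb <= available_gb
```

Under these assumptions, a 400GB dataset does not fit on a 512GB Large server but does fit on a 1TB XL server.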

Large PostgreSQL and MySQL instances with extensive caching also benefit from XL’s memory capacity. While these databases can operate on smaller infrastructure, workloads with hot datasets approaching or exceeding 512GB see dramatic performance improvements when the entire working set fits in memory. With the working set held in the buffer pool, most reads are served from RAM instead of disk, which can cut query latency substantially.

Enterprise resource planning systems running on in-memory database platforms such as SAP HANA require hardware designed specifically for their memory profiles. These systems load entire business databases into RAM to deliver real-time analytics and transaction processing. XL provides the memory headroom these demanding applications require.

Storage-Intensive Applications

The XL 6×6.4 configuration’s 38.4TB storage capacity targets a different set of specialized workloads. Media processing and transcoding pipelines need both substantial storage for source and output files and enough memory to buffer operations. Video transcoding is particularly demanding: high-resolution source files can be gigabytes each, and maintaining multiple versions in different formats multiplies storage requirements quickly.
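Storage for a transcoding pipeline grows with every rendition kept alongside the source. The sketch below estimates the total from three inputs: the number of source files, their average size, and each output rendition’s size relative to the source. The ratios in the example are made-up placeholders, replication overhead is not included, and the function name is hypothetical.

```python
def transcode_storage_tb(source_files: int, avg_source_gb: float,
                         rendition_ratios: list[float]) -> float:
    """Estimate storage for sources plus every transcoded output, in TB.

    Each ratio is a rendition's size relative to its source (0.5 means half
    the size). Uses 1 TB = 1000 GB and excludes replication overhead.
    """
    # Each title stores its source (ratio 1.0) plus every rendition.
    per_title_gb = avg_source_gb * (1 + sum(rendition_ratios))
    return source_files * per_title_gb / 1000
```

Consider 2,000 sources averaging 8GB, with renditions at 50%, 25%, and 10% of source size. The estimate is about 29.6TB before replication.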

AI and ML model training workloads increasingly require large dataset caching. While final trained models might be relatively compact, training data often measures in hundreds of gigabytes or terabytes. Keeping training datasets on fast local NVMe storage dramatically accelerates training iterations compared to pulling data from network storage or object stores.

High-throughput logging and observability platforms aggregate logs, metrics, and traces from distributed systems. These workloads generate enormous data volumes that must be ingested, indexed, and made available for querying. Fast local storage reduces ingestion bottlenecks while providing the capacity to retain recent data locally before aging it to cheaper cold storage.

Confidential Computing and Advanced Features

XL tier hardware supports Intel SGX (Software Guard Extensions) and TDX (Trust Domain Extensions) for confidential computing workloads. These technologies create encrypted execution environments where sensitive code and data remain protected even from privileged system software.

For organizations handling regulated data or implementing zero-trust architectures, these security features can be requirements rather than nice-to-haves. XL provides the hardware foundation for confidential computing while maintaining the platform consistency that makes OpenMetal practical for production operations.

Consolidated multi-tenant platforms with strict resource guarantees also gravitate toward XL. When you’re providing infrastructure-as-a-service to customers with demanding SLAs, having substantial resource pools makes capacity allocation more predictable and reduces the risk of resource contention affecting performance.

The Specialization Premium

XL tier costs reflect its specialized nature. These configurations are purpose-built for specific workload profiles. If your requirements align with XL’s strengths (massive memory, extensive storage, or confidential computing), the tier delivers value by eliminating architectural compromises.

However, if your workloads don’t specifically need what XL provides, Large tier offers better economics. Effective capacity planning means matching infrastructure characteristics to workload requirements rather than simply choosing the largest available option.
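Matching characteristics to requirements can be made concrete for memory. The sketch below picks the smallest tier whose per-server RAM covers a workload’s memory need plus 20% headroom, after an assumed 15% control plane overhead. The per-server RAM figures come from this article; XS is omitted because its specifications are not given. The overhead and headroom defaults and the function name are assumptions. A real decision would weigh CPU, storage, and network the same way.

```python
from typing import Optional

# Per-server RAM in GB, as stated in this article (XXL's 2TB treated as
# 2,048GB). XS is omitted because its specifications are not given here.
TIER_RAM_GB = {
    "Small": 128,
    "Medium": 256,
    "Large": 512,
    "XL": 1024,
    "XXL": 2048,
}


def smallest_tier_for(ram_needed_gb: float, overhead: float = 0.15,
                      headroom: float = 0.2) -> Optional[str]:
    """Return the smallest tier whose usable per-server RAM covers the need.

    overhead: assumed control plane share of each server's RAM.
    headroom: assumed growth buffer added on top of the stated need.
    Returns None if no single server is large enough.
    """
    required = ram_needed_gb * (1 + headroom)
    for tier, ram_gb in TIER_RAM_GB.items():  # dicts keep insertion order
        if ram_gb * (1 - overhead) >= required:
            return tier
    return None
```

With these defaults, a 300GB working set lands on Large and a 600GB one on XL. A 2,000GB requirement returns `None`, meaning it will not fit on a single server of any tier.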

XXL Tier: Enterprise-Scale Infrastructure

XXL tier, available exclusively on v4 hardware, represents OpenMetal’s highest-capacity offering. With 2TB RAM per server, these configurations deliver 6TB total memory across a three-server cluster and 192 total cores. Pricing starts around $10,000 monthly, reflecting XXL’s position as purpose-built enterprise infrastructure.

XXL addresses two distinct scenarios: complete datacenter consolidation for mid-sized organizations and computationally extreme workloads requiring massive parallelization.

Enterprise-Scale Consolidation

For organizations running 200-500+ virtual machines across diverse workload types, XXL provides sufficient capacity to consolidate what traditionally required multiple separate infrastructure clusters. Rather than managing separate environments for different application tiers, business units, or customer segments, you can operate a unified platform that serves all requirements.
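Dividing a cluster’s usable resources by VM count gives a quick sanity check on consolidation plans. The sketch uses XXL’s stated totals: 2TB RAM per server and 192 cores across three servers, which works out to 64 cores per server. It applies an assumed 15% overhead, treats 2TB as 2,048GB, and counts physical cores, not hyperthreads. The function name is hypothetical.

```python
def per_vm_budget(vms: int, servers: int = 3, ram_gb_per_server: float = 2048,
                  cores_per_server: int = 64,
                  overhead: float = 0.15) -> tuple[float, float]:
    """Average (RAM in GB, physical cores) available per VM after overhead.

    Defaults follow XXL: 2TB (treated as 2,048GB) RAM and 64 cores per server
    (192 cores across three servers). The 15% overhead is an assumption.
    """
    if vms < 1:
        raise ValueError("vms must be at least 1")
    usable = 1 - overhead
    ram_per_vm = servers * ram_gb_per_server * usable / vms
    cores_per_vm = servers * cores_per_server * usable / vms
    return ram_per_vm, cores_per_vm
```

At 500 VMs this works out to roughly 10.4GB of RAM and a third of a physical core per VM on average. At 200 VMs, each VM gets about 26GB and 0.8 cores. Reserving capacity for a server failure lowers both figures.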

This approach to datacenter consolidation delivers operational efficiencies beyond raw cost savings. Unified management reduces the complexity of maintaining multiple separate platforms. Shared resource pools improve overall utilization. When one application’s demand decreases, another can consume freed resources without cross-platform migrations.

Mid-sized organizations often reach a point where on-premises infrastructure becomes increasingly expensive to maintain but public cloud costs have grown to uncomfortable levels. XXL provides a middle path: hosted private cloud infrastructure with enough capacity to handle enterprise workloads while maintaining the architectural control and cost predictability that public clouds often can’t deliver.

Extreme Computational Workloads

HPC simulations requiring 192-core parallelization represent another XXL use case. Scientific computing, financial modeling, and engineering simulations often need massive parallel processing capabilities. XXL’s core count lets these workloads run within a single three-server cluster rather than spanning multiple separate systems. This reduces orchestration complexity and improves job completion times.

Large-scale AI and ML training workloads benefit from XXL’s combination of massive memory and high core counts. Training multiple models simultaneously or running parameter sweeps across many model variants requires infrastructure that can support numerous concurrent jobs without resource contention killing performance.

Real-time big data analytics using Spark, Hadoop, or similar distributed computing frameworks needs both substantial memory for data processing and enough cores to maintain parallelism across data partitions. XXL provides the resources to run these analytics workloads with acceptable latency while processing data volumes that smaller tiers struggle to handle.
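Parallelism is where XXL’s core count pays off. Spark’s tuning guide recommends 2-3 tasks per CPU core. The sketch below combines that guidance with a common 128MB-per-partition target, the default of `spark.sql.files.maxPartitionBytes`, to suggest a partition count. It is a starting heuristic, not a tuning rule. The example’s 160 executor cores out of 192, leaving room for the OS and OpenStack, is an illustrative assumption, and the function name is hypothetical.

```python
import math


def suggest_partitions(data_gb: float, executor_cores: int,
                       target_partition_mb: int = 128,
                       tasks_per_core: int = 2) -> int:
    """Heuristic partition count for a Spark job.

    Takes the larger of:
      * a core-based floor (Spark's tuning guide suggests 2-3 tasks per core),
      * a size-based count that keeps partitions near target_partition_mb.
    """
    if executor_cores < 1 or target_partition_mb <= 0:
        raise ValueError("need at least one core and a positive target size")
    by_cores = executor_cores * tasks_per_core
    by_size = math.ceil(data_gb * 1024 / target_partition_mb)  # 1 GB = 1024 MB
    return max(by_cores, by_size)
```

`suggest_partitions(10, 160)` returns 320, because the core-based floor dominates for small inputs. `suggest_partitions(1024, 160)` returns 8,192, because size dominates for a 1TB input.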

Database and High-Density Scenarios

Massive database consolidation running multiple large databases on a single cluster becomes practical with XXL’s capacity. Rather than maintaining separate infrastructure for each major database, you can consolidate while maintaining adequate isolation and resource allocation for each database instance.

Enterprise ERP systems like SAP or Oracle with high concurrency requirements often need infrastructure that can support hundreds or thousands of simultaneous users. XXL’s combination of memory, compute, and I/O capacity delivers the resources these demanding business systems require.

High-density virtualization for managed service providers and hosting companies represents an additional XXL use case. When your business model involves selling virtual infrastructure to customers, packing more VMs onto fewer physical servers improves unit economics while XXL’s capacity maintains adequate per-VM performance.

The Enterprise Decision

XXL makes sense when you’re consolidating multiple infrastructure deployments, running computationally extreme workloads, or operating at genuine enterprise scale. The tier’s cost reflects its capabilities; you’re paying for infrastructure that can replace what traditionally required multiple separate clusters.

For organizations not operating at this scale, the lower tiers deliver better value. Capacity planning shouldn’t optimize for maximum capacity but rather for matching resources to actual requirements while maintaining headroom for growth.

The Power of Platform Consistency

Every tier described above runs identical OpenStack and Ceph architectures. This consistency eliminates the replatforming friction that traditionally makes infrastructure scaling painful. You can mix and match as many different private cloud cores as needed, and even throw bare metal servers into the mix.

When you develop on Small, test on Medium, and deploy to Large, your applications experience no architectural differences. Storage pools work identically—Ceph’s distributed storage behaves the same way regardless of underlying hardware. Networking configurations translate directly and the software-defined networking layer operates consistently across all tiers. OpenStack APIs don’t change. Automation scripts and management tooling work without modification.
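Because every tier exposes the same OpenStack APIs, one automation function can target any of them. The sketch below uses proxy calls from the `openstacksdk` Python library: `find_image`, `find_flavor`, `find_network`, `create_server`, and `wait_for_server`. The function name `launch_server` is hypothetical. The image, flavor, and network names it receives must exist in whichever cloud you connect to; flavor names in particular are defined per cloud.

```python
def launch_server(conn, name: str, image: str, flavor: str, network: str):
    """Boot one server using only tier-agnostic OpenStack calls.

    `conn` is an openstacksdk Connection, e.g. from openstack.connect(cloud=...).
    Only the connection changes between a Small and a Large Cloud Core.
    """
    # Resolve names to IDs; ignore_missing=False raises if a name is unknown.
    img = conn.image.find_image(image, ignore_missing=False)
    flv = conn.compute.find_flavor(flavor, ignore_missing=False)
    net = conn.network.find_network(network, ignore_missing=False)
    server = conn.compute.create_server(
        name=name,
        image_id=img.id,
        flavor_id=flv.id,
        networks=[{"uuid": net.id}],
    )
    # Block until the server reaches ACTIVE (raises on ERROR or timeout).
    return conn.compute.wait_for_server(server)
```

Pointing the same code at a different Cloud Core only changes the connection. For example, call `launch_server(openstack.connect(cloud="staging-medium"), "web-1", "ubuntu-22.04", "m1.large", "private")`, then swap in `cloud="prod-large"` for production. Both cloud names are hypothetical entries in a `clouds.yaml` file.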

This architectural consistency matters more than raw specifications in many scenarios. The actual challenge in infrastructure scaling often isn’t getting more CPU or memory; it’s managing the complexity of maintaining multiple different platforms with different behaviors, tooling, and operational procedures.

Eliminating Replatforming Friction

Traditional infrastructure scaling forces companies through painful replatforming exercises. You start on one provider’s platform, and as you grow, you discover their next tier uses completely different architectures. What worked in development doesn’t work in production. Networking behaves differently. Storage systems have incompatible features. APIs change between tiers.

Each of these transitions requires engineering time to rewrite configurations, adapt tooling, retrain operators, and troubleshoot compatibility issues. This friction often delays scaling because teams are reluctant to trigger these painful migrations. Infrastructure becomes a barrier to growth rather than an enabler.

OpenMetal’s consistent platform removes these barriers. When you need more capacity, you redeploy workloads to larger hardware. Your automation scripts work without changes. Your monitoring and alerting configurations apply directly. Your operational procedures remain valid. Your team’s expertise translates completely.

Multi-Tier Architectures Without Complexity

Platform consistency enables architectural patterns that would be impractical with heterogeneous infrastructure. You can run general production workloads on Large tier while using XL for memory-intensive databases, and these different tiers work together seamlessly.

Network connectivity between tiers operates identically to connectivity within tiers. Storage can span multiple tiers if your architecture requires it. Management and monitoring tools work uniformly across all hardware. Your operational complexity doesn’t increase proportionally with architectural complexity because the underlying platform remains consistent.

This makes workload-specific optimization practical. Rather than forcing every workload onto identical hardware, you can match each workload to appropriately sized infrastructure while maintaining operational consistency. Cost-sensitive workloads run on smaller tiers. Resource-intensive workloads get larger hardware. Everything remains operationally manageable because the platform doesn’t change.

Business Value of Consistency

The business value of platform consistency extends beyond technical benefits. Infrastructure decisions become more straightforward when you’re not weighing architectural compatibility against capacity needs. You can make scaling decisions based primarily on resource requirements and cost rather than getting trapped in complex evaluations of platform differences.

Time-to-market improves when new workloads don’t require learning new platforms or rewriting deployment procedures. Your team’s expertise compounds over time rather than fragmenting across multiple different systems.

Risk decreases when your development, staging, and production environments operate on the same platform. Testing becomes more meaningful when your staging environment architecturally matches production rather than approximating it. Deployment failures decrease when you’re not translating configurations between different platforms.

Real-World Migration Patterns

Understanding how companies typically move between tiers helps inform your own capacity planning. While every business is unique, certain patterns recur frequently enough to provide useful guidance.

The Startup Journey

Startups typically follow a progression from XS through Small to Medium and eventually Large. This pattern reflects the natural evolution from proof-of-concept through MVP launch into growth and scale.

The journey usually begins with XS for PoC work. You’re validating that OpenStack meets your needs, testing application compatibility, and learning operational procedures. You might spend weeks or months at this stage, depending on application complexity and team OpenStack experience.

Once your PoC succeeds, you migrate to Small for MVP launch and initial production deployment. Small provides genuine high availability at startup-friendly pricing. You’re serving real users with production-grade infrastructure while keeping costs reasonable during the early revenue phase when every dollar counts.

As your user base grows and traffic increases, you move to Medium tier. This typically happens as you achieve product-market fit and begin scaling your team. Medium’s additional capacity supports both your growing application workloads and your expanding development and staging needs.

The jump to Large often coincides with Series A or B funding and serious scaling efforts. You’re no longer a tiny startup! You have a substantial user base, multiple product lines, or an expanding geographic presence. Large provides the capacity to support continued growth while maintaining architectural consistency with your earlier infrastructure.

The Enterprise Pattern

Enterprise customers often follow a different path, typically starting with Medium for development and staging, moving to Large for production, and selectively deploying XL/XXL for specialized workloads.

This pattern reflects enterprise buying behavior and requirements. Enterprises rarely start with minimal infrastructure; they typically have substantial budgets and established requirements. Starting at Medium tier provides enough capacity for realistic development and staging environments while proving out the platform before committing to large production deployments.

Once the platform is validated, production workloads migrate to Large. Most enterprise applications fit comfortably within Large’s capacity envelope. The three-server architecture provides high availability, and Large’s specifications handle typical enterprise workload profiles.

XL tier gets deployed selectively for workloads with specialized requirements. An in-memory database might run on XL while general application infrastructure runs on Large. Media processing pipelines might require XL’s storage capacity while web frontends operate on Medium. This tiered approach optimizes cost while matching each workload to appropriate infrastructure.

Cost Optimization Patterns

Companies sometimes migrate in reverse, moving from Large to Medium as they optimize infrastructure spending. This pattern emerges when earlier infrastructure decisions resulted in overprovisioning or when application optimizations reduce resource requirements.

If your production environment runs on Large but utilization metrics show you’re only consuming 40-50% of available resources, rightsizing to Medium can substantially reduce costs while maintaining adequate headroom for growth. The consistent platform means this migration carries minimal risk. You’re not changing architectures, just relocating workloads to appropriately sized hardware.
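Before rightsizing, it helps to base the decision on a high percentile of utilization rather than an average, so short peaks aren’t hidden. The sketch below is a minimal Python illustration, assuming you have already exported utilization samples (as fractions of capacity) from your monitoring system. The 95th percentile and the 50% threshold are illustrative choices rather than OpenMetal recommendations, and the function names are hypothetical.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100) of a non-empty sample list."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Nearest-rank method: smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def looks_overprovisioned(samples: Sequence[float],
                          threshold: float = 0.5,
                          pct: float = 95) -> bool:
    """Hypothetical check: True if the pct-th percentile utilization stays below threshold."""
    return percentile(samples, pct) < threshold


# Illustrative hourly utilization samples (fractions of total capacity), not real data.
cpu = [0.32, 0.41, 0.38, 0.45, 0.47, 0.36, 0.44, 0.40, 0.43, 0.39]
ram = [0.48, 0.52, 0.50, 0.49, 0.55, 0.51, 0.47, 0.53, 0.50, 0.49]

print(looks_overprovisioned(cpu))  # p95 is 0.47, so True
print(looks_overprovisioned(ram))  # p95 is 0.55, so False
```

A move to a smaller tier only makes sense when every resource that matters (CPU, RAM, storage) clears the check. In this made-up example CPU looks oversized, but memory does not.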

Some companies maintain multiple tiers simultaneously, using Large for steady-state production capacity and keeping Medium infrastructure available for peak demands. This approach treats infrastructure as a portfolio. Different tiers serve different purposes, and the consistent platform makes managing this complexity practical.

Workload Separation Patterns

Mature infrastructures often spread across multiple tiers based on workload characteristics. You might run general workloads on Large, memory-intensive databases on XL, and maintain storage clusters on Large tier configured specifically for cold data archival.

This separation pattern optimizes both cost and performance. Each workload gets infrastructure suited to its specific requirements rather than compromising by running everything on one tier. Managing multiple tiers remains operationally simple because the underlying technology stack doesn’t change.

Scaling strategies vary based on workload characteristics, but the consistent platform means you can make these decisions based primarily on resource requirements rather than getting caught in complex architectural tradeoffs.

Making the Right Choice for Your Business Stage

Selecting the appropriate tier requires understanding both your current requirements and your growth trajectory. Capacity planning should balance today’s needs with tomorrow’s demands while avoiding overprovisioning that wastes resources and budget.

Start by assessing your baseline requirements. How many CPU cores do your applications need during typical operations? How much memory do your databases and caching layers consume? What storage capacity do your workloads require? Understanding these baseline metrics provides the foundation for tier selection.

Next, evaluate scalability needs. How much do your resource requirements fluctuate between peak and quiet periods? How quickly is your user base growing? What new capabilities or products are you planning to launch? Answering these questions helps you understand whether your baseline represents your actual capacity needs or whether you need to plan for substantial growth.
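The baseline and growth questions above can be turned into a rough runway estimate. The sketch below assumes peak demand is a fixed multiple of baseline and grows at a constant compound monthly rate, which is a simplification. The function names and example figures are hypothetical.

```python
import math


def projected_peak(baseline: float, peak_ratio: float,
                   monthly_growth: float, months: int) -> float:
    """Peak demand `months` from now, assuming compound monthly growth."""
    return baseline * peak_ratio * (1.0 + monthly_growth) ** months


def months_of_headroom(baseline: float, peak_ratio: float,
                       monthly_growth: float, usable_capacity: float) -> float:
    """Months until projected peak demand reaches usable capacity."""
    current_peak = baseline * peak_ratio
    if current_peak >= usable_capacity:
        return 0.0          # already at or over capacity
    if monthly_growth <= 0:
        return math.inf     # flat or shrinking demand never exhausts capacity
    # Solve current_peak * (1 + g)^m = usable_capacity for m.
    return math.log(usable_capacity / current_peak) / math.log(1.0 + monthly_growth)


# Hypothetical workload: 20 cores at baseline, peaks at 1.5x, growing 5% per month,
# on infrastructure with 48 usable cores.
print(round(projected_peak(20, 1.5, 0.05, 12), 1))    # 53.9
print(round(months_of_headroom(20, 1.5, 0.05, 48), 1))  # 9.6
```

In this example the workload outgrows 48 usable cores in a little under ten months. Treat that as a signal to plan the next tier migration, not as a precise forecast.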

Consider your team’s operational capacity. Smaller tiers work well when you have limited staff and need simple operational procedures. More complex deployments might warrant larger tiers even if current workloads don’t fully use the available capacity. The additional headroom provides margin for operational error and reduces the frequency of capacity planning exercises.

Factor in cost constraints honestly. While OpenMetal provides excellent value across all tiers, infrastructure spending must align with business reality. If you’re an early-stage startup burning through limited runway, Small tier’s production capabilities at under $1,000 monthly might be essential for survival. If you’re a funded growth-stage company, Medium or Large tier becomes practical.

Remember that tiers aren’t permanent decisions. The consistent platform means you can migrate between tiers as requirements change. Start with the tier that meets your current needs while providing modest headroom for growth, knowing you can upgrade when circumstances warrant it.

For companies unsure about their requirements, OpenMetal offers a cloud deployment calculator that helps match workload characteristics to appropriate tiers. This tool considers factors including expected user counts, application types, and performance requirements to suggest suitable starting configurations. We’re also happy to speak with you and help match your requirements to the best hardware! Feel free to contact us anytime. If you’re just getting ramped up, our Startup eXcelerator Program may also be of interest.

The fundamental principle remains simple: choose the smallest tier that comfortably meets your requirements while providing headroom for growth. You can always migrate to larger infrastructure as needs evolve, and the platform consistency means these migrations won’t derail your engineering roadmap or require painful replatforming efforts.
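That principle translates directly into a selection rule: walk the tiers from smallest to largest and stop at the first one whose capacity, after reserving headroom, covers your projected needs. The Python sketch below assumes only cores and RAM matter and uses placeholder capacity figures. Those figures are not OpenMetal’s published specifications, so substitute current numbers (or the cloud deployment calculator’s output) before relying on the result. The function name and the 25% headroom default are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tier:
    name: str
    cores: int    # total cluster cores (placeholder)
    ram_gb: int   # total cluster RAM in GB (placeholder)


# PLACEHOLDER capacities for illustration only. These are NOT OpenMetal's
# published specifications; replace them with figures from the current spec sheet.
TIERS = [
    Tier("XS", 24, 192),
    Tier("Small", 48, 384),
    Tier("Medium", 96, 768),
    Tier("Large", 192, 1536),
    Tier("XL", 384, 3072),
    Tier("XXL", 768, 6144),
]


def smallest_fitting_tier(cores_needed: float, ram_needed_gb: float,
                          tiers: Sequence[Tier] = TIERS,
                          headroom: float = 0.25) -> Optional[Tier]:
    """Return the smallest tier whose capacity, minus `headroom`, covers the need."""
    usable = 1.0 - headroom
    for tier in sorted(tiers, key=lambda t: (t.cores, t.ram_gb)):
        if cores_needed <= tier.cores * usable and ram_needed_gb <= tier.ram_gb * usable:
            return tier
    return None  # nothing fits; consider splitting workloads across tiers


print(smallest_fitting_tier(50, 300).name)  # Medium
print(smallest_fitting_tier(20, 200).name)  # Small
```

Rerunning the same rule against measured peaks is also a quick way to spot the reverse-migration opportunities described in the cost optimization patterns.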
