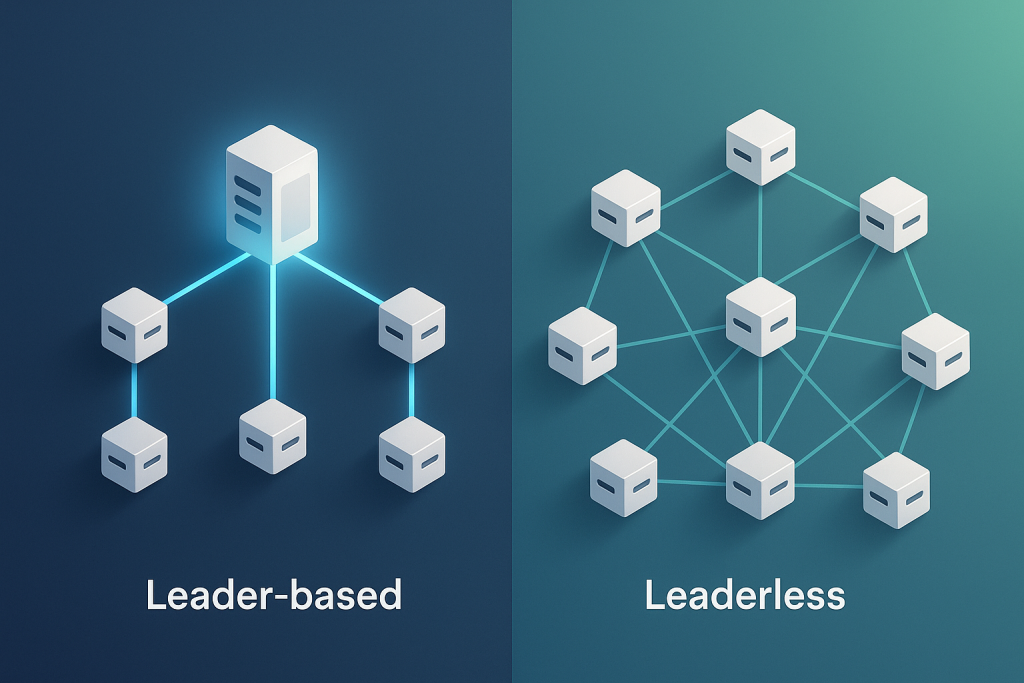

Choosing between leader-based and leaderless replication can shape your system’s performance, consistency, and availability. Here’s the basic rundown of each:

- Leader-Based Replication: A single leader coordinates all writes, ensuring strong consistency. It’s ideal for applications like financial systems that require strict data accuracy. However, it may face downtime if the leader fails.

- Leaderless Replication: Any node can handle writes, prioritizing availability and fault tolerance. It’s perfect for high-availability systems like social media platforms but often sacrifices immediate consistency.

Quick Comparison

| Aspect | Leader-Based Replication | Leaderless Replication |

|---|---|---|

| Write Coordination | Centralized through a leader | Decentralized using quorums |

| Consistency Model | Strong (synchronous) or eventual (async) | Typically eventual |

| Failure Impact | Leader failure affects writes | Operates as long as quorum is met |

| Performance | High read throughput, limited write scaling | Balanced scaling for reads and writes |

| Examples | PostgreSQL, MySQL, MongoDB | DynamoDB, Cassandra, Riak |

Bottom Line: In most cases, go with leader-based for strict consistency and leaderless for high availability. Your choice depends on your system’s needs for performance, fault tolerance, and data accuracy.

Architecture Differences

The way leader-based and leaderless replication manage data writes across distributed systems sets them apart. Each approach has its own structure for handling replicated data, which directly affects consistency and fault tolerance. These differences form the foundation for the performance and reliability characteristics we’ll explore later.

Leader-Based Replication Architecture

Leader-based replication operates with a clear hierarchy, where one node is designated as the leader to oversee all write operations. This setup, often called active/passive or master–slave replication, establishes a structured chain of command within the system.

In this model, all write operations are directed to the leader. The leader processes these writes, updates its local storage, and then sends the changes to the follower nodes through a stream of data updates. This centralized approach ensures that updates are orderly and consistent across all replicas.

Follower nodes, on the other hand, are mainly responsible for handling read requests. By offloading reads to followers, the leader can focus solely on write coordination and synchronization.

Well-known database systems like PostgreSQL, MySQL, and SQL Server‘s AlwaysOn Availability Groups rely on this leader-based architecture.

Leaderless Replication Architecture

Leaderless replication takes a completely different approach by eliminating the need for a centralized coordinator. Instead, it distributes write responsibilities across multiple nodes, allowing any node to handle both read and write operations directly.

In this decentralized setup, clients write to multiple replicas at the same time, often using a quorum-based system to ensure that a sufficient number of nodes acknowledge the write. This design relies on consensus mechanisms and conflict resolution strategies to maintain data integrity.

Examples of leaderless systems include Amazon’s DynamoDB, Apache Cassandra, and Riak. These systems emphasize high availability and fault tolerance by avoiding single points of failure or bottlenecks.

However, leaderless systems require more sophisticated client-side logic to manage issues like conflicting writes or unavailable nodes. Techniques such as version vectors are commonly used to track concurrent changes and resolve inconsistencies, supporting an eventual consistency model.

Architecture Comparison Table

| Aspect | Leader-Based Replication | Leaderless Replication |

|---|---|---|

| Write Coordination | Centralized through a single leader | Decentralized using quorums |

| Node Roles | Leader handles writes; followers serve reads | All nodes handle both reads and writes |

| Client Interaction | Clients write to the leader only | Clients write to multiple replicas |

| Failure Impact | Leader failure affects write availability | Individual node failures have minimal impact |

| Consistency Model | Strong consistency is achievable | Typically eventual consistency |

| Complexity | Simpler initial setup | More complex due to conflict resolution |

| Single Point of Failure | Yes (leader node) | No |

| Examples | PostgreSQL, MySQL, SQL Server | DynamoDB, Cassandra, Riak |

These architectural differences highlight the trade-offs between consistency, availability, and performance. Leader-based systems are ideal for scenarios requiring strict consistency and straightforward management, while leaderless architectures shine in environments where high availability and fault tolerance are top priorities.

Consistency and Availability

Balancing data consistency and system availability is a core challenge in replication systems, especially during network partitions. When these partitions occur, architects must decide whether to prioritize strict data consistency or maintain high availability.

Consistency in Leader-Based Replication

Leader-based systems maintain consistency by channeling all write operations through a central coordinator, or leader. In synchronous leader-based replication, the leader waits for acknowledgment from all or a majority of followers before confirming a write. This ensures that all nodes reflect the same data, providing read-after-write consistency – clients can immediately see their own updates once committed. However, this approach prioritizes consistency over availability during network issues. For instance, systems like MongoDB (in strict consistency mode) block reads or writes on out-of-sync nodes to maintain data accuracy, even if it means reduced availability during a partition.

On the other hand, asynchronous leader-based replication allows the leader to confirm writes before followers acknowledge them. This improves availability but introduces a risk of temporary inconsistencies if the leader fails before updates propagate fully.

Consistency in Leaderless Replication

Leaderless systems take a different approach, allowing any node to handle writes and relying on quorum-based mechanisms to resolve conflicts. These systems emphasize eventual consistency – data across nodes aligns over time, even if temporary discrepancies arise. In this model, any node can accept writes, boosting availability. A quorum ensures that the sum of write (w) and read (r) acknowledgments exceeds the total number of replicas, striking a balance between consistency and availability.

Systems like Amazon DynamoDB embrace eventual consistency to maintain high availability. While this can result in stale data temporarily, conflicts are later resolved using techniques like versioning. Similarly, systems such as Apache Cassandra and Riak use repair and reconciliation methods to harmonize data over time, as seen in updates from March 2023. Adjusting quorum values is a critical design choice: lower w and r values reduce latency and enhance availability but may delay consistency, while higher values strengthen consistency at the expense of availability during node failures.

Consistency vs Availability Comparison

| Aspect | Leader-Based Replication | Leaderless Replication |

|---|---|---|

| Consistency Model | Strong consistency (synchronous) or eventual (asynchronous) | Primarily eventual consistency |

| CAP Theorem Position | CP (Consistency + Partition Tolerance) in sync mode | AP (Availability + Partition Tolerance) |

| Write Acknowledgment | Leader confirms after follower acknowledgment | Multiple nodes acknowledge via quorum |

| Read Guarantees | Read-after-write consistency in sync mode | May return stale data temporarily |

| Network Partition Behavior | May become unavailable to ensure strict consistency | Stays available, resolves conflicts later |

| Conflict Resolution | Avoided through ordered writes via leader | Resolved later using versioning or timestamps |

| Typical Use Cases | Financial systems, ACID transactions | Social media, content delivery, IoT data |

| Examples | MongoDB (strict mode) | DynamoDB, Cassandra |

The choice between these replication models comes down to the application’s priorities. Systems handling critical financial data often lean toward leader-based replication for its strict consistency. Meanwhile, applications like social media feeds or IoT platforms favor leaderless replication for its ability to stay operational even during network disruptions. Each approach has its strengths, and the decision depends on balancing the need for consistency with the demand for availability.

Failure Handling and Recovery

System reliability hinges on how failures are managed, especially when balancing consistency and availability. When nodes crash or network connections falter, the replication model you choose determines recovery efficiency and data accessibility. Let’s explore how different replication models handle these challenges.

Failure Handling in Leader-Based Systems

In leader-based systems, follower failures are relatively easy to handle. A failed follower reconnects to the leader and retrieves missed updates from the leader’s transaction log. This process, called catch-up recovery, allows the follower to get back on track without disrupting the system’s overall availability.

However, leader failures are more complicated. When the leader goes down, write operations come to a halt until a new leader is elected and clients adjust accordingly. If there are unreplicated writes, they might be lost. Automatic failover mechanisms can also introduce risks, such as split-brain scenarios during network partitions, which may lead to data corruption. Timeout settings play a big role here: short timeouts may cause unnecessary failovers during brief network interruptions, while long timeouts can delay recovery from genuine failures.

Failure Handling in Leaderless Systems

Leaderless systems are designed to avoid a single point of failure. Instead of depending on a central leader, these systems use quorum-based operations, where a write is successful as long as a minimum number of nodes acknowledge it.

When nodes fail, leaderless systems employ two key mechanisms to maintain data consistency without requiring manual intervention. First, read repair identifies and fixes inconsistencies during regular read operations by comparing replicas and updating outdated copies with the most recent version. Second, anti-entropy processes periodically synchronize replicas to ensure consistency. For temporary node failures, these systems use techniques like sloppy quorums and hinted handoff. When a specific node is unreachable, writes are temporarily stored on available nodes and later forwarded to the original node once it recovers.

“In a distributed system, failures aren’t a possibility – they’re a certainty.” – Ashish Pratap Singh, AlgoMaster.io

This decentralized approach makes leaderless systems particularly appealing for applications that prioritize availability over strict data consistency.

Failure Handling Comparison

| Failure Aspect | Leader-Based Replication | Leaderless Replication |

|---|---|---|

| Single Node Failure | Follower: Quick catch-up recovery | Operates as long as quorum is met |

| Leader/Coordinator Failure | Requires failover, causing potential downtime | No leader; avoids failover altogether |

| Multiple Node Failures | System may become unavailable if leader fails | Operates as long as quorum is maintained |

| Network Partitions | Risk of split-brain scenarios | Tolerates partitions with quorum |

| Recovery Mechanism | Manual or automatic failover | Read repair and anti-entropy |

| Data Loss Risk | Possible during failover | Minimal with proper quorum settings |

| Downtime During Recovery | Yes, during leader election | None; system continues operating |

| Complexity of Failure Handling | High: requires fine-tuned timeout and election logic | Lower: distributed self-healing |

Performance and Scalability

After understanding the roles of consistency and failure handling, it’s time to dive into how replication impacts performance and scalability. The way a system replicates data – whether through a leader-based or leaderless model – has a direct influence on how it handles workloads. Each approach is tailored to different traffic patterns, and knowing their strengths and weaknesses can help you design a system that fits your requirements.

Write Performance in Both Models

When it comes to writing data, leader-based and leaderless systems take very different paths. In leader-based replication, all write operations funnel through a single leader node. While this setup simplifies coordination, it creates a bottleneck – write throughput is tied to the leader’s capacity, no matter how many follower nodes you add. Synchronous replication in this model also introduces latency since the leader must ensure changes are propagated to followers before confirming the write. Asynchronous replication can speed things up, but it comes with the risk of data inconsistency if the leader fails before updates reach the followers.

Leaderless systems, on the other hand, let any node handle write operations. This distributed approach means multiple nodes can process writes simultaneously, which can significantly boost throughput compared to a single-leader system. Direct writes to multiple nodes reduce coordination delays, but they can lead to temporary inconsistencies that need reconciliation later. Alternatively, systems that require nodes to coordinate before finalizing writes can ensure stronger consistency, though at the cost of increased latency.

Read Performance Optimization

In leader-based systems, read requests are distributed among follower nodes. This setup allows the system to scale read performance effectively by adding more followers, while the leader remains focused on handling writes. For read-heavy applications, this architecture is particularly efficient.

Conversely, leaderless systems distribute both read and write operations across all nodes. While this ensures a balanced load, it often results in lower read throughput compared to leader-based systems with dedicated followers. However, leaderless replication offers more predictable performance since it avoids single points of failure, like a leader node becoming unavailable.

Leader-based designs shine in scenarios where high read performance is critical, while leaderless systems are better suited for use cases requiring balanced scaling of both reads and writes. These differences in performance directly inform scalability strategies, as summarized in the table below.

Performance Characteristics Comparison

| Performance Aspect | Leader-Based Replication | Leaderless Replication |

|---|---|---|

| Write Throughput | Limited by single leader | Distributed across nodes |

| Read Throughput | High with multiple followers | Lower, nodes handle both reads and writes |

| Write Latency | Higher with synchronous replication; lower with asynchronous | Lower with direct writes, higher with coordination |

| Read Latency | Low from local followers | Consistent across all nodes |

| Horizontal Scaling | Limited for writes, excellent for reads | Evenly scales reads and writes |

| Consistency Impact | Strong (synchronous) or eventual (asynchronous) | Tunable with quorum settings |

| Network Overhead | Lower inter-node communication | Higher due to quorum operations |

| Client Complexity | Simple single write endpoint | More complex, with multiple endpoints |

Both replication models have their place. Leader-based replication is ideal for systems that prioritize strong consistency and high read performance. Meanwhile, leaderless replication stands out in environments where availability and scalability are top priorities. The best choice depends on your system’s performance goals, consistency requirements, and the complexity you’re willing to manage.

Private Cloud Use Cases

Private clouds excel when paired with tailored replication strategies: leader-based replication for strict data consistency and leaderless replication for high availability. These approaches can fully tap into the advantages of private cloud environments, such as enhanced performance and reliable failure management. Let’s look further into how each replication model aligns with private cloud requirements.

Leader-Based Replication in Private Clouds

Leader-based replication is a great fit for private clouds that require precise data consistency and real-time updates. It’s particularly useful in areas like financial systems, content management platforms, and database clusters where ensuring orderly updates and immediate consistency is critical.

Take database management, for example. PostgreSQL clusters running in private clouds often rely on leader-based replication to maintain ACID (Atomicity, Consistency, Isolation, Durability) properties. This setup distributes read operations across multiple follower nodes, delivering the high read throughput analytics teams need while ensuring compliance through strict consistency. Although leader failures may cause temporary downtime, private clouds can minimize disruption with robust failover mechanisms, automated monitoring, and dedicated network connections.

Leaderless Replication in Private Clouds

Leaderless replication shines in private clouds that prioritize high availability and horizontal scalability. It’s an excellent choice for use cases like object storage, distributed databases, and log aggregation, as it allows for concurrent updates and ensures fault tolerance.

Log aggregation systems, for instance, are a natural match for leaderless replication. When collecting metrics or security logs from hundreds of servers, the system must remain operational even if some nodes fail. The built-in fault tolerance ensures critical monitoring data keeps flowing, even during hardware failures. However, this approach comes with challenges, such as managing conflict resolution and maintaining data integrity across nodes, which often requires dedicated IT expertise.

OpenMetal’s Role in Private Cloud Deployments

OpenMetal’s infrastructure, built on OpenStack and Ceph, supports both leader-based and leaderless replication strategies. Ceph’s native leaderless replication ensures continuous operation even when nodes fail, while dedicated servers and software-defined networking support leader-based management. This flexibility allows organizations to deploy mixed setups – for example, using PostgreSQL for financial data and Cassandra for session management.

One standout advantage of OpenMetal’s private cloud is its fully dedicated infrastructure, which eliminates “noisy neighbor” issues that can disrupt replication timing in shared environments. Reliable network latency is crucial, whether it’s for synchronous replication in leader-based systems or quorum operations in leaderless setups.

To help organizations make informed decisions, OpenMetal offers proof-of-concept trials. These trials let teams test both replication models with real workloads, helping them identify the best approach to meet their specific needs for consistency, availability, and performance.

Wrapping Up: Leader-Based vs Leaderless Replication

Let’s bring together the key contrasts between leader-based and leaderless replication models, for a clearer understanding of their roles in distributed systems.

Key Differences at a Glance

Leader-based replication relies on a central leader to manage all write operations, ensuring strong consistency. However, this approach introduces the risk of a single point of failure. On the other hand, leaderless replication spreads write operations across multiple nodes, using quorum consensus to maintain availability, though it sacrifices immediate consistency. Systems like PostgreSQL, MySQL, and MongoDB often adopt leader-based replication for scenarios where strict data integrity is important, such as financial transactions requiring sequential updates.

The architecture of these models shapes their performance. Leader-based systems excel in read-heavy environments, offering strong consistency but may encounter bottlenecks during write operations due to the reliance on a single leader. Leaderless systems distribute the workload more effectively, avoiding central bottlenecks, but must handle conflict resolution when updates occur simultaneously.

Picking the Right Approach

The choice between these replication models depends on the balance between consistency, availability, and performance. Applications like financial systems, compliance-driven platforms, or content management tools benefit from the strong consistency of leader-based replication, even if occasional downtime occurs during leader failovers.

In contrast, leaderless replication is often the go-to for high-traffic web applications, real-time analytics, and content delivery networks. These systems prioritize availability and can tolerate the eventual consistency trade-off, provided conflicts are resolved effectively.

When deciding, consider your application’s needs: opt for leader-based replication when strict consistency is non-negotiable, and choose leaderless replication for environments demanding high availability and scalability. The expertise and resources of your team also play a critical role in this decision.

OpenMetal’s Private Cloud Advantage

OpenMetal’s private cloud infrastructure offers a practical way to harness the strengths of both replication strategies. Built on OpenStack and Ceph, it provides a flexible platform that supports diverse deployment needs. Ceph’s leaderless design ensures uninterrupted storage operations, even during hardware failures, while the platform’s dedicated networking and compute resources enable reliable leader-based database operations.

With fully dedicated infrastructure, OpenMetal eliminates interference, ensuring consistent replication performance. Plus, our proof-of-concept trials let organizations test replication models with real workloads, helping teams find the perfect balance between consistency, availability, and performance before committing to a long-term solution.

FAQs

What should you consider when deciding between leader-based and leaderless replication in distributed systems?

When choosing between leader-based and leaderless replication for a distributed system, weigh several factors:

- Consistency: Leader-based replication offers strong consistency, ensuring all nodes reflect the latest data. On the other hand, leaderless replication typically provides eventual consistency, which might require extra mechanisms to achieve stricter guarantees.

- Fault Tolerance: Leaderless systems excel in fault tolerance since they don’t rely on a single leader. This means operations can continue even if some nodes go offline. In contrast, leader-based systems depend on the leader, making it a potential single point of failure.

- Performance: Leader-based systems may experience higher latency due to the need for leader coordination. Meanwhile, leaderless systems often deliver lower latency and improved write throughput by distributing writes across multiple nodes.

- Operational Complexity: Managing leaderless replication can be more challenging because it involves handling conflict resolution and maintaining data consistency across nodes.

The choice between these approaches should depend on your application’s specific priorities, such as whether it values real-time data access, high fault tolerance, or ease of management.

What are the key differences in how leader-based and leaderless replication handle data consistency and availability during network partitions?

When it comes to managing data consistency and availability, leader-based and leaderless replication models take distinct approaches, especially during network partitions.

In a leader-based replication model, a single leader node handles all updates. This ensures that changes are applied in a specific, consistent order across the system. While this method guarantees strong consistency, it comes with a trade-off: reduced availability. If the leader node becomes unreachable during a network partition, the system may pause updates entirely until the leader is back online. This can lead to potential downtime and delays.

On the other hand, leaderless replication distributes the responsibility for updates across multiple nodes. This setup boosts availability, even in the face of network partitions, as updates can be accepted by any participating node. To maintain consistency, leaderless systems rely on quorum-based mechanisms, where a majority of nodes must agree on updates. However, this approach can sometimes result in temporary inconsistencies across nodes until all updates are reconciled and consensus is achieved.

Both models offer unique advantages and challenges, making them suitable for different use cases depending on the priority given to consistency or availability.

When is leaderless replication more beneficial than leader-based replication in private cloud environments?

Leaderless replication shines in private cloud setups that demand high availability and fault tolerance. With the ability for any node to manage both read and write operations, this method removes single points of failure. Even if some nodes go offline, the system keeps running smoothly, ensuring reliability.

This approach is also a great fit for geographically distributed systems, where network latency and delays often pose challenges. Leaderless replication handles these delays more efficiently, keeping the system accessible across various locations. On top of that, it supports scalability, making it easy to add new nodes without interrupting ongoing processes – a perfect solution for workloads that are constantly evolving and expanding.

Interested in OpenMetal Cloud?

Schedule a Consultation

Get a deeper assessment and discuss your unique requirements.

Read More on the OpenMetal Blog