In this article

- The Root Access Advantage

- Why Most Providers Don’t Offer Deep Storage Control

- Understanding Ceph’s Architecture for Performance Tuning

- Performance Improvements Through Custom Configuration

- Integration Benefits and High-Performance Networking

- Disaster Recovery and Multi-Site Capabilities

- Working with OpenMetal’s Ceph Expertise

- When Root Access Makes the Biggest Difference

- Moving Beyond One-Size-Fits-All Storage

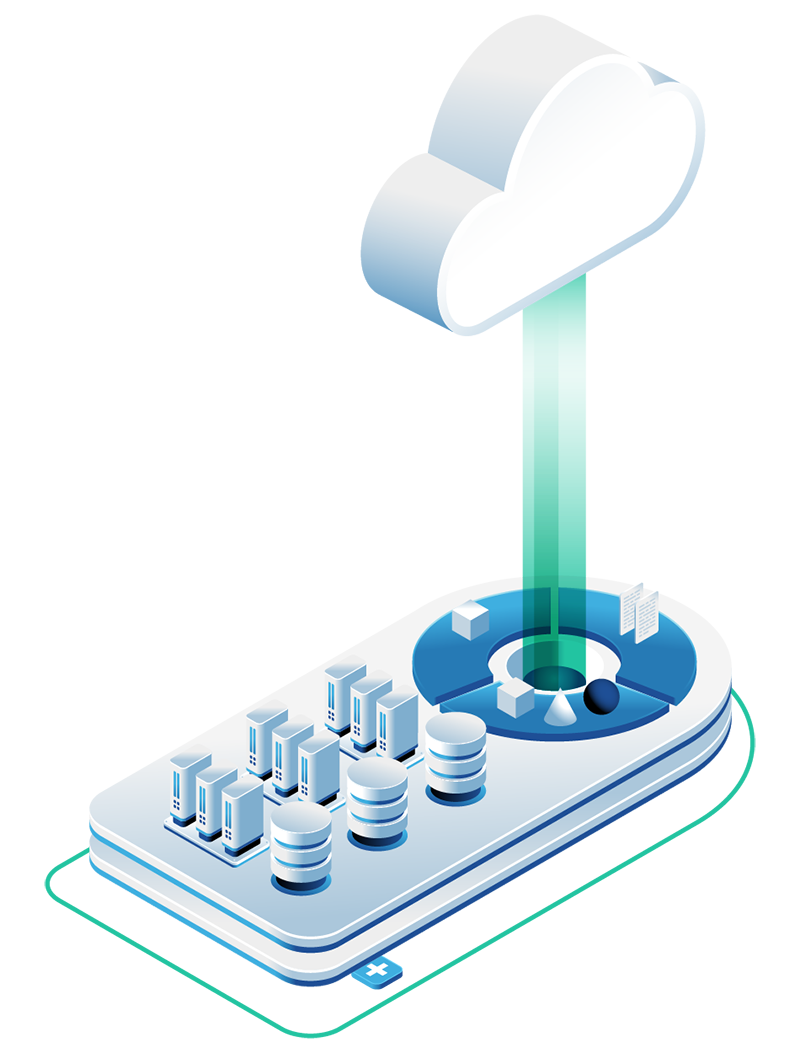

In most cloud environments, your storage operates as a black box. You can provision volumes and configure basic settings, but you can’t dig into the underlying mechanics that determine how your data is stored, replicated, or accessed. This limitation becomes a major bottleneck when you need to support varying storage workloads, from high-transaction databases requiring ultra-low latency to large-scale archival object stores that prioritize capacity over speed.

The reality is that these workloads require fundamentally different storage configurations and performance characteristics. Yet with most public cloud providers, you’re stuck using a one-size-fits-all storage solution that you can’t modify to match your specific requirements. This approach leaves performance benefits on the table and often forces you to accept suboptimal configurations.

The Root Access Advantage

With OpenMetal, you get something most providers won’t offer: a Ceph enterprise storage cluster with full root access. This means your engineers can architect storage solutions for their exact specifications rather than working within the constraints of a generic, pre-configured system.

Root access to your Ceph cluster opens up possibilities that simply aren’t available in traditional cloud environments. You can modify replication methods, tune caching mechanisms, adjust placement group configurations, and implement custom CRUSH maps that align with your specific infrastructure topology and performance requirements.

Why Most Providers Don’t Offer Deep Storage Control

The majority of cloud providers operate on a shared infrastructure model where deep customization would create operational complexity and potential security risks. They need to maintain standardized configurations across thousands of customers, which naturally leads to the lowest-common-denominator approach to storage performance.

Additionally, providing root access requires a different level of support and expertise. It’s easier for providers to offer a simplified storage interface than to support customers who need to tune underlying storage parameters. This is where OpenMetal’s approach differs. Our team has been both using and deploying Ceph in customer environments for many years, so you can lean on our expertise to tune Ceph configurations and gain even more performance benefits.

Understanding Ceph’s Architecture for Performance Tuning

Ceph is an open source, software-defined storage platform that provides a unified storage layer capable of handling object, block, and file storage from a single cluster. The architecture is fully distributed and designed for high reliability and scalability with no single point of failure.

This distributed nature is what makes Ceph so tunable. Unlike traditional storage arrays with fixed performance characteristics, Ceph allows you to adjust how data is distributed across your cluster, how it’s replicated, and how different storage pools are configured for different workload types.

OpenMetal’s Ceph deployments are provisioned with a recent stable release of Ceph (Quincy), though users can work with the OpenMetal team to select different versions based on their specific requirements. This version flexibility can itself yield performance gains, since Ceph releases differ in the optimizations they include for particular workload types.

Performance Improvements Through Custom Configuration

Replication Level Optimization

With root access, you can configure replication levels based on your specific data protection and performance requirements. While traditional cloud storage typically uses fixed replication settings, you can tune these based on workload criticality and performance needs.

For maximum usable disk space, many deployments benefit from Replica 2 configurations. OpenMetal supplies data center grade SATA SSD and NVMe drives with a mean time between failures of 2 million hours, roughly 6 times that of traditional HDDs. This reliability margin makes a lower replica count a reasonable choice when you want to prioritize performance and capacity utilization. For more detailed information about Ceph replication, see our guide on Ceph replication and consistency models.

The usable disk space improvements are significant. For example, with OpenMetal’s HC Standard configuration, Replica 2 provides 4.8TB usable compared to 3.2TB usable with Replica 3 – a 50% increase in usable capacity.
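The arithmetic behind those figures can be sketched in a few lines of Python. The 9.6 TB raw capacity is an assumption inferred from the numbers above (4.8 TB × 2 and 3.2 TB × 3 both give 9.6 TB), and the calculation ignores real-world overhead such as free-space headroom and metadata.

```python
def usable_capacity_tb(raw_tb: float, replicas: int) -> float:
    """Usable capacity of a replicated pool: raw capacity divided by the copy count."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return raw_tb / replicas


# Assumption: raw capacity inferred from the article's usable figures.
RAW_TB = 9.6

replica2 = usable_capacity_tb(RAW_TB, 2)
replica3 = usable_capacity_tb(RAW_TB, 3)
gain_pct = (replica2 / replica3 - 1) * 100

print(f"Replica 2: {replica2:.1f} TB usable")
print(f"Replica 3: {replica3:.1f} TB usable")
print(f"Capacity gain from Replica 2: {gain_pct:.0f}%")
```

The same function works for any raw capacity, so you can plug in the totals for your own cluster before deciding on a replica count.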

NVMe Integration and Caching Strategies

OpenMetal’s Ceph deployments include NVMe drives that can function as fast storage tiers or caching layers for spinning disks. NVMe drives represent top-tier performance capabilities that many other providers don’t offer in their storage clusters.

With root access, you can configure sophisticated caching hierarchies that automatically promote frequently accessed data to NVMe storage while keeping less active data on higher-capacity SATA drives. This tiering can dramatically improve performance for mixed workloads without requiring you to provision expensive all-NVMe configurations for your entire dataset. Learn more about the performance benefits in our guide to all-NVMe Ceph cluster performance.
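A simpler relative of automatic tiering is to pin a latency-sensitive pool to NVMe devices with a CRUSH rule restricted to a device class. This is a different technique from a cache tier: data does not move between tiers on its own. The sketch below only builds the `ceph` command lines and does not run them. The pool name `db-volumes` and rule name `fast-nvme` are hypothetical, and it assumes your NVMe OSDs carry the `nvme` device class and that your CRUSH root is named `default`.

```python
from typing import List


def nvme_rule_commands(
    pool: str,
    rule: str = "fast-nvme",        # hypothetical rule name
    root: str = "default",          # assumed CRUSH root
    failure_domain: str = "host",   # spread copies across hosts
) -> List[List[str]]:
    """Build the ceph CLI calls that place a pool on NVMe-class OSDs only."""
    return [
        # Create a replicated rule limited to OSDs with device class "nvme".
        ["ceph", "osd", "crush", "rule", "create-replicated",
         rule, root, failure_domain, "nvme"],
        # Point the pool at the new rule; Ceph then migrates its data.
        ["ceph", "osd", "pool", "set", pool, "crush_rule", rule],
    ]


for argv in nvme_rule_commands("db-volumes"):
    print(" ".join(argv))
```

Changing a pool’s CRUSH rule triggers data movement, so it is worth reviewing the generated commands and scheduling the change outside peak hours.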

Erasure Coding and Compression

Root access enables you to implement erasure coding schemes that provide better storage efficiency than simple replication while maintaining data durability. You can also configure on-the-fly compression on a per-pool basis, which reduces storage requirements for compressible data types in exchange for some CPU overhead.

These features require deep integration with the storage system – capabilities that are simply not available when you’re working through abstracted cloud storage APIs.
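To make the efficiency difference concrete, here is a rough comparison of three-way replication with a 4+2 erasure-coded layout, both of which tolerate the loss of two devices. The 4+2 profile and the profile name `ec-4-2` are illustrative choices, not a recommendation from the original text, and the command is only built, not executed.

```python
from typing import List


def replication_efficiency(replicas: int) -> float:
    """Fraction of raw capacity that is usable with N full copies."""
    return 1 / replicas


def erasure_efficiency(k: int, m: int) -> float:
    """Fraction of raw capacity that is usable with k data and m coding chunks."""
    return k / (k + m)


def ec_profile_command(name: str, k: int, m: int,
                       failure_domain: str = "host") -> List[str]:
    """Build the ceph CLI call that defines an erasure-code profile."""
    return ["ceph", "osd", "erasure-code-profile", "set", name,
            f"k={k}", f"m={m}", f"crush-failure-domain={failure_domain}"]


rep3 = replication_efficiency(3)
ec42 = erasure_efficiency(4, 2)

print(f"Replica 3: {rep3:.1%} of raw capacity is usable")
print(f"EC 4+2:    {ec42:.1%} of raw capacity is usable")
print(" ".join(ec_profile_command("ec-4-2", 4, 2)))
```

Keep in mind that a k+m profile with a host failure domain needs at least k+m hosts, and that erasure-coded pools generally trade some write latency for the extra capacity.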

Integration Benefits and High-Performance Networking

OpenMetal’s Ceph storage clusters can be purchased as standalone products or integrated with OpenMetal’s hosted private clouds or bare metal servers. This integration allows for unified management and high-performance private networking between storage clusters and other infrastructure components. Whether you rely more on block storage or object storage, the integration provides significant performance advantages.

The performance benefits extend beyond just storage tuning. OpenMetal’s infrastructure provides advanced, high-speed networking capabilities that can significantly improve storage performance, particularly for distributed applications that need to access data across multiple nodes simultaneously.

S3 Compatibility Without Limitations

Ceph’s S3-compatible API allows for integration with tools and applications that use Amazon S3, but unlike managed S3 services, you maintain control over the underlying storage characteristics. You can tune the object gateway performance, adjust how data is distributed across storage devices, and implement custom storage policies that align with your application requirements. For a detailed comparison, read our analysis of Ceph vs S3 storage.

This S3 compatibility means you get the benefits of a standard API while maintaining the performance advantages of a fully tunable storage system.

Disaster Recovery and Multi-Site Capabilities

Root access also enables sophisticated disaster recovery configurations. Ceph clusters support replication to and from other Ceph clusters, and with full administrative control, you can configure these relationships to meet specific recovery time objectives and recovery point objectives.

You can implement cross-site replication strategies, configure automated failover procedures, and tune replication bandwidth to balance disaster recovery requirements with production performance impact.
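When you cap replication bandwidth, a useful sanity check is how long the secondary site would take to catch up after falling behind. The estimate below is simple arithmetic under stated assumptions: a steady change rate, a fixed bandwidth cap, and decimal units (1 Gbit/s = 450 GB/hour). All input values are made up for illustration.

```python
GB_PER_HOUR_PER_GBIT = 450.0  # 1 Gbit/s = 0.125 GB/s = 450 GB/hour


def catch_up_hours(backlog_gb: float, link_gbit: float,
                   change_gb_per_hour: float) -> float:
    """Hours needed to drain a replication backlog while new changes keep arriving.

    Returns infinity when the link cannot even keep pace with the change rate.
    """
    link_gb_per_hour = link_gbit * GB_PER_HOUR_PER_GBIT
    headroom = link_gb_per_hour - change_gb_per_hour
    if headroom <= 0:
        return float("inf")
    return backlog_gb / headroom


# Hypothetical inputs: 900 GB behind, 1 Gbit/s cap, 150 GB of new writes per hour.
hours = catch_up_hours(backlog_gb=900, link_gbit=1.0, change_gb_per_hour=150)
print(f"Estimated catch-up time: {hours:.1f} hours")
```

If the result exceeds your recovery point objective, the cap is too tight for that workload, and if it returns infinity the secondary will never converge at that setting.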

Working with OpenMetal’s Ceph Expertise

While root access provides the capability to tune your storage system, you don’t have to navigate these configurations alone. The OpenMetal team brings years of experience deploying and optimizing Ceph in production environments.

This expertise becomes particularly valuable when you’re implementing complex configurations like custom CRUSH maps, advanced erasure coding schemes, or multi-tier storage hierarchies. Having access to engineers who understand both the theoretical aspects of distributed storage and the practical implications of specific configuration choices can accelerate your performance optimization efforts.

When Root Access Makes the Biggest Difference

Root access to your Ceph cluster provides the most significant benefits in several scenarios:

High-performance databases that require consistent, low-latency storage with specific IOPS characteristics can benefit from custom placement group configurations and dedicated storage pools with optimized settings. For specific tuning guidance, see our tutorial on how to tune Ceph for block storage performance.

Mixed workload environments where you need to support both high-performance transactional systems and high-throughput analytical workloads can use root access to create specialized storage pools with different performance characteristics. Understanding CephFS metadata management becomes particularly important for file storage workloads in these environments.

Compliance-heavy environments that require specific data placement, encryption, or access controls can implement custom configurations that meet regulatory requirements while maintaining performance.

Large-scale object storage implementations that need to optimize for specific access patterns, data lifecycle policies, or integration requirements can tune gateway configurations and implement custom storage classes.
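For the dedicated-pool scenarios above, placement group counts are one of the first settings to revisit. A long-standing rule of thumb is to target roughly 100 placement groups per OSD, divide by the pool’s replica count, and round up to a power of two. The sketch below applies that rule; the OSD counts are hypothetical, and recent Ceph releases also ship a PG autoscaler that can manage this for you.

```python
import math


def suggested_pg_total(osd_count: int, pool_size: int,
                       target_pgs_per_osd: int = 100) -> int:
    """Rule-of-thumb PG total: (OSDs x target) / replicas, rounded up to a power of two."""
    if osd_count < 1 or pool_size < 1:
        raise ValueError("osd_count and pool_size must be at least 1")
    raw = math.ceil(osd_count * target_pgs_per_osd / pool_size)
    # Round up to the next power of two (values already a power of two are kept).
    return 1 << (raw - 1).bit_length()


# Hypothetical cluster with 12 OSDs.
print(suggested_pg_total(osd_count=12, pool_size=3))
print(suggested_pg_total(osd_count=12, pool_size=2))
```

The result is a total across the pools sharing those OSDs, so a cluster with several busy pools would split it between them rather than give each pool the full count.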

Moving Beyond One-Size-Fits-All Storage

The core limitation of generic cloud storage is that you cannot evolve your storage architecture as your requirements change. With root access to your Ceph cluster, you can implement changes as your workloads evolve, your data patterns shift, or your performance requirements increase.

This flexibility becomes particularly important as organizations move toward more complex, data-intensive applications. Machine learning workloads, real-time analytics, and modern application architectures all have storage requirements that benefit from custom tuning rather than generic configurations.

OpenMetal’s approach of providing enterprise Ceph storage with full administrative control addresses these limitations directly. Instead of accepting the constraints of managed storage services, you can architect storage solutions that align with your specific technical and business requirements.

The combination of Ceph’s distributed architecture, OpenMetal’s high-performance infrastructure, and full root access creates storage solutions that can be tuned for your exact specifications, something that’s simply not available from traditional cloud providers who prioritize standardization over customization. To explore pricing options for your specific requirements, visit our storage cluster pricing page.
