Mission-critical applications, ranging from e-commerce platforms to financial systems, demand uninterrupted access and performance. High availability (HA) is a critical factor in ensuring that these applications always remain operational and accessible for the businesses that rely on them.

HA refers to the ability of a system to keep functioning without significant disruption when hardware, software, or network failures occur. For mission-critical applications, even brief periods of downtime can have severe consequences, including financial losses, reputational damage, and the disruption of essential services.

Understanding High Availability Concepts

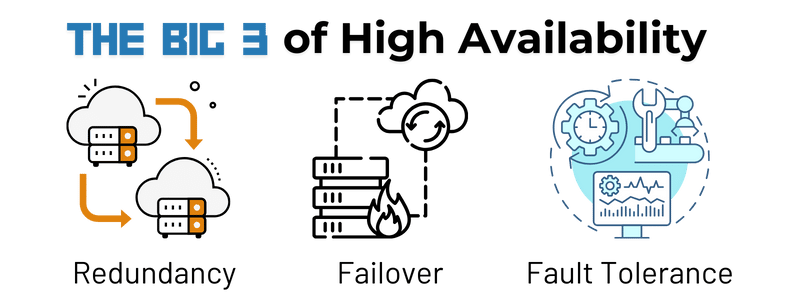

Several key areas make up the backbone of high availability systems:

- Redundancy: Keeping multiple components or systems, such as servers, storage devices, or network connections, in place so that a backup is available if one fails.

- Failover: The process of automatically switching to a backup system when the primary system fails or becomes unavailable.

- Fault Tolerance: The ability of a system to continue operating even when individual components fail.

HA architectures combine these concepts to create systems that are resilient to failures and can maintain continuous operation, which is essential for mission-critical applications.
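The following Python sketch shows why redundancy pays off, using the availability arithmetic commonly applied to redundant components. It assumes that component failures are independent. Real systems only approximate this, because shared power, shared networks, or a common software bug can take down every copy at once. The function names are our own.

```python
# Availability arithmetic for redundant components.
# Assumes component failures are independent, which real systems only approximate.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 (ignores leap years)


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of downtime per year for a given availability (0..1)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def redundant_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` redundant components where any single one suffices.

    The service is down only if every copy is down at the same time:
    A = 1 - (1 - a) ** n
    """
    return 1.0 - (1.0 - component_availability) ** copies


if __name__ == "__main__":
    single = 0.99  # one server that is up 99% of the time
    pair = redundant_availability(single, 2)
    print(f"single: {single:.4%}, ~{downtime_minutes_per_year(single):.0f} min/yr down")
    print(f"pair:   {pair:.4%}, ~{downtime_minutes_per_year(pair):.0f} min/yr down")
```

Under the independence assumption, pairing two 99% available servers raises availability to 99.99%. That cuts expected downtime from about 5,256 minutes to about 53 minutes per year.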

High Availability Solutions and Technologies

To achieve high availability, businesses can use various solutions and technologies:

Redundancy

Hardware Redundancy

RAID (Redundant Array of Independent Disks):

Combines multiple physical hard drives into a single logical volume, providing data redundancy and fault tolerance.

RAIDs contribute to hardware redundancy through:

- Data Duplication: Some RAID levels, like RAID 1, involve mirroring data across multiple drives. This means that each drive contains an identical copy of the data. If one drive fails, the data can be recovered from the remaining drive(s).

- Parity Information: Other RAID levels, like RAID 5 and RAID 6, use parity information to reconstruct data in case of a drive failure. Parity is calculated from the data blocks in each stripe (RAID 5 uses a bitwise XOR), so the contents of a failed drive can be recomputed from the surviving data and parity.

- Data Striping: RAID also involves striping data across multiple drives, which can improve performance by distributing I/O operations. However, striping alone does not provide redundancy.
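The parity idea fits in a few lines of Python. This is a minimal sketch of single (RAID 5-style) XOR parity over one stripe. It is not how a RAID controller is actually implemented, and the helper names are hypothetical. RAID 6's second parity block uses a different calculation that this sketch does not cover.

```python
# Minimal sketch of RAID 5-style XOR parity for one stripe.
# Real RAID 5 rotates the parity block across drives and works on fixed-size
# blocks; here each "drive" holds a single equal-length bytes block.

from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def compute_parity(data_blocks: list[bytes]) -> bytes:
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    """Recover the one lost data block: XOR of parity and all survivors."""
    return xor_blocks(surviving_blocks + [parity])


if __name__ == "__main__":
    stripe = [b"HA-1", b"HA-2", b"HA-3"]  # data on three drives
    parity = compute_parity(stripe)       # stored on a fourth drive
    lost = stripe.pop(1)                  # the drive holding b"HA-2" fails
    recovered = rebuild_missing(stripe, parity)
    print(recovered == lost)
```

XOR works here because any value XORed with itself is zero. XORing the parity with the surviving blocks cancels them out and leaves only the missing block.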

Specific RAID Levels and their redundancy:

- RAID 1 (Mirroring): Mirrors data across all drives. If one drive fails, the data can be recovered from another.

- RAID 5: Uses parity information to reconstruct data if one drive fails. This provides a good balance of performance and redundancy.

- RAID 6: Similar to RAID 5, but uses double parity, allowing data to be recovered even if two drives fail. This makes it the more fault-tolerant of the two parity-based levels, at the cost of a second drive's worth of capacity.
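The trade-off between these levels is easiest to see in numbers. The sketch below assumes identical drives and ignores hot spares and filesystem overhead. `raid_summary` is a hypothetical helper, and the minimum drive counts are the usual ones for each level.

```python
# Usable capacity and drive-failure tolerance for RAID 1, 5, and 6,
# assuming n identical drives of size drive_tb and no hot spares.

def raid_summary(level: int, n: int, drive_tb: float) -> tuple[float, int]:
    """Return (usable capacity in TB, guaranteed number of drive failures tolerated)."""
    if level == 1:
        if n < 2:
            raise ValueError("RAID 1 needs at least 2 drives")
        return drive_tb, n - 1          # every drive holds a full copy
    if level == 5:
        if n < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (n - 1) * drive_tb, 1    # one drive's worth of parity
    if level == 6:
        if n < 4:
            raise ValueError("RAID 6 needs at least 4 drives")
        return (n - 2) * drive_tb, 2    # two drives' worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    for level, n in [(1, 2), (5, 4), (6, 4)]:
        usable, tolerated = raid_summary(level, n, drive_tb=4.0)
        print(f"RAID {level} with {n} x 4 TB: {usable} TB usable, survives {tolerated} failure(s)")
```

Take four 4 TB drives as an example. RAID 5 yields 12 TB usable and survives one failure, while RAID 6 yields 8 TB and survives any two.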

Hot Spares:

Spare hardware components that are kept in a standby state, ready to replace a failed component without interrupting the system’s operation. This helps to ensure system continuity and minimize downtime since failed components can be rapidly replaced.

Hot spares can also support preventive maintenance. A spare can be swapped in for an aging or suspect component during off-peak hours or a scheduled maintenance window, reducing the risk of an unexpected failure.

Common examples of hot spares include:

- Spare servers: A spare server can be used to replace a failed server in a cluster or virtualized environment.

- Spare storage drives: A spare storage drive can be used to replace a failed drive in a RAID array.

- Spare network switches: A spare network switch can be used to replace a failed switch in a network infrastructure.

- Spare power supplies: A spare power supply can be used to replace a failed power supply in a server or other device.
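As a rough illustration of how a spare drive keeps a storage array running, the sketch below models a mirrored array with a hot spare pool. The `MirroredArray` class and its dict-based "drives" are hypothetical. Real controllers rebuild block by block, often in the background while continuing to serve I/O.

```python
# Minimal simulation of a hot spare pool for a mirrored (RAID 1-style) array.
# Hypothetical model: each drive is a dict of block name -> bytes.

from dataclasses import dataclass, field


@dataclass
class MirroredArray:
    members: list[dict]                      # each member holds a full copy
    spares: list[dict] = field(default_factory=list)

    def fail_member(self, index: int) -> None:
        """Remove a failed member and rebuild onto a hot spare, if one exists."""
        self.members.pop(index)
        if not self.members:
            raise RuntimeError("all mirror members lost; data unavailable")
        if self.spares:
            spare = self.spares.pop(0)
            spare.clear()
            spare.update(self.members[0])    # copy data from a healthy mirror
            self.members.append(spare)

    def read(self, block: str) -> bytes:
        return self.members[0][block]        # any healthy member can serve reads


if __name__ == "__main__":
    array = MirroredArray(members=[{"b0": b"data"}, {"b0": b"data"}], spares=[{}])
    array.fail_member(0)  # drive fails; spare is rebuilt from the surviving mirror
    print(len(array.members), len(array.spares), array.read("b0"))
```

Reads never stop during the failure, and the array returns to two full copies without anyone physically swapping a drive.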

Software Redundancy

Replication:

Creating copies of data across multiple systems or in different locations to ensure availability in case of failures. If one system or location fails, the data or application can still be accessed from the remaining copies.

There are two main types of replication:

- Asynchronous Replication: The primary system acknowledges a write before the change reaches the replicas, which receive it a short time later. This keeps write latency low, but the replicas can lag behind the primary, and any changes not yet replicated can be lost if the primary fails.

- Synchronous Replication: A write is not acknowledged until the replicas have also recorded it. This keeps the data on all systems consistent, but every write must wait for the replicas, which can impact performance, especially when they are far apart.
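The practical difference between the two modes is when the client receives its acknowledgment. This minimal in-memory sketch uses a hypothetical `Primary` class, not any database's API. It shows why an asynchronous setup can lose acknowledged writes if the primary fails before they are shipped.

```python
# Minimal sketch contrasting synchronous and asynchronous replication.
# Hypothetical in-memory model: a replica is just a list of committed writes.

class Primary:
    def __init__(self, replica: list, synchronous: bool):
        self.log: list = []
        self.replica = replica
        self.synchronous = synchronous
        self.pending: list = []             # writes not yet shipped (async only)

    def write(self, value) -> str:
        self.log.append(value)
        if self.synchronous:
            self.replica.append(value)      # replica records it before we acknowledge
        else:
            self.pending.append(value)      # shipped later by flush()
        return "ack"

    def flush(self) -> None:
        """Ship queued writes to the replica (async mode)."""
        self.replica.extend(self.pending)
        self.pending.clear()


if __name__ == "__main__":
    for mode in (True, False):
        replica: list = []
        primary = Primary(replica, synchronous=mode)
        primary.write("a")
        primary.write("b")
        primary.flush()
        primary.write("c")
        # The primary fails here; only what reached the replica survives failover.
        print("sync " if mode else "async", replica)
```

Run as a script, the synchronous replica holds all three writes. The asynchronous replica is missing `"c"` even though the client was told that write succeeded.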

Replication can be used in various scenarios, including:

- Database replication: Replicating databases to provide high availability and disaster recovery.

- File system replication: Replicating files to ensure data availability and redundancy.

- Application replication: Replicating applications to distribute load and improve fault tolerance.

Clustering:

Grouping multiple systems or components together to provide a single, unified service.

There are two main types of clustering:

- Shared-Nothing Clustering: Each system has its own independent storage. This can improve scalability and fault tolerance, but it can also make it more difficult to manage data consistency.

- Shared-Storage Clustering: The systems share a common storage system. This can simplify data management, but the shared storage becomes a single point of failure unless it is itself redundant.

Failover

Manual Failover

Manually switching to a backup system in case of a failure. Compared to automatic failover, this approach gives administrators complete control over the process and the flexibility to adapt to different situations. It is also cost-effective for smaller systems or businesses with limited resources. However, it is subject to human error, time-consuming, and slower than automatic failover, which makes it a poor fit when rapid recovery is required.

Tools commonly used in manual failover include:

- Network Management Systems: Network management systems (NMS) can provide a centralized view of network components and help administrators identify failures. They may also offer tools to initiate manual failovers for network devices like routers or switches.

- Storage Management Software: Storage management software can monitor the health of storage devices and provide tools to initiate manual failovers. For example, RAID controllers often have options for manual failover to a hot spare.

- Custom Scripts: Administrators can create custom scripts to automate certain aspects of the manual failover process, such as checking the status of components, initiating failover procedures, and verifying the backup system’s functionality.

- Command-Line Interfaces: Many systems and devices provide command-line interfaces (CLIs) that can be used to manually initiate failovers. For example, administrators can use the CLI of a database server to manually switch to a backup instance.
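A custom script for manual failover typically checks the components, asks an operator to confirm, and only then switches. The sketch below is hypothetical. The hostnames and the commented-out `switch_traffic` step are placeholders, and the default health check only tests whether a TCP port accepts connections.

```python
# Hypothetical helper for an operator-driven failover: it checks health and
# verifies the backup, but only switches when a human confirms.

import socket
from typing import Callable


def tcp_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def manual_failover(primary: tuple[str, int], backup: tuple[str, int],
                    confirm: Callable[[str], bool],
                    check: Callable[[str, int], bool] = tcp_check) -> str:
    if check(*primary):
        return "primary healthy; no failover needed"
    if not check(*backup):
        return "backup unreachable; failover aborted"
    if not confirm(f"Primary {primary[0]} is down. Fail over to {backup[0]}?"):
        return "operator declined failover"
    # switch_traffic(backup)  # placeholder: update DNS, a virtual IP, or a load balancer
    return f"failed over to {backup[0]}"


if __name__ == "__main__":
    # Simulated check so the example runs offline; use tcp_check in practice.
    print(manual_failover(("db-primary.example", 5432), ("db-backup.example", 5432),
                          confirm=lambda prompt: True,
                          check=lambda host, port: host == "db-backup.example"))
```

In real use, `confirm` could wrap `input()` so that an administrator answers the prompt, which keeps the final decision with a human.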

Automatic Failover

Automatically switching to a backup system without human intervention. Systems are continuously monitored using hardware-based health checks, software agents, or network management protocols. When the monitoring system detects a failure, it triggers the failover process and switches to a backup component.

Automatic failover offers faster recovery, better reliability, and less need for human intervention or oversight. However, implementing it can be quite complex, and the necessary management hardware and software add cost. A misbehaving health check can also trigger a failover when nothing is actually wrong (a false positive).
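One common way to limit false positives is to fail over only after several consecutive failed health checks. HAProxy's `fall` server setting works this way. The sketch below applies that idea in a hypothetical `FailoverMonitor` class. A real monitor would run `tick()` on a timer and perform an actual switch rather than just updating a field.

```python
# Minimal sketch of an automatic failover monitor that tolerates brief blips:
# it fails over only after `threshold` consecutive failed health checks.

from typing import Callable


class FailoverMonitor:
    def __init__(self, check: Callable[[str], bool], primary: str, backup: str,
                 threshold: int = 3):
        self.check = check
        self.active = primary
        self.backup = backup
        self.threshold = threshold
        self.failures = 0

    def tick(self) -> str:
        """Run one health check; fail over after `threshold` consecutive failures."""
        if self.check(self.active):
            self.failures = 0               # any success resets the counter
        else:
            self.failures += 1
            if self.failures >= self.threshold and self.active != self.backup:
                self.active = self.backup   # switch without human intervention
                self.failures = 0
        return self.active


if __name__ == "__main__":
    results = iter([True, False, True, False, False, False])  # scripted check results
    monitor = FailoverMonitor(lambda node: next(results), "app-1", "app-2")
    for _ in range(6):
        active = monitor.tick()
    print(active)
```

The single failure at the second check is ignored. Only the run of three failures at the end moves traffic to `app-2`. A higher threshold reduces false positives but delays recovery from real failures.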

Popular automatic failover tools include:

- HAProxy: A high-performance load balancer that can also be used for high availability. HAProxy can automatically detect failures and redirect traffic to backup servers.

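- Keepalived: A routing service that provides high availability using the Virtual Router Redundancy Protocol (VRRP), which moves a virtual IP address to a backup server when the active server fails. It can also health-check servers in a Linux Virtual Server (LVS) load-balancing pool.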

- Pacemaker: A high availability clustering solution that can be used to manage clusters of servers and automatically failover to backup nodes.

- Corosync: A group communication system that provides cluster membership, messaging, and quorum services. It is often used in conjunction with Pacemaker to provide high availability for clusters.

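- SBD (STONITH Block Device): A fencing mechanism used with Pacemaker clusters. It relies on a shared storage device, a hardware watchdog, or both to ensure that a failed or unresponsive node is reset before its resources are started elsewhere. This prevents two nodes from writing to the same data at once (split-brain).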

- ZFS (Zettabyte File System): A file system with built-in redundancy features, such as mirroring and RAID-Z. ZFS checksums all data, so it can detect silent corruption and automatically repair affected blocks from a redundant copy.
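To show how a tool like Keepalived decides which server holds the virtual IP, here is a much-simplified model of VRRP master election. Real VRRP exchanges periodic advertisements and uses timers to decide when a master is gone. This sketch just picks the highest-priority node that is still alive. The node names, priorities, and tie-breaking rule are assumptions for illustration.

```python
# Simplified model of VRRP master election: the highest-priority node that is
# still alive holds the virtual IP. Ties are broken by node name in this sketch.

from typing import Optional


def elect_master(priorities: dict[str, int], alive: set[str]) -> Optional[str]:
    candidates = [node for node in priorities if node in alive]
    if not candidates:
        return None                          # no node left to hold the virtual IP
    return max(candidates, key=lambda node: (priorities[node], node))


if __name__ == "__main__":
    priorities = {"lb-1": 150, "lb-2": 100}  # hypothetical load balancers
    print(elect_master(priorities, {"lb-1", "lb-2"}))  # normal operation
    print(elect_master(priorities, {"lb-2"}))          # lb-1 has failed
```

When `lb-1` recovers, this model hands the virtual IP back to it. That matches Keepalived's default preemption behavior, which can be disabled with the `nopreempt` option to avoid a second switchover.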

Planned Failover

Pre-scheduled failover events test the backup system and confirm that it is ready. Regular failover testing improves the resilience of your system and lets you find and fix potential issues before a real failure exposes them.

Some tools used in planned failovers are:

Automation Tools:

- Ansible: A popular configuration management tool that can automate various tasks related to planned failovers, including switching traffic to backup systems and updating configurations.

- Puppet: Another configuration management tool that can be used to automate planned failover procedures.

- Chef: A configuration management platform that can automate tasks such as deploying applications, managing infrastructure, and executing planned failovers.

Orchestration Tools:

- OpenStack: A cloud computing platform that provides orchestration capabilities for managing infrastructure and applications. It can be used to automate planned failovers for various components within a cloud environment.

- Kubernetes: A container orchestration platform that can automate the deployment and management of applications across multiple servers. It can also be used to execute planned failovers for containerized applications.

Monitoring Tools:

- Nagios: A popular network and system monitoring tool that can track system health before, during, and after a planned failover. Its event handlers can also run failover scripts when a check changes state.

- Zabbix: Another network and system monitoring tool that can be used for planned failover automation.

Database Replication Tools:

- Oracle Data Guard: A high availability solution for Oracle databases that includes tools for planned failovers.

- Microsoft SQL Server AlwaysOn: A high availability solution for SQL Server that includes tools for planned failovers.

Custom Scripts:

Organizations can develop custom scripts to automate specific tasks related to planned failovers, such as switching traffic or updating configurations.
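Such a script usually follows the same order of steps. It stops writes on the primary, lets the replica catch up, verifies that nothing would be lost, swaps roles, and redirects traffic. The sketch below models those steps in memory. The `Node` class, the `router` dict, and the step order are our assumptions. A real runbook would call your database's own switchover tooling, such as the features in Oracle Data Guard or SQL Server AlwaysOn, and update DNS, a virtual IP, or a load balancer.

```python
# Minimal sketch of a planned switchover between a primary and a replica.
# Hypothetical in-memory model; not any database's real switchover API.

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    role: str                                 # "primary" or "replica"
    log: list = field(default_factory=list)
    read_only: bool = False


def planned_switchover(primary: Node, replica: Node, router: dict) -> None:
    primary.read_only = True                  # 1. stop accepting new writes
    # 2. ship remaining entries (a real system waits for replication lag to reach zero)
    replica.log.extend(primary.log[len(replica.log):])
    if replica.log != primary.log:            # 3. verify no data would be lost
        primary.read_only = False
        raise RuntimeError("replica not caught up; switchover aborted")
    replica.role, primary.role = "primary", "replica"   # 4. swap roles
    replica.read_only = False
    router["writes"] = replica.name           # 5. redirect client traffic


if __name__ == "__main__":
    old = Node("db-1", "primary", log=["t1", "t2", "t3"])
    new = Node("db-2", "replica", log=["t1", "t2"])
    router = {"writes": "db-1"}
    planned_switchover(old, new, router)
    print(router, old.role, new.role)
```

Because the switchover is planned, the script can refuse to proceed when the replica is not caught up. That safety check is what distinguishes a switchover from an emergency failover.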

Fault Tolerance

Distributed Systems

Systems that consist of multiple, interconnected components that work together to achieve a common goal. These components can be located in different physical locations and communicate with each other using a network. In a distributed system, components can be isolated from each other, so a failure in one component is less likely to affect other components. This can help to prevent cascading failures and improve overall system resilience.

Examples of distributed systems that contribute to fault tolerance include:

- Distributed databases: Databases that are distributed across multiple nodes to improve performance, scalability, and fault tolerance.

- Content delivery networks (CDNs): Networks of servers that are distributed across the globe to deliver content to users quickly and reliably.

- Distributed file systems: File systems that are distributed across multiple nodes to provide high availability and fault tolerance.

Fault-Tolerant Databases

Databases that can continue to operate even if a component fails, using techniques like replication and transaction logging.

Examples of fault-tolerant databases include:

- Oracle RAC: A cluster-based database that provides high availability and scalability.

- Microsoft SQL Server AlwaysOn: A high availability and disaster recovery solution for SQL Server.

- MySQL Group Replication: A replication solution for MySQL that provides high availability and fault tolerance.
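Transaction logging, mentioned above, lets a database rebuild a consistent state after a crash. This minimal sketch replays a write-ahead log and applies only transactions that have a commit record. The record format is hypothetical, and production databases use more elaborate recovery that also handles undo and checkpoints.

```python
# Minimal sketch of redo-only transaction-log recovery.
# Hypothetical record format: ("set", txid, key, value) and ("commit", txid).

def recover(log: list[tuple]) -> dict:
    """Rebuild state by replaying, in log order, only transactions that committed."""
    committed = {rec[1] for rec in log if rec[0] == "commit"}
    state: dict = {}
    for rec in log:
        if rec[0] == "set" and rec[1] in committed:
            _, _, key, value = rec
            state[key] = value
    return state


if __name__ == "__main__":
    log = [
        ("set", 1, "balance:alice", 100),
        ("commit", 1),
        ("set", 2, "balance:alice", 40),   # crash happens before txn 2 commits
    ]
    print(recover(log))
```

The half-finished transaction 2 is discarded, so the recovered balance is the last committed value rather than a partial update.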

OpenStack, Bare Metal, and Ceph for High Availability

Businesses have no shortage of choices when it comes to high availability solutions. We think a mix of OpenStack, bare metal servers, and Ceph storage offers a strong balance of flexibility, control, power, and cost-effectiveness for just about any organization.

OpenStack

OpenStack is a popular open source cloud computing platform that offers a range of features to support high availability, including:

- Orchestration and Automation: OpenStack provides a powerful orchestration layer that can automate many tasks, such as provisioning, scaling, and managing resources. This automation can help to reduce human error and improve the reliability of HA systems.

- Fault Tolerance: OpenStack includes built-in fault tolerance features, such as high availability clusters and redundant components. This helps to ensure that the system can continue to operate even if one or more components fail.

- Scalability: OpenStack is highly scalable, allowing systems to be easily scaled up or down as needed. This can help to prevent system failures due to insufficient capacity or overload.

- Flexibility: OpenStack is highly flexible, allowing organizations to customize their infrastructure to meet their specific needs. This can help to ensure that the system is optimized for high availability.

- Community Support: OpenStack has a large and active community of developers and users. This provides access to a wealth of resources, including documentation, tutorials, and support forums.

Specific OpenStack components that contribute to high availability:

- Nova: The compute service in OpenStack, which can be configured to use high availability clusters.

- Neutron: The networking service in OpenStack, which can be configured to use redundant network components.

- Cinder: The block storage service in OpenStack, which can be configured to use redundant storage arrays.

- Swift: The object storage service in OpenStack, which can be configured to use redundant storage nodes.

Bare Metal Servers

Advantages of using bare metal servers for high availability systems include:

- Predictable Performance: Bare metal servers offer predictable performance, as there are no other virtual machines or applications sharing the hardware resources. This can be crucial for HA systems that require consistent and reliable performance.

- Control Over Hardware: With bare metal servers, you have full control over the hardware configuration. This allows you to optimize the system for specific workloads and ensure that it meets the requirements for high availability.

- Reduced Latency: Bare metal servers can often offer lower latency than virtual machines, which can be important for applications that require real-time processing or low latency communication.

- Security: Bare metal servers can provide a higher level of security than virtual machines, as there is no virtualization layer that could be compromised. This can be important for HA systems that handle sensitive data.

- Integration with HA Solutions: Bare metal servers can be easily integrated with other HA solutions, such as clustering, replication, and failover mechanisms. This lets you build highly available systems that are tailored to your specific needs.

However, it’s important to note that bare metal servers can also have some drawbacks:

- Higher Costs: Bare metal servers can be more expensive than virtual machines, especially for smaller deployments.

- Management Overhead: Managing bare metal servers can require more technical expertise and administrative overhead than managing virtual machines.

Ceph Storage Clusters

Ceph is a distributed object, block, and file storage system that provides high availability and scalability for storage. Key features include:

- Fault Tolerance: Ceph’s distributed architecture and self-healing capabilities make it highly fault-tolerant. It can automatically recover from failures such as node failures, disk failures, or network outages.

- Scalability: Ceph is designed to scale horizontally, meaning that you can add or remove storage nodes as needed to meet your capacity requirements. This makes it ideal for large-scale HA systems.

- Data Redundancy: Ceph uses replication and erasure coding to ensure data redundancy. This means that your data is stored on multiple nodes, providing protection against data loss.

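- Performance: Ceph can provide high performance, even for large-scale storage clusters. Clients use the CRUSH algorithm to calculate where data is stored. They then read from and write to many storage daemons (OSDs) directly and in parallel, with no central lookup service in the data path.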

- Self-Healing: Ceph has self-healing capabilities, meaning it can automatically detect and repair failures. This helps to minimize downtime and ensure data integrity.

- Integration with OpenStack: Ceph is tightly integrated with OpenStack, making it easy to deploy and manage within an OpenStack environment. This can simplify the deployment and management of HA systems.
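Ceph's two redundancy schemes trade capacity for protection differently. The arithmetic below assumes a replicated pool that keeps `size` full copies (Ceph's default is 3) and an erasure-coded pool that splits each object into `k` data chunks plus `m` coding chunks. The tolerance figures also assume that CRUSH places each copy or chunk in a separate failure domain. The 600 TB cluster is a hypothetical example, and real planning should leave headroom below full capacity.

```python
# Raw-to-usable capacity and loss tolerance for Ceph-style data protection.

def replicated(raw_tb: float, size: int = 3) -> tuple[float, int]:
    """Return (usable TB, copies that can be lost without data loss)."""
    return raw_tb / size, size - 1


def erasure_coded(raw_tb: float, k: int, m: int) -> tuple[float, int]:
    """Return (usable TB, chunks that can be lost without data loss)."""
    return raw_tb * k / (k + m), m


if __name__ == "__main__":
    raw = 600.0  # hypothetical cluster: 600 TB of raw disk
    print("3x replication:", replicated(raw))
    print("EC k=4, m=2:  ", erasure_coded(raw, k=4, m=2))
```

In this example, both layouts survive the loss of two copies or chunks, but erasure coding yields 400 TB usable versus 200 TB for 3x replication. The usual cost is extra CPU work and slower recovery, which is why replication is often kept for latency-sensitive workloads.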

Combining OpenStack, bare metal servers, and Ceph storage can create a powerful, highly available infrastructure for your mission-critical applications:

- OpenStack provides a flexible and scalable platform for managing and orchestrating cloud resources, including bare metal servers and Ceph storage clusters.

- Bare metal servers offer predictable performance, control over hardware, and reduced latency, making them ideal for critical applications.

- Ceph provides a distributed and self-healing storage system that can ensure high availability and data durability.

OpenStack can manage and orchestrate the entire infrastructure, including the bare metal servers and Ceph storage clusters. Because bare metal servers cost more than virtual machines, this centralized management also helps keep them efficiently utilized.

Bare metal servers provide the foundation for critical applications, while Ceph ensures that data is stored reliably and can be recovered in case of failures. Together, these technologies let organizations build robust, scalable infrastructure that can withstand failures and ensure continuous service delivery.

Want to explore our on-demand hosted private cloud powered by OpenStack for your high-availability infrastructure needs?

Questions? Contact us.

Read More on the OpenMetal Blog
