Deciding on the optimal infrastructure for your OLAP databases?

OpenMetal’s bare metal dedicated infrastructure fits both performance and cost requirements.

Engines like ClickHouse, StarRocks, and Apache Druid have transformed what teams can do with analytical data. Sub-second queries across billions of rows are no longer aspirational — they’re expected. But most teams spend weeks evaluating which OLAP engine to deploy and almost no time evaluating what to deploy it on.

That’s a problem, because the infrastructure underneath your OLAP cluster is the invisible variable that determines whether your queries return in 200 milliseconds or two seconds — and whether that performance holds under load or degrades unpredictably. One cybersecurity firm building a production ClickHouse cluster for real-time security analytics chose dedicated bare metal servers for exactly this reason: their workload demanded consistent performance that shared infrastructure couldn’t deliver.

This article explains why OLAP databases and dedicated servers are a natural fit — architecturally, financially, and operationally.

Key Takeaways

- OLAP workloads demand sustained CPU throughput, high memory bandwidth, and consistent NVMe performance — characteristics that shared cloud infrastructure is structurally unable to guarantee because of hypervisor scheduling, NUMA abstraction, and provisioned I/O caps.

- Dedicated bare metal servers expose the full hardware to the OLAP engine: real NUMA topology for memory pinning, uninterrupted CPU scheduling for vectorized scans, and NVMe drives that deliver rated throughput without burst credit limits.

- The cost math favors dedicated infrastructure at OLAP scale. A 3-node ClickHouse cluster on OpenMetal XL v4 servers costs approximately $5,514/month with a 1-year contract — compared to approximately $24,048/month on-demand or ~$15,600/month at estimated reserved pricing for comparable AWS i4i.metal instances, before egress and support costs.

- Real production workloads validate this approach. A cybersecurity firm runs a ClickHouse cluster on OpenMetal bare metal with tiered storage (NVMe for hot data, Ceph S3 for historical data), processing real-time security analytics with continuous ingestion.

- When managed OLAP services make sense (small scale, variable workloads, rapid experimentation), use them. When your workloads are sustained, your data volumes are measured in terabytes, and your query SLAs matter, dedicated infrastructure is the better foundation.

What Makes OLAP Workloads Different

OLAP and OLTP workloads place fundamentally different demands on infrastructure.

- OLTP transactions are short-lived: a single row insert, a point lookup, a quick update. Each operation touches a small amount of data and completes in milliseconds. The infrastructure challenge for OLTP is handling many of these small operations concurrently with low latency.

- OLAP is the opposite. A single analytical query might scan an entire column across hundreds of millions of rows, aggregate the results with a GROUP BY, join against another large table, and return a summary. That query can run for seconds or minutes, consuming sustained CPU, memory bandwidth, and storage throughput for its entire duration.

This distinction matters because infrastructure optimized for OLTP — fast response to many small requests — is structurally mismatched with what OLAP engines actually need.

| Characteristic | OLTP | OLAP |

|---|---|---|

| Query duration | Milliseconds | Seconds to minutes |

| Data access pattern | Point lookups, small row ranges | Full column scans, large range reads |

| CPU demand | Burst (many short tasks) | Sustained (long, parallel scans) |

| Memory demand | Small per-query footprint | Large intermediate datasets (hash joins, aggregations) |

| Storage pattern | Random reads/writes, small I/O | Sequential reads, large I/O |

| Network demand | Low per-query | High for distributed shuffles and replication |

Columnar Scans and Sustained Throughput

OLAP databases store data in columnar format. When a query asks for the average of a single column across 500 million rows, the engine reads just that column sequentially from storage, decompresses it, and processes it through vectorized execution — operating on batches of values simultaneously rather than one row at a time. This pattern demands sustained, uninterrupted CPU throughput and memory bandwidth across all available cores for the duration of the scan. Burst capacity is irrelevant; what matters is how fast the hardware can sustain a parallel read across every core for seconds at a time.

Large Aggregations and Memory Pressure

Operations like GROUP BY, JOIN, and window functions materialize large intermediate datasets in memory. A hash join between two large tables can easily consume tens or hundreds of gigabytes of RAM before returning a result. When memory runs short, the engine spills to disk — and query times jump by an order of magnitude.

How Shared Infrastructure Undermines OLAP Performance

Cloud VMs and shared hosting environments are designed to efficiently multiplex many tenants onto the same physical hardware. That works well for OLTP and general-purpose workloads, where each tenant’s resource usage is bursty and brief. For OLAP, the design assumptions of shared infrastructure create specific problems.

The Noisy Neighbor Problem for Long-Running Queries

On shared infrastructure, CPU time is allocated by a hypervisor scheduler that balances demand across all tenants on the same physical host. An OLTP query that completes in 5 milliseconds is largely unaffected by brief scheduling interruptions. But an OLAP query scanning columns for 10 seconds straight is disrupted by every preemption. Each interruption stalls the vectorized execution pipeline, adds latency, and — critically — makes query times unpredictable. For teams running SLA-bound dashboards, this unpredictability shows up as tail latency variance in p99 response times.

Memory Bandwidth Contention and Virtualization Overhead

Columnar OLAP engines are often memory-bandwidth-bound rather than compute-bound. The scan throughput depends on how fast data moves from RAM through the memory bus to the CPU. Hypervisors add overhead to this path by abstracting the physical memory topology. On a dual-socket server, the NUMA (Non-Uniform Memory Access) topology determines which memory banks are local to which CPU socket. OLAP engines like ClickHouse are NUMA-aware: they pin memory allocations to the local socket to avoid the latency penalty of cross-socket access. But on a cloud VM, the hypervisor abstracts this topology. vCPUs can be assigned across physical sockets without the guest OS knowing, silently degrading memory-bandwidth-dependent columnar scan throughput.

I/O Variance on Shared Storage

NVMe performance on cloud VMs is typically provisioned with IOPS and throughput caps. Many cloud providers use a burst-credit model: you get high performance for a brief period, then throttling kicks in. OLAP columnar scans are the worst case for this model — they generate sustained, sequential reads across terabytes of data. Once burst credits are exhausted, scan performance degrades until credits regenerate. On dedicated NVMe, there are no provisioned limits. The drives deliver their rated throughput continuously.

What OLAP Databases Need from Infrastructure

Translating the workload characteristics above into hardware requirements produces a specific profile. This is where dedicated servers built for data-intensive workloads — like OpenMetal’s XL v4 bare metal servers — map directly to what OLAP engines demand.

| Resource | OLAP Demand | What XL v4 Dedicated Servers Deliver |

|---|---|---|

| CPU | Sustained multi-core throughput for vectorized execution | 64 physical cores (dual Intel Xeon Gold 6530, 5th Gen Emerald Rapids) with no hypervisor scheduling overhead. 160 MB L3 cache per socket keeps column data cache-resident during aggregation passes. |

| Memory | High bandwidth and large capacity for intermediate results | 1 TB DDR5-5600 across 8 channels per socket. Full NUMA topology exposed, so OLAP engines can pin memory to local sockets. For workloads with massive hash joins or high concurrency, the XXL v4 provides 2 TB DDR5 out of the box. |

| Storage | NVMe sequential read throughput for columnar scans | 4x 6.4 TB Micron 7450 MAX NVMe (25.6 TB total) delivering up to 6,800 MB/s sequential reads and 1M random read IOPS — with no provisioned caps or burst credits. 3 DWPD endurance handles continuous ingestion alongside analytical queries. |

| Network | 20+ Gbps east-west for distributed shuffle and replication | 20 Gbps dedicated private bandwidth between bare metal nodes in the same deployment. No shared fabric, no contention from other tenants. |

The 5th Generation Intel Xeon processors in these servers represent a meaningful generational improvement for OLAP specifically. The jump from 60 MB to 160 MB of L3 cache per socket means substantially more column data stays cache-resident during aggregation passes — a direct benefit for the scan-heavy access patterns that define analytical workloads. DDR5 memory provides higher bandwidth per channel than DDR4, and PCIe 5.0 ensures the storage bus won’t bottleneck as NVMe drives get faster.

For teams that need more local storage capacity or are running in-memory OLAP workloads with especially large intermediate datasets, the XXL v4 configuration extends to 38.4 TB of NVMe (6x 6.4 TB) and 2 TB of DDR5, while sharing the same dual Xeon Gold 6530 CPU platform.

Network Architecture and Distributed OLAP

When you run a distributed OLAP engine like a ClickHouse cluster or StarRocks, queries don’t execute on a single node. The query coordinator breaks each query into stages, distributes partial scans across shards, and then shuffles intermediate results between nodes for joins and final aggregation. These shuffle operations can move gigabytes of data between nodes for a single query.

This east-west traffic pattern is where network architecture matters. On cloud VMs, private network bandwidth is shared with other tenants on the same physical host, and cross-availability-zone traffic incurs both latency and per-GB charges. During peak query concurrency — say, a morning dashboard refresh hitting the cluster with dozens of simultaneous queries — network contention can become the bottleneck that determines overall query latency.

With dedicated bare metal servers connected by 20 Gbps private bandwidth within the same deployment, shuffle operations run on a dedicated fabric with no contention. The network bandwidth is yours, consistently, regardless of what other customers’ workloads look like. For a production deployment like the cybersecurity firm running ClickHouse on OpenMetal bare metal, the architecture also supports merged VLANs between bare metal nodes and OpenStack-hosted supporting services (like ZooKeeper), giving the entire cluster high-bandwidth, low-latency internal communication.

The Cost Tipping Point for OLAP Infrastructure

OLAP workloads have a cost profile that makes the economics of dedicated infrastructure particularly favorable. Unlike OLTP workloads that might scale up during business hours and down at night, most OLAP clusters run continuously: they ingest data around the clock, serve dashboards during business hours, and run batch ETL or materialized view refreshes overnight. There’s no “off” period where hourly billing saves money.

Here’s how a 3-node ClickHouse cluster compares between OpenMetal’s XL v4 bare metal servers and the closest equivalent AWS instance, the i4i.metal (64 cores, 1 TB RAM, local NVMe).

Scenario: 10 TB compressed dataset (~50-100 TB logical), continuous query workload, 5 TB/month of egress serving BI tools.

| Component | OpenMetal (3x XL v4, 1-yr) | AWS (3x i4i.metal, On-Demand) | AWS (3x i4i.metal, 1-yr Reserved est. |

|---|---|---|---|

| Compute | 3 × $1,838.16 = **$5,514** | 3 × $8,016 = **$24,048** | 3 × ~$5,200 = **~$15,600** |

| Egress (5 TB/mo) | $0 (within allotment) | ~$450 | ~$450 |

| Support | Infrastructure support included, with direct access to senior technical staff | Additional, no direct access to engineers | Additional, no direct access to engineers |

| **Monthly estimate** | **~$5,514** | **~$24,498+** | **~$16,050+** |

| **Annual estimate** | **~$66,174** | **~$293,976+** | **~$192,600+** |

*OpenMetal pricing confirmed February 2026 (1-year contract). AWS on-demand pricing based on published rates for US East (Virginia) as of early 2026 — verify at aws.amazon.com/ec2/pricing for current figures. AWS reserved estimates based on typical 1-year all-upfront discount ranges. Egress assumes $0.09/GB after the first 100 GB on AWS. Actual costs vary by configuration, region, and commitment term.*

Beyond the raw compute cost, a few additional factors compound in favor of fixed-cost infrastructure at OLAP scale.

The OpenMetal XL v4 runs 5th Gen Intel Emerald Rapids processors with DDR5 memory.

The AWS i4i.metal runs 3rd Gen Intel Ice Lake with DDR4 — two CPU generations older, with a smaller L3 cache and lower memory bandwidth.

On paper, the OpenMetal configuration is the newer, faster hardware at a lower price point.

It’s worth being specific about where AWS has advantages in this comparison. The i4i.metal offers up to 75 Gbps peak network bandwidth versus 20 Gbps on the XL v4 — relevant for extremely network-intensive distributed queries. AWS also provides integrated ecosystem services like S3 for storage tiering, Glue for ETL, and IAM for access control that aren’t part of a bare metal deployment. And AWS 3-year reserved pricing can close the cost gap further, though it also requires a longer commitment. For a deeper look at the reserved instance vs. bare metal cost calculus, we’ve covered that comparison in detail.

A Note about Data Gravity and Egress Fees

OLAP databases typically sit at the center of a data ecosystem: they ingest from event streams, application databases, data lakes, and third-party APIs, and they serve output to BI tools, dashboards, data science notebooks, and downstream services. Every one of those downstream consumers generates egress when the OLAP cluster is hosted in a public cloud.

At $0.09 per GB, 5 TB of monthly egress costs approximately $450 on AWS. That number scales linearly with the number of consumers and the volume of analytical results they pull. On OpenMetal, egress is included within generous bandwidth allotments, with 95th percentile billing above the limit — a pricing model designed for data-intensive workloads rather than penalizing them.

The Tipping Point for the Most Value

ChistaDATA’s published analysis found that running ClickHouse analytical workloads on bare metal costs roughly one-fourth of equivalent AWS infrastructure. Our pricing validates that finding: at the 50TB scale, the 3-node XL v4 cluster runs at 46% of the hyperscaler on-demand cost — that’s before negotiating reserved instance discounts which come with their own lock-in tradeoffs.

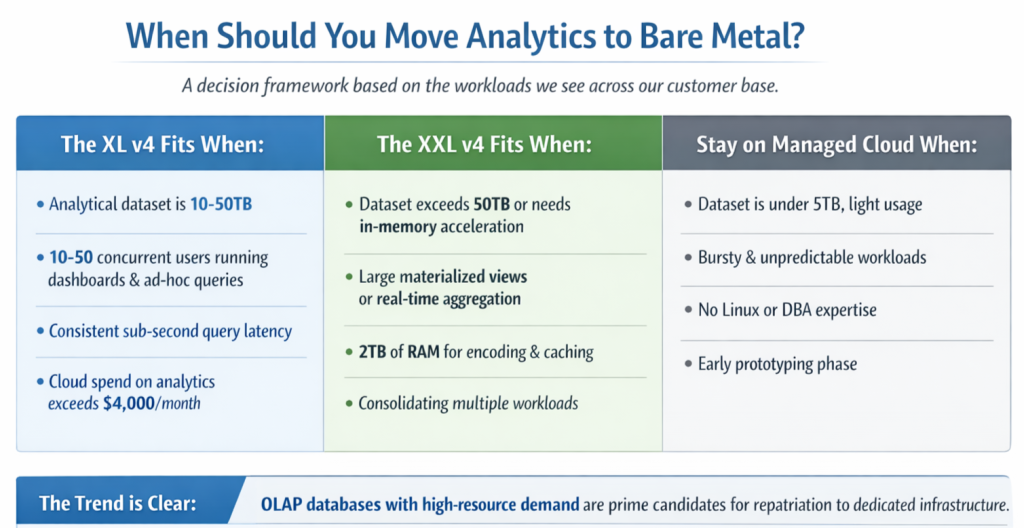

The cost tipping point? For most teams, it starts around 10TB of analytical data. Below that, managed cloud services may make sense — especially if your team is small and usage patterns are unpredictable. Above it, every additional terabyte widens the gap in bare metal’s favor.

Which OLAP Engines Fit XL v4 and XXL v4 Hardware?

Not all OLAP databases use hardware the same way. Here’s how the leading open-source engines map to OpenMetal Bare Metal Dedicated Servers.

ClickHouse is the most natural fit for the XL v4. Its MergeTree engine family performs sequential reads across sorted, compressed columnar data — exactly the access pattern that Micron 7450 NVMe drives handle best. With 1TB of RAM, ClickHouse keeps hot partitions and dictionary-encoded columns in memory for sub-second queries on datasets that would require distributed caching on cloud VMs with half the RAM. We’ve published a detailed architecture guide for ClickHouse deployment on OpenMetal bare metal and OpenStack infrastructure.

Apache Druid benefits from the multi-drive configuration. Historical nodes store immutable segments on disk and serve them via memory-mapped I/O. Running 4-6 NVMe drives in a JBOD configuration gives Druid’s historical processes parallel read paths across segments. The 1TB of RAM on the XL v4 allows broker nodes to maintain large query result caches.

StarRocks couples compute and storage tightly, which makes the XXL v4’s 2TB of RAM particularly valuable. StarRocks stores materialized views and frequently accessed data in memory for acceleration. Teams running large materialized views over 100TB+ datasets will find the XXL v4’s memory capacity a direct performance multiplier.

Apache Doris shares a similar architecture with StarRocks but emphasizes high-concurrency mixed workloads. The 64 physical cores on both the XL v4 and XXL v4 handle concurrent analytical and point queries without the thread-scheduling overhead of virtualized environments.

When OpenMetal Dedicated Servers Are the Right Choice for OLAP workloads

Dedicated infrastructure like OpenMetal Bare Metal Dedicated Servers fit OLAP workloads best when the following conditions are true: the cluster runs continuously rather than in short bursts, data volumes are measured in terabytes or more, query consistency matters for SLA-bound dashboards and reporting, compliance or data isolation requirements demand single-tenant infrastructure, or the team has crossed the cost tipping point where cloud bills exceed what fixed-cost infrastructure would cost.

Managed cloud OLAP services like ClickHouse Cloud, Snowflake, and BigQuery remain the right choice for teams that are still experimenting with analytical workloads, have variable or unpredictable query volumes, need to get started quickly without managing infrastructure, or are working at a scale where the managed service premium is small relative to the operational simplicity it provides. For teams that want dedicated infrastructure with orchestration, OpenMetal also offers Hosted Private Cloud — OpenStack-powered, deployed in 45 seconds, with the same underlying hardware.

The most effective pattern we see is: teams start with managed OLAP services, grow into predictable workloads and cost pressure, and then evaluate self-hosted OLAP on dedicated infrastructure as the next step. The infrastructure decision follows the workload maturity.

The Bottom Line

Running OLAP databases on bare metal isn’t about rejecting the cloud. It’s about putting the right workload on the right infrastructure. Columnar analytics engines need dedicated CPU cores, large memory pools, and uncontested NVMe throughput. OpenMetal’s XL v4 and XXL v4 Bare Metal Servers deliver all three — at 46-54% of the cost of comparable hyperscaler instances.

For a 3-node ClickHouse cluster at the 50TB scale, that’s $78,000+ in annual savings on infrastructure alone.

If your analytics infrastructure costs have crossed the tipping point, we’d like to show you the numbers for your specific workload. Talk to an infrastructure engineer about running your OLAP databases on OpenMetal dedicated hardware.

FAQ

What is an OLAP database?

OLAP (Online Analytical Processing) databases are designed for analytical queries over large datasets. Unlike OLTP databases that handle individual transactions, OLAP engines like ClickHouse, StarRocks, Apache Druid, and DuckDB use columnar storage and vectorized execution to scan and aggregate billions of rows for reporting, dashboards, and ad hoc analysis.

Why do OLAP databases need dedicated servers?

OLAP queries consume sustained CPU, memory bandwidth, and storage throughput for seconds or minutes at a time. Shared cloud infrastructure introduces scheduling interruptions, memory bandwidth contention, and provisioned I/O caps that create unpredictable query latency. Dedicated servers eliminate these variables by giving the OLAP engine exclusive access to the underlying hardware.

What hardware specs matter most for OLAP performance?

The most important specs for OLAP are sustained multi-core CPU throughput (for vectorized columnar scans), memory bandwidth and capacity (for intermediate aggregation results and hash joins), NVMe sequential read throughput without provisioned caps (for scanning terabyte-scale datasets), and east-west network bandwidth (for distributed shuffle operations in clustered deployments).

How do dedicated servers compare to cloud OLAP services in cost?

For always-on OLAP workloads, dedicated servers offer substantial cost advantages. A 3-node ClickHouse cluster on OpenMetal XL v4 bare metal servers costs approximately $5,514/month (1-year contract), compared to approximately $24,048/month on-demand or ~$15,600/month at estimated reserved pricing for comparable AWS i4i.metal instances. Managed cloud OLAP services (Snowflake, ClickHouse Cloud) add further premiums through compute-time or data-scanned pricing models. The trade-off is operational: dedicated servers require teams to manage the OS and database, while managed services handle that for a premium.

Can I run ClickHouse or StarRocks on bare metal?

Yes. Both ClickHouse and StarRocks run natively on bare metal Linux servers with no virtualization required. In fact, bare metal is the recommended deployment model for performance-sensitive production clusters. OpenMetal showcases a production ClickHouse deployment for a cybersecurity firm running on 6 bare metal nodes with hybrid NVMe and Ceph storage tiering.

More Bare Metal Content