In this article

- Understanding Ceph Replication: What It Actually Does

- Performance Impact: The Real Numbers

- What Replication Protects (And What It Doesn’t)

- Why You Need a Separate Storage Cluster

- How OpenMetal Changes the Backup Economics

- Picking the Right Setup for Your Workload

- Getting It Set Up

- What This Actually Costs

- Mistakes to Avoid

- The Bottom Line

- Ready to Get Started?

If you’re setting up Ceph storage, you’ll face a big decision early on: replica 2 or replica 3? This choice affects everything from how much usable storage you get to how fast your writes perform to how many disk failures you can handle.

But here’s the thing most people get wrong: neither replica 2 nor replica 3 gives you actual backup. Yes, you read that right. Replication keeps your storage available when hardware fails, but it won’t save you from accidental deletions, corruption, ransomware, or a dozen other ways data disappears.

In this article, we’ll break down what each replication option actually does, compare the real-world performance differences, and explain why you need a separate backup cluster no matter which you choose. We’ll also show you how OpenMetal’s fixed-price model makes proper backup architecture affordable, something that’s tough with traditional cloud providers.

Understanding Ceph Replication: What It Actually Does

Think of Ceph replication like this: when you write data, the system automatically creates multiple copies and spreads them across different storage drives (OSDs). If a drive fails, you’ve still got other copies ready to go. Your applications keep running, and you don’t lose data.

Dual Replication (Replica 2)

With replica 2, Ceph keeps two copies of everything across separate OSDs. Here’s what you get:

- 50% usable capacity from your raw storage (basically, you can use half)

- Survives one disk failure without breaking a sweat

- About 50% more write IOPS than triple replication in Micron’s all-NVMe test (seen from the other side, replica 3 delivers about 35% fewer)

- Less hardware needed for the same amount of usable space

The recommended setup uses size=2 and min_size=2, which means both copies need to confirm a write before Ceph tells your application “yep, that’s saved”. If one disk dies, no data is lost, but the placement groups that had a copy on that disk pause I/O until Ceph rebuilds the second copy on another OSD.

Triple Replication (Replica 3)

Replica 3 keeps three copies of your data:

- 33% usable capacity from raw storage (you get about a third)

- Data survives two disks failing at once (with min_size=2, the affected placement groups pause I/O until a second copy is restored)

- The industry standard for production environments

- Keeps accepting writes even when one disk is down (if you set min_size=2)

Most people run size=3 and min_size=2, which means writes can continue with just two copies available, but you’re always maintaining three copies under normal conditions.
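To make the capacity trade-off concrete, here is a minimal sketch of the arithmetic: usable space is raw space divided by the number of copies. The helper name `usable_tb` is made up for illustration, and the calculation ignores the free-space headroom a real cluster needs.

```shell
# usable_tb RAW_TB REPLICAS
# Prints usable capacity in TB: raw capacity divided by the number of copies.
usable_tb() {
    awk -v raw="$1" -v copies="$2" 'BEGIN { printf "%.1f\n", raw / copies }'
}

usable_tb 19.2 3   # replica 3 on 19.2TB raw
usable_tb 19.2 2   # replica 2 on 19.2TB raw
```

The same 19.2TB of raw disk yields 6.4TB with three copies and 9.6TB with two, which is where the "about a third" and "half" figures come from.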

Performance Impact: The Real Numbers

The performance difference between replica 2 and replica 3 is significant. Micron ran tests on an all-NVMe Ceph cluster and the results are pretty clear:

Write Performance:

- Replica 2: ~363,000 IOPS at 5.3ms average latency

- Replica 3: ~237,000 IOPS at 8.1ms average latency

- You lose about 35% of your write performance moving from replica 2 to replica 3

Read Performance:

- Reads perform basically the same with either option

- Ceph can grab data from any available copy, so more replicas don’t slow you down

Mixed Workloads:

- A realistic 70/30 read/write mix sees about 25% fewer IOPS with replica 3

- Write latency jumps by more than 50% with triple replication

Why the difference? It comes down to how replication works. Every write goes to a primary OSD, which forwards it to each replica and waits for all of them to confirm before Ceph says “done”. With replica 3, that’s three disk writes and one more replica to wait on than with replica 2. It adds up.

This matters even more with OpenMetal’s all-NVMe infrastructure (Micron 7450 MAX or 7500 MAX drives). These drives are so fast that the network overhead from replication becomes your bottleneck, not the disks themselves.
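If you want to check the percentages against the Micron write figures quoted above, the arithmetic is short. This is only a sketch; `pct_change` is a hypothetical helper name, and the inputs are the IOPS and latency numbers from this section.

```shell
# pct_change FROM TO
# Prints the percentage change going from FROM to TO, to one decimal place.
pct_change() {
    awk -v from="$1" -v to="$2" 'BEGIN { printf "%.1f\n", (to - from) / from * 100 }'
}

pct_change 363000 237000   # write IOPS, replica 2 -> replica 3
pct_change 237000 363000   # write IOPS, replica 3 -> replica 2
pct_change 5.3 8.1         # average write latency (ms), replica 2 -> replica 3
```

Note that the direction matters: dropping from replica 2 to replica 3 costs roughly 35% of write IOPS, while going the other way is a gain of roughly 53%.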

What Replication Protects (And What It Doesn’t)

Let’s talk about what replication actually does for you. It’s excellent at handling:

Hardware Failures

- A disk dies? No problem, you’ve got other copies

- An entire server goes down? Covered

- Network links fail? Your data’s still accessible

- Power supply dies? The cluster keeps running

Temporary Unavailability

- Taking servers offline for maintenance? Go ahead

- Brief network hiccups? No big deal

- Rolling software upgrades? Easy

What Replication Can’t Save You From

Here’s the part that trips people up: replication gives you high availability, not backup protection. It won’t help with:

Data Corruption:

- Bad data that gets copied to all replicas

- Software bugs that trash everything

- Filesystem corruption affecting multiple disks

Human Error:

- Accidentally deleted a pool? It’s gone from all replicas

- Fat-fingered a configuration change? All copies affected

- Ran the wrong script? Oops, everywhere at once

Security Problems:

- Ransomware encrypts what it can see – that’s all your replicas

- Someone with compromised credentials deletes data

- An attack on the storage system itself

Catastrophic Failures:

- Your datacenter has a really bad day

- Multiple failures at once exceed what your replica count handles

- Configuration mistakes that break everything

Software Issues:

- Ceph bugs affecting the whole cluster

- Upgrades that go sideways

- Split-brain scenarios when things go really wrong

This is a well-established truth in the Ceph community: replication protects against hardware failures, but backup is what saves you from everything else.

Why You Need a Separate Storage Cluster

This is non-negotiable for production: you need a completely separate cluster for backups. Here’s what that actually gets you:

Real Off-Cluster Backup

Your backup data lives on completely different hardware. If something catastrophic happens to your primary cluster (corruption, compromise, or even total failure), your backup cluster is fine. They’re independent.

Point-in-Time Recovery

With a separate backup cluster, you can take snapshots that capture your data at specific moments. Accidentally deleted something an hour ago? Restore from the snapshot before the mistake. Data got corrupted? Roll back to yesterday.

Protection from Your Own Mistakes

We all make them. Maybe you run a delete command on the wrong pool. Maybe a script goes haywire. With a separate backup cluster, you can recover what the primary cluster just destroyed.

Compliance Requirements

HIPAA, GDPR, and SOC 2 all expect you to be able to restore data after an incident, and auditors commonly look for backups that are isolated from production. Not just “separate pools,” but actually separate infrastructure.

Why Hyperscalers Make This Expensive

Here’s where traditional cloud providers make proper backup architecture painful:

Egress Costs Kill You:

- AWS charges $0.09/GB to move data out

- Restoring a 10TB backup? That’s $900 just in transfer fees

- Every backup sync generates more egress charges
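The per-GB math is easy to run for your own restore sizes. A minimal sketch, assuming the $0.09/GB rate quoted above and decimal units (1TB = 1,000GB); `egress_cost_usd` is a hypothetical helper name.

```shell
# egress_cost_usd SIZE_GB RATE_PER_GB
# Prints the transfer cost in USD for moving SIZE_GB out at a flat per-GB rate.
egress_cost_usd() {
    awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", gb * rate }'
}

egress_cost_usd 10000 0.09   # restoring a 10TB backup
```

Real bills may use tiered rates, so treat this as a rough upper-level estimate rather than a quote.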

Storage Adds Up Fast:

- Per-GB pricing compounds with the backup storage you need

- Block storage costs multiply with replication

- No way to optimize by using different hardware for backup

Unpredictable Bills:

- Usage-based pricing makes budgeting nearly impossible

- Backup operations create surprise costs

- Need to restore everything? Hope you budgeted for it…

How OpenMetal Changes the Backup Economics

OpenMetal’s fixed monthly pricing flips the script on backup architecture:

You Know What You’ll Pay

Deploy a complete separate Ceph cluster for backups at a fixed monthly cost. Unlike hyperscalers where backup infrastructure adds unpredictable variable costs, you know exactly what your budget is.

Egress That Makes Sense

OpenMetal measures egress at the 95th percentile and charges $375 per Gbps needed if you go over your allotment. Those temporary spikes when you’re syncing backups or doing a restore? They don’t trigger outrageous fees. Most backup operations stay within what’s included, and even overages cost way less than per-GB pricing.
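To see why short spikes don’t move a 95th-percentile bill, here is a rough sketch of the usual burstable-billing calculation: collect bandwidth samples, sort them, throw away the top 5%, and bill on the highest value left. The sampling interval and the sample values below are assumptions for illustration, not OpenMetal’s published method, and `p95` is a hypothetical helper name.

```shell
# p95: read one bandwidth sample per line on stdin and print the 95th-percentile value.
p95() {
    sort -n | awk '
        { v[NR] = $1 }
        END {
            if (NR == 0) exit 1
            idx = int((NR * 95 + 99) / 100)   # ceil(0.95 * NR) using integer math
            print v[idx]
        }'
}

# Hypothetical samples in Mbps: steady 100 Mbps traffic plus one big restore spike.
{
    i=0
    while [ "$i" -lt 19 ]; do echo 100; i=$((i + 1)); done
    echo 5000
} | p95
```

With 20 samples, the single 5000 Mbps spike falls in the discarded top 5%, so the billable figure stays at 100 Mbps.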

Mix and Match Hardware

With fixed pricing, you can optimize each cluster independently. Your production cluster might need maximum performance, while your backup cluster prioritizes capacity. Here are some example architectures:

Example 1: Fast Production, Efficient Backup

- Primary cluster: 3x Medium V4 servers with replica 3

- 19.2TB raw, 6.4TB usable

- Full speed, production-ready

- Backup cluster: 3x Medium V4 servers with 4+2 erasure coding

- 19.2TB raw, 12.8TB usable

- Room for multiple snapshot generations

- Lower performance is fine for backups

Example 2: Performance Primary, Solid Backup

- Primary cluster: 3x Medium V4 servers with replica 2

- 19.2TB raw, 9.6TB usable

- Better write performance

- Backup cluster: 3x Small servers with replica 3

- Lower-cost hardware for backup-only use

- Triple replication keeps your backups safe

- Can put it in a different datacenter

Example 3: Big Storage with History

- Primary cluster: 3x Large V4 servers with replica 2

- 60TB raw, 30TB usable

- High performance for active workloads

- Backup cluster: 3x Large V4 servers with 6+3 erasure coding

- 60TB raw, 40TB usable

- Keep daily snapshots, weekly retention

- Monthly and yearly snapshots for compliance
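The usable figures for the erasure-coded backup clusters above follow from the k+m layout: out of every k+m chunks written, k hold data. A minimal sketch of that arithmetic, with `ec_usable_tb` as a hypothetical helper name:

```shell
# ec_usable_tb RAW_TB K M
# Prints usable capacity in TB for a k+m erasure-coded pool: raw * k / (k + m).
ec_usable_tb() {
    awk -v raw="$1" -v k="$2" -v m="$3" 'BEGIN { printf "%.1f\n", raw * k / (k + m) }'
}

ec_usable_tb 19.2 4 2   # Example 1 backup cluster (4+2)
ec_usable_tb 60 6 3     # Example 3 backup cluster (6+3)
```

Both 4+2 and 6+3 give you two thirds of raw capacity, compared with one third for replica 3, which is why erasure coding suits a capacity-focused backup tier.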

Deploy Fast, Scale Easy

OpenMetal’s 45-second deployment means you can spin up backup clusters instantly. This lets you:

- Test different backup architectures quickly

- Set up disaster recovery environments on demand

- Add capacity when you need it

Geographic Separation That’s Easy

OpenMetal has data centers in California, Virginia, Netherlands, and Singapore. Put your primary cluster in one location and your backup in another:

- Protection against regional failures

- Meet data sovereignty requirements

- Faster disaster recovery

Picking the Right Setup for Your Workload

The best replication strategy depends on what you’re actually running. Here’s a practical guide:

Development and Staging

Go with: Replica 2 plus a backup cluster using erasure coding

Dev and staging environments care more about cost than absolute maximum resilience. Replica 2 gives you:

- 50% usable capacity means less hardware

- Faster writes help your CI/CD pipelines

- Good enough protection for non-production stuff

Pair it with a backup cluster running erasure coding (4+2 or 6+3) to keep costs down while maintaining the ability to recover from mistakes.

You’ll save: 30-50% compared to running everything with replica 3, and you still have proper backups.

Standard Production Workloads

Go with: Replica 3 plus a backup cluster

For most production applications, replica 3 is still the standard for good reasons:

- Handles two disk failures at once

- Keeps running during maintenance

- Gives you confidence when hardware acts up

Add a separate backup cluster with either replica 3 (for critical stuff) or erasure coding (to save money on the backup tier). Schedule regular snapshots of your volumes and filesystems.

Production on a Budget

Go with: Replica 2 with a mandatory replica 3 backup cluster

If storage costs are killing you but you need production reliability:

- Primary cluster with replica 2 maximizes usable space

- Mandatory backup cluster with replica 3 keeps your data safe

- More frequent snapshots compensate for lower primary resilience

The trade-off: you get 50% more usable capacity but might see brief unavailability if multiple disks fail at once. The replica 3 backup cluster ensures you can always recover.

Important: This needs solid monitoring and fast backup sync. Any problems with the primary cluster should trigger immediate backup verification.

Compliance-Heavy Industries (Healthcare, Finance, Legal)

Go with: Replica 3 with geo-separated replica 3 backup

Regulations often have specific requirements:

- Primary with replica 3 meets availability mandates

- Geographic backup separation satisfies disaster recovery rules

- Both clusters with replica 3 keeps audit trails intact

- Encryption everywhere for both clusters

For HIPAA, GDPR, or SOC 2, put your backup cluster in a different OpenMetal datacenter. This checks most regulatory boxes for geographic separation.

High-Performance Workloads (Databases, AI/ML)

Go with: Mixed replica counts by pool type

Ceph lets you use different replication for different pools in the same cluster:

- High-performance pools with replica 2 for database workloads

- Replica 3 for less performance-sensitive data

- Database replication (like MySQL async replication) adds another layer

- Backup cluster captures everything

This takes careful planning. You need to understand which workloads benefit from the extra performance and which ones need the extra protection.

Getting It Set Up

Once you’ve picked your strategy, here’s how to actually configure it:

Setting Your Replication Factor

For a new pool with replica 3:

ceph osd pool create production-pool 128 128 replicated

ceph osd pool set production-pool size 3

ceph osd pool set production-pool min_size 2

For replica 2:

ceph osd pool create staging-pool 128 128 replicated

ceph osd pool set staging-pool size 2

ceph osd pool set staging-pool min_size 2

Heads up: With min_size=2 on a replica 2 pool, if you lose one copy, I/O to the affected placement groups stops until you’re back to two copies. This prevents data loss but can affect availability. For replica 3 pools, min_size=2 lets things keep running when one disk is down.
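After changing these settings, it’s worth confirming what the cluster actually has. A quick sketch, assuming the pool names used in the commands above:

```shell
# Read back the replication settings for each pool
ceph osd pool get production-pool size
ceph osd pool get production-pool min_size
ceph osd pool get staging-pool size
ceph osd pool get staging-pool min_size

# Or list every pool with its size, min_size, and CRUSH rule in one go
ceph osd pool ls detail
```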

Setting Up Your Backup Cluster

Your backup cluster should be on completely separate hardware:

- Deploy a new Cloud Core, ideally in a different datacenter from your primary

- Pick your backup replication strategy (replica 3 or erasure coding)

- Set up backup sync using RBD mirroring, CephFS snapshots, or RGW sync

- Configure retention policies so you keep multiple snapshots

- Test your restores regularly—a backup you can’t restore is worthless
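If you go the erasure coding route for the backup cluster, the pool setup could look something like the sketch below. The profile name `backup-ec-4-2` and pool name `backup-ec-pool` are made up for illustration. One assumption to call out: a 4+2 profile writes six chunks per object, so on a three-server cluster they can’t each land on a separate host. This sketch uses `crush-failure-domain=osd` to make placement possible, which means a single server failure can take out more than one chunk. Check that trade-off against your own node count before using it.

```shell
# Define a 4+2 erasure-code profile (hypothetical name), spreading chunks across OSDs
ceph osd erasure-code-profile set backup-ec-4-2 k=4 m=2 crush-failure-domain=osd

# Inspect the profile before building a pool on it
ceph osd erasure-code-profile get backup-ec-4-2

# Create an erasure-coded pool that uses the profile
ceph osd pool create backup-ec-pool 128 128 erasure backup-ec-4-2

# Needed if RBD or CephFS data will live on the EC pool;
# RBD image metadata still has to sit in a replicated pool
ceph osd pool set backup-ec-pool allow_ec_overwrites true
```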

Snapshot and Backup Strategies

For RBD block storage:

# Create a snapshot on primary (build the name once so create and export use the same snapshot)

SNAP="backup-$(date +%Y%m%d-%H%M)"

rbd snap create production-pool/volume-name@"$SNAP"

# Copy it to backup cluster

rbd export production-pool/volume-name@"$SNAP" - | rbd import - backup-pool/volume-name --cluster backup-cluster

For CephFS:

# Create a CephFS snapshot

ceph fs subvolume snapshot create <fs-name> <subvol> <snap-name>

For S3/Swift via RADOS Gateway:

- Set up RGW multi-site replication to your backup cluster

- Use bucket versioning for point-in-time recovery

- Set lifecycle policies for automatic archival
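For the RBD workflow earlier in this section, a full export every time gets slow as volumes grow. One common alternative is to ship only the changes between two snapshots with `rbd export-diff` and `rbd import-diff`. The sketch below assumes the backup image already exists and already carries the previous snapshot; the snapshot name in `PREV` is a placeholder, so substitute the name of your last successful backup.

```shell
POOL=production-pool
IMAGE=volume-name
PREV=backup-20250101-0000              # placeholder: last snapshot already on the backup cluster
NEW="backup-$(date +%Y%m%d-%H%M)"      # name for the new snapshot

# Take the new snapshot on the primary cluster
rbd snap create "$POOL/$IMAGE@$NEW"

# Send only the blocks that changed between PREV and NEW;
# import-diff applies them and creates the NEW snapshot on the backup image
rbd export-diff --from-snap "$PREV" "$POOL/$IMAGE@$NEW" - | \
    rbd --cluster backup-cluster import-diff - "backup-pool/$IMAGE"
```

Because each diff depends on the previous snapshot existing on both sides, don’t prune a snapshot on either cluster until a newer one has been transferred successfully.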

Monitoring Both Clusters

Keep an eye on:

- OSD failures before they become capacity problems

- Rebalancing progress when you’re recovering from failures

- Backup sync status to make sure backups are current

- Capacity trends so you know when you need more space

OpenMetal provides monitoring tools built into your private cloud. You can also use standard Ceph monitoring with Prometheus, Grafana, and the Ceph Manager dashboard.
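If you prefer the command line, a few standard Ceph commands cover most of the list above. This is a sketch, not a full monitoring setup; `backup-cluster` is the cluster name assumed in the backup commands earlier, and the last command only applies if you chose RBD mirroring.

```shell
# Overall state, including recovery and rebalancing progress
ceph status
ceph health detail

# Per-OSD utilization laid out by host, to spot failed or overfull OSDs
ceph osd df tree

# Pool-level capacity, useful for tracking growth over time
ceph df

# Run the same checks against the backup cluster
ceph --cluster backup-cluster status

# Replication health for a mirrored pool (RBD mirroring only)
rbd mirror pool status production-pool
```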

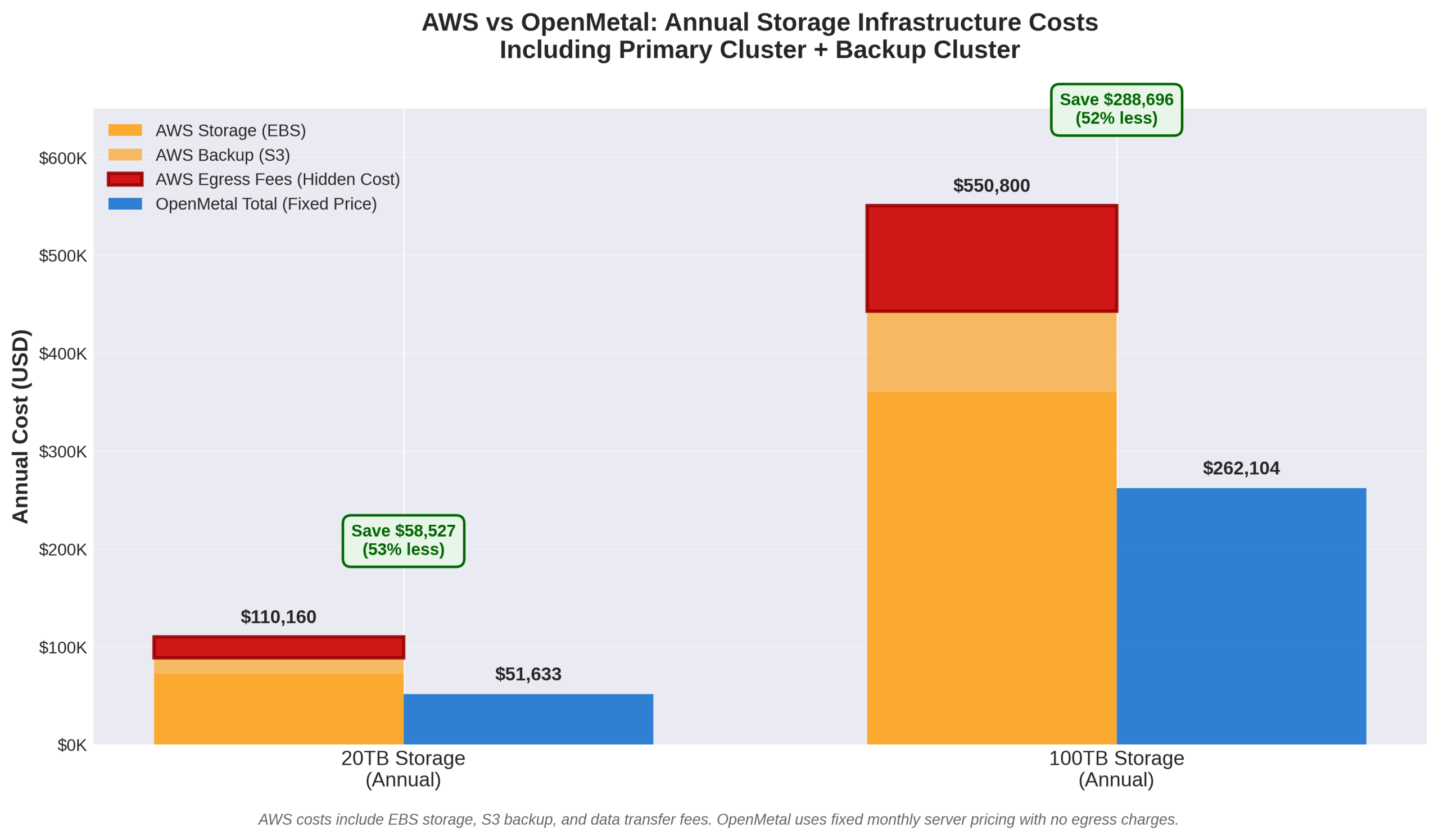

What This Actually Costs

Let’s look at real numbers for a 20TB production storage setup:

AWS Approach

- Primary storage: 60TB EBS volumes @ $0.10/GB = $6,000/month

- Backup: 60TB S3 @ $0.023/GB = $1,380/month

- Monthly backup sync: 20TB transfer @ $0.09/GB = $1,800/month

- Monthly: $9,180

- Yearly: $110,160

OpenMetal Approach

Using OpenMetal’s fixed monthly server pricing:

Primary cluster: 3x Large v4 servers (replica 3)

- 3 servers @ $938.88/month each = $2,816.64/month

- Storage: 6.4TB NVMe drives, 19.2TB raw per server, 57.6TB raw total

- With replica 3: 19.2TB usable capacity

- 512GB RAM, dual Xeon Gold 6526Y CPUs per server

- 20Gbps private bandwidth per server

Backup cluster: 3x Medium v4 servers (4+2 erasure coding)

- 3 servers @ $495.36/month each = $1,486.08/month

- Storage: 6.4TB NVMe drives, 19.2TB raw per server, 57.6TB raw total

- With 4+2 erasure coding: 38.4TB usable (enough for multiple snapshot generations)

- 256GB RAM, dual Xeon Silver 4510 CPUs per server

Total OpenMetal Monthly Cost: $4,302.72

Total OpenMetal Yearly Cost: $51,632.64

You save: $58,527 per year (53% reduction)
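You can rerun this comparison with your own numbers. The sketch below just reproduces the arithmetic from the two lists above, using the prices quoted in this article and decimal units (1TB = 1,000GB). The variable names are made up for illustration.

```shell
# Monthly totals from the figures above
aws_monthly=$(awk 'BEGIN { printf "%.2f", 60000 * 0.10 + 60000 * 0.023 + 20000 * 0.09 }')
om_monthly=$(awk 'BEGIN { printf "%.2f", 3 * 938.88 + 3 * 495.36 }')

# Yearly totals, savings, and savings as a share of the AWS figure
awk -v aws="$aws_monthly" -v om="$om_monthly" 'BEGIN {
    printf "AWS yearly:       %.2f\n", aws * 12
    printf "OpenMetal yearly: %.2f\n", om * 12
    printf "Yearly savings:   %.2f (%.0f%%)\n", (aws - om) * 12, (aws - om) / aws * 100
}'
```

Swap in your own storage sizes, sync volume, and server counts to see how the gap changes for your workload.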

Key advantages beyond just cost:

- No egress charges for backup sync or restoration

- Fixed costs that don’t spike during recovery scenarios

- All-NVMe storage on both clusters for faster backups and restores

- 20Gbps private networking per server for the primary cluster

- Deploy backup cluster in different datacenter (VA, CA, Netherlands, Singapore) at same price

The Economic Advantage Grows with Scale

As your storage needs increase, the savings multiply. Here’s a 100TB usable capacity comparison:

AWS at 100TB usable:

- Primary: 300TB EBS @ $0.10/GB = $30,000/month

- Backup: 300TB S3 @ $0.023/GB = $6,900/month

- Monthly sync: 100TB transfer @ $0.09/GB = $9,000/month

- Total: $45,900/month ($550,800 annually)

OpenMetal at 100TB usable:

- Primary: 6x XL v4 servers (replica 3) @ $1,589.76 each = $9,538.56/month

- Total: 153.6TB raw (6 servers × 4x 6.4TB NVMe), 51.2TB usable

- Primary: 3x XXL v4 servers (replica 3) @ $2,223.36 each = $6,670.08/month

- Total: 115.2TB raw (3 servers × 6x 6.4TB NVMe), 38.4TB usable

- Combined primary usable: 89.6TB with replica 3 (about 10TB short of the 100TB target)

- Backup: 6x Large v4 servers (4+2 erasure coding) @ $938.88 each = $5,633.28/month

- Total: 115.2TB raw, 76.8TB usable (enough for multiple snapshots)

Total OpenMetal: ~$21,842/month ($262,104 annually)

You save: $288,696 per year (52% reduction)

The larger you scale, the larger the savings in absolute dollars, all while maintaining all-NVMe performance and eliminating egress-related surprises.

Mistakes to Avoid

Don’t Treat Replicas as Backup

The biggest mistake: thinking more replicas means you don’t need backups. Even with replica 5, you’re not protected from deletions, corruption, or cluster-wide failures.

Don’t Use min_size=1 with Replica 2

Setting min_size=1 creates risks. If you lose one copy and the remaining copy has problems during recovery, you could lose data. Always use min_size=2 for replica 2 setups.

Don’t Fill Your Cluster Too Full

Both replica 2 and replica 3 need room to rebalance after failures. A three-node cluster at 70% capacity can’t redistribute data when a node goes down. Keep 30-40% free as breathing room.
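Here’s a rough way to see where that limit comes from. If one node fails and its data has to be rebuilt on the survivors, the same amount of data now sits on fewer nodes. The sketch assumes equally sized nodes, evenly spread data, and a layout where Ceph can actually re-place the lost copies; `post_failure_pct` is a hypothetical helper name.

```shell
# post_failure_pct NODES USED_PCT
# Prints the utilization (%) of the surviving nodes after one node's data is rebuilt on them.
post_failure_pct() {
    awk -v n="$1" -v used="$2" 'BEGIN { printf "%.0f\n", used * n / (n - 1) }'
}

post_failure_pct 3 70   # three nodes at 70% full
post_failure_pct 3 60   # three nodes at 60% full
post_failure_pct 6 70   # six nodes at 70% full
```

Three nodes at 70% would need 105% of the remaining space, which is impossible. Even at 60% the survivors end up at 90%, past Ceph’s default nearfull warning threshold of 85%, so more nodes or more free space both buy you real safety margin.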

Don’t Skip Backup Testing

A backup you can’t restore is just expensive storage. Test your restoration process regularly. Time how long it takes and make sure it actually works.

Don’t Ignore Network Capacity

Replication lives and dies by network performance. OpenMetal’s dual 10Gbps private links (20Gbps total per server) ensure replication and backups don’t bottleneck. Skimping on network infrastructure kills your performance.

Don’t Put Backups in the Same Datacenter

Backup cluster in the same datacenter as your primary? That defeats half the purpose. Regional failures, power outages, or disasters can take out both at once.

The Bottom Line

Here’s what you need to remember: replica count and backup architecture are two different things.

Replication = Staying Online When Hardware Fails

- Replica 2: More space and speed, handles single disk failures

- Replica 3: Industry standard, handles two disk failures

Separate Backup Cluster = Protection Against Everything Else

- Corruption, accidental deletions, admin mistakes

- Ransomware and security incidents

- Cluster-wide failures

- Meeting compliance requirements

OpenMetal makes it actually affordable to do backups right. Fixed monthly pricing means you can run both primary and backup clusters without the variable costs that kill you on hyperscalers. No egress fees that turn restoration into a budget disaster.

For most companies, the best setup is:

- Primary cluster with replica 3 (or replica 2 if you need the capacity)

- Backup cluster in a different datacenter with replica 3 or erasure coding

- Regular snapshots synced to backup with proper retention

- Tested restore procedures so you know backups actually work

- Monitoring on both clusters to catch problems early

Ready to Get Started?

Want to set up proper Ceph replication and backup architecture? Here’s how OpenMetal makes it easy:

- Try it free – Test different replication strategies without committing to anything

- Deploy in 45 seconds – Spin up backup clusters fast so you can experiment

- Get real engineering help – Talk to our engineers in dedicated Slack channels

- Know what you’ll pay – Fixed transparent pricing with no surprise costs

Pick replica 2 or replica 3 for your primary cluster. Either way, OpenMetal’s infrastructure lets you afford real backups with a completely separate cluster. That’s the kind of protection that actually works when things go wrong.
