In this article

- Understanding Healthcare Data Lake Requirements

- Why Ceph Storage for Healthcare Data Lakes

- Building Your HIPAA-Compliant Architecture

- Implementing Security and Access Controls

- Medical Imaging Storage at Scale

- Analytics and AI/ML Integration

- Monitoring, Auditing, and Compliance Reporting

- Cost Management for Healthcare Data Lakes

- Getting Started

Healthcare organizations face an unprecedented challenge: managing massive volumes of medical imaging, electronic health records, and real-time patient monitoring data while maintaining strict regulatory compliance. According to recent studies, 95% of non-federal acute care hospitals now maintain certified Electronic Health Record (EHR) technology, with 88% routinely sharing patient care summaries with external organizations and 84% actively engaged in electronic case reporting. This digital transformation demands infrastructure that can handle petabyte-scale storage while meeting HIPAA’s stringent security requirements.

A HIPAA-compliant data lake provides the foundation for modern healthcare data management, enabling organizations to store diverse data types—from DICOM medical images to unstructured clinical notes—in a unified, secure environment. This guide explores how OpenMetal’s Ceph-based infrastructure delivers the storage architecture, security controls, and cost predictability healthcare organizations need to build compliant data lakes at scale.

Understanding Healthcare Data Lake Requirements

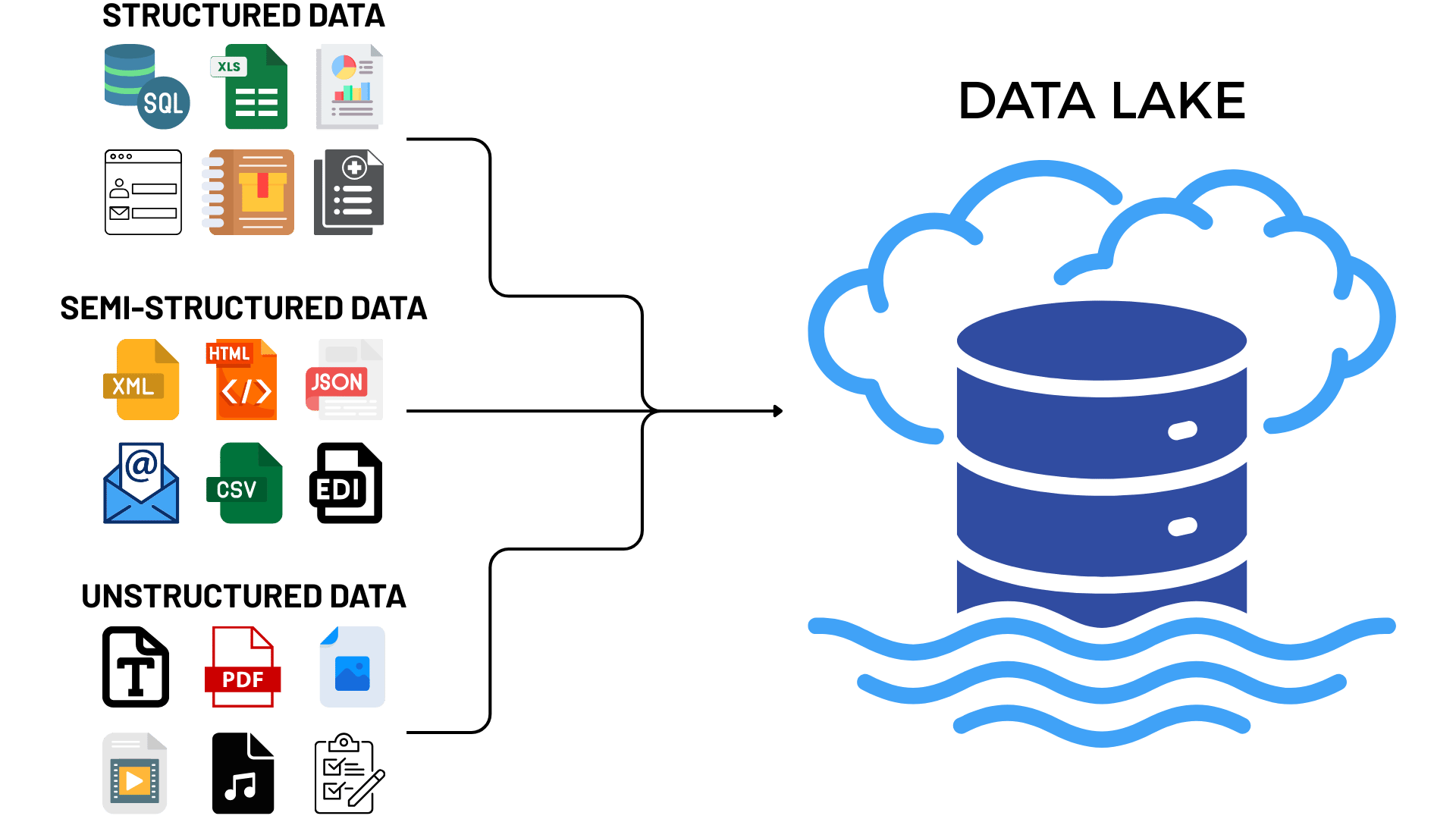

A HIPAA-compliant data lake is a secure, scalable, and governed environment designed to meet regulatory requirements, combining flexible storage for diverse healthcare data types with the strict security controls mandated by federal law.

The Challenge of Healthcare Data Volume and Variety

Healthcare organizations struggle with managing the velocity of data generation, with 82% of reviewed studies noting challenges in real-time data processing and integration, while security and privacy concerns were prominent in 89% of analyzed cases.

The data variety challenge is particularly acute. Research indicates that 78% of healthcare organizations reported difficulties in standardizing data formats across different systems, while 71% struggled with data cleaning and validation processes, and 63% faced challenges in managing unstructured data.

Medical imaging represents one of the largest storage consumers in healthcare environments. A single CT scan generates 300-500 MB of data, while 3D mammography produces 1-2 GB per exam. As patient populations grow and imaging technology advances, healthcare organizations routinely find themselves managing petabyte-scale deployments. Traditional storage systems struggle to provide the scalability, performance, and cost-effectiveness required for these workloads.

Beyond imaging, healthcare data lakes must accommodate:

- Structured data: Electronic health records, billing systems, lab results, pharmacy orders

- Semi-structured data: HL7 and FHIR messages, clinical decision support outputs, device logs

- Unstructured data: Clinical notes, research datasets, genomic sequences, patient-generated health data

Key components of a HIPAA-compliant data lake include a data ingestion layer that ensures data from various sources like EHRs and medical devices is securely ingested, a storage layer supporting encryption at rest with restricted access, robust data governance with policies for managing data access and retention, comprehensive data security including encryption and monitoring, and analytics tools that maintain compliance with HIPAA’s minimum necessary rule.

HIPAA Compliance: Beyond Basic Security

HIPAA compliance isn’t simply about encrypting data at rest; it requires a comprehensive approach to protecting Protected Health Information (PHI) throughout its entire lifecycle. Healthcare organizations must implement specific security controls at each cloud infrastructure layer, including physical, network, host, application, and data levels, with particular emphasis on protecting Electronic Health Records and Personal Health Information through sophisticated access control mechanisms and encryption protocols.

The Health Care and Public Health Sector Cybersecurity Framework identifies five core functions that form the foundation of a comprehensive security strategy: Identify, Protect, Detect, Respond, and Recover. Healthcare organizations must implement risk assessment procedures for all critical assets, maintain detailed asset inventories, establish clear data classification schemes, implement access control systems based on the principle of least privilege, enable continuous monitoring of system activities, conduct regular security assessments, and maintain comprehensive audit logs.

The financial stakes are significant. The healthcare sector continues to bear the highest cost burden of data breaches across all industries, with the average total cost reaching $10.93 million per breach, representing a large increase from previous years.

Why Ceph Storage for Healthcare Data Lakes

Ceph’s distributed storage architecture solves a fundamental challenge in healthcare data management: providing a single platform that delivers block, object, and file storage simultaneously. This unified storage layer eliminates the complexity and cost of managing separate storage systems for different data types.

Unified Storage for Diverse Healthcare Workloads

A single Ceph cluster on OpenMetal infrastructure provides three storage interfaces:

Block Storage (RBD) powers DICOM medical imaging workloads, providing the low-latency access PACS systems require for rapid image retrieval. Each volume can be attached directly to virtual machines running imaging applications, with performance characteristics suitable for active clinical use.

Object Storage (RADOS Gateway) delivers S3-compatible storage for long-term archival, integrating seamlessly with vendor-neutral archives and third-party analytics platforms. The S3 API compatibility means healthcare organizations can use standard tools and libraries without custom integration work.

File Storage (CephFS) handles unstructured clinical notes, research data, and shared file systems where multiple applications need concurrent access to the same data. CephFS provides POSIX-compliant file system semantics, making it straightforward to migrate existing file-based workflows.

This architectural unification means your infrastructure team manages a single storage platform rather than juggling block storage arrays, object stores, and NAS devices. The operational simplicity translates directly to reduced complexity in compliance audits, as all storage operates under consistent security policies and access controls.

Scalability That Matches Healthcare Growth

Ceph’s architecture scales horizontally by adding storage nodes to the cluster. There’s no proprietary controller bottleneck limiting performance or capacity. As your imaging archives grow from hundreds of terabytes to multiple petabytes, you simply add additional storage servers to the cluster.

OpenMetal customers routinely operate petabyte-scale Ceph deployments, with the flexibility to mix NVMe-based servers for performance-sensitive workloads alongside high-capacity HDD configurations for cost-effective long-term retention. This mixed deployment model enables sophisticated tiering strategies where frequently accessed images reside on fast storage while older studies automatically migrate to economical capacity-optimized nodes.

Ceph’s Data Integrity Features for PHI

HIPAA requires maintaining the integrity of ePHI and implementing mechanisms to verify that data hasn’t been altered or destroyed in an unauthorized manner. Ceph addresses these requirements through:

Replication ensures data durability by maintaining multiple copies across different physical servers. Healthcare organizations typically configure size=3 replication for production PHI, meaning three complete copies of every object exist across the cluster. If a disk fails or a server goes offline, data remains accessible from other replicas while Ceph automatically rebuilds the missing copy.

Erasure coding provides a more storage-efficient alternative for archival data, using mathematical techniques to split data into fragments with calculated redundancy. An 8+3 erasure coding scheme, for example, divides data into 8 data fragments and 3 parity fragments, tolerating the loss of any 3 fragments while using only 37.5% overhead compared to 200% for three-way replication.
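The overhead figures above follow directly from the fragment counts. The short sketch below works through the arithmetic; the function names and the 1,000 TB example are illustrative assumptions, not part of any Ceph tooling.

```python
def erasure_coding_overhead(data_chunks: int, parity_chunks: int) -> float:
    """Extra raw capacity per unit of usable data, as a fraction (k+m scheme)."""
    if data_chunks <= 0 or parity_chunks < 0:
        raise ValueError("need at least one data chunk and non-negative parity")
    return parity_chunks / data_chunks


def replication_overhead(copies: int) -> float:
    """Replication stores (copies - 1) additional full copies of the data."""
    if copies < 1:
        raise ValueError("need at least one copy")
    return float(copies - 1)


def raw_capacity_tb(usable_tb: float, overhead: float) -> float:
    """Raw capacity needed to hold usable_tb of data at the given overhead."""
    return usable_tb * (1.0 + overhead)


ec = erasure_coding_overhead(8, 3)   # 3 parity / 8 data
rep = replication_overhead(3)        # 2 extra copies

print(f"8+3 erasure coding overhead: {ec:.1%}")
print(f"3x replication overhead:     {rep:.1%}")
# Hypothetical archive size, chosen only to make the difference concrete.
print(f"Raw TB for 1000 TB usable, 8+3: {raw_capacity_tb(1000, ec):.0f}")
print(f"Raw TB for 1000 TB usable, 3x:  {raw_capacity_tb(1000, rep):.0f}")
```

The same usable capacity needs 1,375 TB of raw disk under 8+3 erasure coding versus 3,000 TB under three-way replication, which is why erasure coding is usually reserved for large, rarely modified archives.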

Scrubbing continuously verifies data integrity by comparing object metadata and checksums across replicas, detecting bit rot or corruption before it impacts clinical operations. By default, Ceph performs lightweight scrubs daily and deep scrubs weekly, so integrity issues are flagged promptly and can be repaired from healthy replicas.

Full Root Access for Custom HIPAA Configurations

Unlike managed cloud storage services where you’re confined to the provider’s security controls, OpenMetal provides full root access to your Ceph clusters. This control is important for healthcare organizations that need to implement specific security configurations to meet their HIPAA risk assessments.

You control:

- Encryption at rest using AES-256, with the ability to implement key rotation schedules that match your organization’s security policies

- Custom replication rules defining how many copies of PHI exist and where those copies are physically located

- Network segmentation using dedicated VLANs to isolate PHI workloads from other systems

- Access policies at the bucket, object, and user levels, with granular permissions matching your organizational hierarchy

This flexibility extends to compliance tooling. You can deploy your organization’s preferred security information and event management (SIEM) systems, intrusion detection solutions, and compliance scanning tools directly within your infrastructure rather than relying solely on provider-level abstractions.

Building Your HIPAA-Compliant Architecture

Data lakes are complex ecosystems consisting of multiple layers working in harmony to store, process, and analyze information, including data ingestion, storage, processing, cataloging and governance, query and analytics, and security and access control.

Architecture Overview

A production-ready healthcare data lake on OpenMetal combines several architectural components:

- Storage Layer: Ceph cluster with mixed NVMe and HDD nodes, providing the unified block, object, and file storage foundation

- Compute Layer: OpenStack virtual machines running analytics workloads, PACS components, and application servers

- Network Layer: Dedicated 20Gbps private networking with VLAN segmentation isolating PHI traffic

- Security Layer: OpenStack Keystone for identity management, Barbican for key management, Neutron security groups for network-level access control

- Audit Layer: Centralized logging infrastructure capturing every API call, data access, and administrative action

Data Ingestion Strategies

The data ingestion layer serves as the entry point for all incoming data, gathering information from various sources through batch processes or real-time streaming.

For medical imaging, DICOM receivers integrate with Ceph’s S3-compatible object storage through standard APIs. PACS systems can write directly to RADOS Gateway endpoints, eliminating the need for intermediate staging storage. When the ingesting application attaches metadata such as patient identifiers, study dates, and modality types to each object, images become searchable and retrievable by those attributes.

Clinical data from EHR systems typically arrives through scheduled ETL pipelines. Apache NiFi running on OpenStack instances can orchestrate data extraction from HL7 interfaces, transform messages into FHIR resources, and write to Ceph object storage for long-term retention. The same pipelines can feed structured data into analytics databases for real-time querying.

Real-time patient monitoring generates continuous data streams from ICU devices, wearables, and remote monitoring systems. Apache Kafka clusters deployed on OpenStack provide the message buffering and streaming infrastructure to ingest this data, with consumers writing to both Ceph object storage for archival and real-time analytics platforms for immediate processing.

Storage Tier Design

Implementing a multi-tier storage strategy requires understanding access patterns for different data types:

Hot tier (NVMe-based Ceph nodes): Active imaging studies from the past 30-90 days, frequently accessed EHR data, real-time analytics datasets. Sub-millisecond latency for immediate clinical access.

Warm tier (SSD-based or mixed Ceph nodes): Imaging studies from 90 days to 2 years, historical clinical data for population health analytics, research datasets in active use. Optimizes cost while maintaining reasonable access times.

Cold tier (HDD-based Ceph nodes with erasure coding): Imaging archives beyond 2 years, completed research studies, long-term compliance data. Prioritizes storage efficiency over access speed, meeting legal retention requirements at minimal cost.

Ceph’s cache tiering automatically promotes frequently accessed objects from cold storage to faster tiers, while aging cold data moves to economical HDD arrays. This automation ensures clinical users always experience appropriate performance regardless of an image’s age.
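As a rough illustration of the age-based tiers described above, the sketch below maps a study date to a tier name. The thresholds take the upper end of the 30-90 day hot window and approximate two years as 730 days; the function and constant names are hypothetical, and in practice placement would be handled by Ceph pools and policies rather than application code.

```python
from datetime import date

HOT_MAX_DAYS = 90          # upper end of the 30-90 day hot window
WARM_MAX_DAYS = 2 * 365    # "90 days to 2 years", approximated as 730 days


def select_tier(study_date: date, today: date) -> str:
    """Return the storage tier suggested by the age of an imaging study."""
    age_days = (today - study_date).days
    if age_days < 0:
        raise ValueError("study_date is in the future")
    if age_days <= HOT_MAX_DAYS:
        return "hot"
    if age_days <= WARM_MAX_DAYS:
        return "warm"
    return "cold"


today = date(2024, 2, 1)
for study in (date(2024, 1, 1), date(2023, 6, 1), date(2021, 1, 1)):
    print(study.isoformat(), "->", select_tier(study, today))
```

A purely age-based rule like this is a starting point; access frequency usually matters as well, which is what the automated promotion described above accounts for.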

Integration with OpenStack Services

OpenStack integration transforms Ceph from simple storage into a comprehensive cloud platform with the security and compliance features healthcare organizations require.

Keystone provides the identity management foundation with role-based access control (RBAC) for granular PHI access permissions. You define roles matching your organizational structure—radiologist, referring physician, research analyst—and assign permissions accordingly. Keystone federates with existing Active Directory or LDAP systems, maintaining a single source of truth for user identities.

Barbican manages encryption keys separately from encrypted data, supporting the separation of keys from data that HIPAA risk assessments commonly call for. When Ceph encrypts data at rest, Barbican stores and rotates the encryption keys, ensuring that compromising the storage system alone doesn’t expose PHI.

Neutron enforces network-level access controls through security groups that function as automated firewalls. You define rules specifying which systems can communicate with your Ceph storage, which networks can reach analytics workloads, and how data flows between components. These infrastructure-enforced controls provide defense in depth beyond application-level authentication.

Heat automates infrastructure deployment through templates defining your entire stack as code. This automation ensures consistent security configurations across development, staging, and production environments, reducing the risk of misconfiguration-related breaches.

Implementing Security and Access Controls

Healthcare organizations must implement specific measures including access control systems based on the principle of least privilege, continuous monitoring of system activities, regular security assessments, formal incident response procedures, comprehensive audit logs, secure configuration management practices, and detailed documentation of security controls.

Encryption at Rest and in Transit

All data written to Ceph storage undergoes AES-256 encryption before reaching physical disks. This encryption happens transparently—applications write data normally, and Ceph handles encryption and decryption automatically based on your security policies.

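For data in transit, encryption protects all communication channels, with TLS 1.3 on HTTPS endpoints and protocol-native encryption elsewhere (for example, SSH for administrative sessions):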

- Client applications to RADOS Gateway endpoints

- OpenStack API calls between services

- Replication traffic between Ceph nodes

- Admin SSH sessions to infrastructure components

The unmetered 20Gbps private networking between OpenMetal servers means encryption doesn’t introduce prohibitive performance penalties. Data moves between systems at line speed while maintaining end-to-end encryption.

Role-Based Access Control

Implementing least-privilege access requires mapping your organizational roles to technical permissions. A typical healthcare data lake includes:

Clinical Users receive read-only access to imaging and clinical data relevant to their patients. PACS integrations enforce these restrictions automatically, displaying only studies the user is authorized to view based on their relationship with the patient.

Research Personnel access de-identified datasets through restricted S3 buckets. They can download data for analysis but cannot modify source records or access identifiable PHI.

Administrative Staff manage billing and operational data without accessing clinical information. Their permissions extend to administrative systems but not to clinical data repositories.

IT Operations have elevated privileges for system maintenance but operate under the principle of least privilege—they can manage infrastructure without routinely accessing PHI.

Audit and Compliance personnel receive read-only access to logs and audit trails, enabling oversight without operational system access.

OpenStack Keystone enables these permission structures through project isolation and role assignments, while Ceph bucket policies enforce object-level access controls.
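To make the role mapping concrete, here is a minimal least-privilege check in plain Python. It is a conceptual sketch only: the role names, actions, and resource classes are hypothetical labels for the roles listed above, and this is not Keystone policy syntax or a Ceph bucket policy.

```python
# Each role is granted an explicit set of (action, resource_class) pairs.
# Anything not listed is denied, which is the essence of least privilege.
ROLE_PERMISSIONS = {
    "clinical_user": {("read", "imaging"), ("read", "clinical")},
    "research": {("read", "deidentified")},
    "admin_staff": {("read", "billing"), ("write", "billing")},
    "it_operations": {("manage", "infrastructure")},
    "audit": {("read", "audit_logs")},
}


def is_allowed(role: str, action: str, resource_class: str) -> bool:
    """Return True only if the role was explicitly granted this permission."""
    return (action, resource_class) in ROLE_PERMISSIONS.get(role, set())


checks = [
    ("clinical_user", "read", "imaging"),
    ("research", "read", "clinical"),        # identifiable PHI: denied
    ("it_operations", "read", "clinical"),   # ops manage systems, not PHI
    ("unknown_role", "read", "billing"),     # unknown roles get nothing
]
for role, action, resource in checks:
    print(f"{role:14} {action:6} {resource:12} -> {is_allowed(role, action, resource)}")
```

Keeping the grants in one explicit table makes it easy to review during an audit: every permission a role holds is visible in a single place.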

Network Segmentation and Security Groups

VLAN segmentation creates isolated network paths for different traffic types. OpenMetal’s infrastructure supports dedicated VLANs that separate:

- PHI storage traffic from general network traffic

- Clinical application traffic from research workloads

- Management interfaces from production systems

- Backup and replication from primary data paths

Neutron security groups provide stateful firewall rules at the virtual machine level. Default-deny policies ensure that only explicitly permitted traffic reaches your instances. You define rules like:

- PACS servers can reach Ceph RADOS Gateway on port 443

- Analytics clusters can access object storage on S3 endpoints

- Monitoring systems can poll metrics from all infrastructure

- Management stations can SSH to administrative interfaces

These controls operate at the hardware layer through OpenStack’s integration with physical networking equipment, providing performance and security that software-only solutions can’t match.
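The default-deny behavior described above can be modeled in a few lines. This is a simplified sketch of how an allow-list of ingress rules is evaluated, not Neutron's implementation; the subnet and rule values are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class IngressRule:
    source_cidr: str   # network allowed to connect
    protocol: str      # e.g. "tcp"
    port: int          # destination port


# Hypothetical rule set for a RADOS Gateway instance:
# only the PACS subnet may reach HTTPS on port 443.
RGW_RULES = [IngressRule("10.10.20.0/24", "tcp", 443)]


def is_permitted(rules, source_ip: str, protocol: str, port: int) -> bool:
    """Default deny: traffic passes only if some rule explicitly matches it."""
    src = ip_address(source_ip)
    return any(
        src in ip_network(rule.source_cidr)
        and protocol == rule.protocol
        and port == rule.port
        for rule in rules
    )


print(is_permitted(RGW_RULES, "10.10.20.15", "tcp", 443))  # PACS server
print(is_permitted(RGW_RULES, "10.10.30.15", "tcp", 443))  # other subnet
print(is_permitted(RGW_RULES, "10.10.20.15", "tcp", 22))   # wrong port
```

Because there is no "deny" rule type in this model, the only way to open a path is to add an explicit allow rule, which keeps the reviewed rule list equal to the full set of permitted traffic.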

Audit Logging and Activity Monitoring

Healthcare organizations must maintain audit logs that record and examine system activity, including user logins, file accesses, and security incidents, with mechanisms to authenticate ePHI and verify that it has not been altered or destroyed in an unauthorized manner.

Every interaction with your healthcare data lake generates audit events:

- Authentication attempts: Successful and failed login attempts, session duration, source IP addresses

- Data access: Object reads and writes, API calls, query execution

- Administrative actions: Configuration changes, user permission modifications, system updates

- Security events: Failed authentication attempts, unauthorized access attempts, security group changes

These logs flow to centralized infrastructure where SIEM tools analyze events for compliance violations or security incidents. The audit trail provides the documentation required for HIPAA compliance audits, demonstrating that you monitor access to PHI and can identify unauthorized access attempts.

OpenMetal infrastructure maintains audit logs independently of the systems they monitor. Even if an attacker compromises an application server, they cannot modify audit records documenting their activities.

Medical Imaging Storage at Scale

Medical imaging presents unique storage challenges that Ceph’s architecture addresses directly. Understanding these workloads helps optimize your data lake configuration.

DICOM Storage Requirements

Picture Archiving and Communication Systems (PACS) traditionally relied on expensive, proprietary storage arrays. Ceph provides a standards-based alternative that integrates through S3 APIs, which most modern PACS solutions support natively.

The RADOS Gateway presents an S3-compatible endpoint where PACS systems write DICOM objects. Each study becomes an S3 object with metadata tags for patient identifiers, study dates, modalities, and referring physicians. This object model maps naturally to DICOM’s structure while enabling efficient searching and retrieval.
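One way to picture this object model is to build the request a client would send. The sketch below assembles the arguments for an S3 `put_object` call; the key layout, metadata names, bucket, and UID are all hypothetical choices for illustration, and most PACS products will use their own conventions. Keep in mind that metadata carrying patient identifiers is itself PHI and falls under the same access controls as the object.

```python
def build_dicom_put_request(bucket, study_uid, patient_id, study_date,
                            modality, referring_physician, dicom_bytes):
    """Build keyword arguments for an S3 put_object call for one DICOM object.

    study_date is expected in DICOM DA format (YYYYMMDD).
    """
    if len(study_date) != 8 or not study_date.isdigit():
        raise ValueError("study_date must be in YYYYMMDD format")
    return {
        "Bucket": bucket,
        # Hypothetical key layout: year / modality / study UID
        "Key": f"studies/{study_date[:4]}/{modality}/{study_uid}.dcm",
        "Body": dicom_bytes,
        "ContentType": "application/dicom",
        "Metadata": {
            "patient-id": patient_id,
            "study-date": study_date,
            "modality": modality,
            "referring-physician": referring_physician,
        },
    }


request = build_dicom_put_request(
    bucket="imaging-archive",        # hypothetical bucket name
    study_uid="1.2.840.99999.1",     # hypothetical study UID
    patient_id="P000123",
    study_date="20240115",
    modality="CT",
    referring_physician="DR-4471",
    dicom_bytes=b"",                 # placeholder payload
)
print(request["Key"])
print(sorted(request["Metadata"]))
```

With boto3 installed and a client created against your RADOS Gateway endpoint (for example, `boto3.client("s3", endpoint_url=...)`), the resulting dictionary could be passed as `s3.put_object(**request)`.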

For organizations migrating from traditional PACS storage, Ceph’s S3 interface simplifies the transition. Your existing PACS software requires only configuration changes to point at new storage endpoints—no application rewrites or complex data migration procedures.

Performance Considerations for Active Archives

Active imaging archives require balancing performance with cost. Radiologists expect sub-second image retrieval, while administrators seek to minimize storage expenses.

OpenMetal’s NVMe storage servers deliver the performance characteristics active archives demand. Sub-millisecond latency for small object reads ensures that PACS workstations display images immediately. High IOPS capability handles the parallel access patterns that occur when multiple radiologists retrieve different studies simultaneously.

For peak performance scenarios—trauma centers, busy radiology departments, teleradiology services—configure Ceph pools with NVMe-only storage. The cost premium for NVMe becomes worthwhile when clinical workflows depend on immediate image access.

Cost-Effective Long-Term Retention

HIPAA doesn’t specify retention periods for medical imaging, but state laws, Medicare conditions of participation, and liability concerns typically drive 7-10 year retention policies. Some organizations retain imaging indefinitely.

HDD-based Ceph nodes with erasure coding provide economical long-term storage. High-capacity drives (14-18TB) maximize density while erasure coding reduces the storage overhead compared to replication. An 8+3 erasure coding scheme stores 11 fragments across 11 drives, providing durability similar to three-way replication but using 37.5% overhead instead of 200%.

Ceph’s lifecycle policies can automatically transition older studies from replicated hot storage to erasure-coded cold storage. After 90 days, imaging studies move to economical archival storage automatically, balancing access requirements with cost optimization.
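A transition rule of this kind is typically expressed as an S3 lifecycle configuration. The sketch below builds one as a plain dictionary in the shape the S3 API expects; the prefix and the `COLD_EC` storage class name are assumptions, and the storage class would need to match one defined in your RADOS Gateway placement configuration.

```python
def build_lifecycle_config(prefix: str, days: int, storage_class: str) -> dict:
    """Build an S3 lifecycle configuration with a single transition rule."""
    if days < 0:
        raise ValueError("days must be non-negative")
    return {
        "Rules": [
            {
                "ID": f"archive-after-{days}-days",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": days, "StorageClass": storage_class},
                ],
            }
        ]
    }


# Hypothetical values: move objects under "studies/" after 90 days
# to a storage class backed by an erasure-coded pool.
config = build_lifecycle_config("studies/", 90, "COLD_EC")
print(config["Rules"][0]["ID"])
print(config["Rules"][0]["Transitions"])
```

With boto3, a dictionary like this is passed as the `LifecycleConfiguration` argument of `put_bucket_lifecycle_configuration`. Test the rule on a non-production bucket first, since transitions apply to every object matching the prefix.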

Cache Tiering for Hybrid Storage

Ceph cache tiering creates an automated hybrid storage system where frequently accessed data remains on fast storage while cold data resides on economical storage.

Configure a cache pool using NVMe or SSD storage in front of your HDD-based archival pool. Ceph monitors access patterns and automatically:

- Promotes frequently accessed objects from HDD to SSD/NVMe

- Evicts rarely accessed objects from cache back to archival storage

- Flushes modified objects to backing storage for durability

This automation happens transparently. When a radiologist requests a year-old imaging study, Ceph retrieves it from HDD storage. If that study gets accessed repeatedly (perhaps for a complex surgical planning case), Ceph automatically promotes it to fast storage for subsequent retrievals. Once the case concludes and access frequency drops, Ceph evicts the data back to archival storage.

The result: frequently accessed imaging resides on fast storage automatically, regardless of the study’s age, while rarely accessed studies consume economical storage—all without manual intervention or complex tiering policies.

Analytics and AI/ML Integration

HIPAA-compliant data lakes enable real-time analytics for personalized treatment plans and proactive care management, accelerate medical research and the development of new treatments through access to diverse datasets, and provide regulatory readiness to ensure organizations are always prepared for audits.

Integrating Healthcare Analytics Platforms

Ceph’s S3 compatibility enables direct integration with modern analytics frameworks. Apache Spark running on OpenStack clusters can read data directly from object storage, process it in parallel across multiple nodes, and write results back to Ceph, all without complex data movement or transformation.

Population health analytics platforms connect to Ceph storage through standard S3 libraries. Your data scientists can access clinical datasets using Python boto3, R’s aws.s3 package, or analytics platforms’ built-in S3 connectors. The same storage infrastructure that archives DICOM images also powers sophisticated machine learning workflows.

For organizations using cloud analytics platforms like Databricks or Snowflake, OpenMetal’s infrastructure can replicate selected datasets to cloud storage for external processing while maintaining the master copy in your compliant data lake. This hybrid approach enables cloud analytics capabilities without moving all PHI to public cloud environments.

De-Identification Pipelines for Research Data

HIPAA’s Safe Harbor method provides a path for using PHI in research by removing 18 identifiers. Automated de-identification pipelines transform source data into research-ready datasets:

Extract identifiable data from Ceph object storage through S3 APIs.

Transform by removing direct identifiers (names, addresses, date elements more specific than the year, etc.) and suppressing geographic information smaller than state level.

Load de-identified datasets into separate S3 buckets with different access controls, making them available to research personnel.

These pipelines run as scheduled jobs on OpenStack compute instances, processing new data automatically and maintaining clear separation between identified and de-identified datasets.
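The transform step might look something like the sketch below. It is deliberately incomplete: it handles only a handful of fields with hypothetical names, and a real Safe Harbor pipeline must address all 18 identifier categories and be reviewed by your privacy officer. The sketch uses an allow-list, so any field it does not recognize is dropped rather than passed through.

```python
from datetime import date

# Fields considered safe to keep unchanged (hypothetical schema).
PASS_THROUGH_FIELDS = {"state", "diagnosis_code"}

# Date fields are reduced to the year only and renamed accordingly.
DATE_FIELDS_TO_YEAR = {
    "birth_date": "birth_year",
    "admission_date": "admission_year",
}


def deidentify(record: dict) -> dict:
    """Return a copy of record with only allow-listed, generalized fields."""
    clean = {}
    for field, value in record.items():
        if field in DATE_FIELDS_TO_YEAR:
            clean[DATE_FIELDS_TO_YEAR[field]] = date.fromisoformat(value).year
        elif field in PASS_THROUGH_FIELDS:
            clean[field] = value
        # Everything else (name, address, city, ZIP, MRN, ...) is dropped.
    return clean


source = {
    "name": "Jane Example",
    "street_address": "1 Main St",
    "city": "Raleigh",
    "state": "NC",
    "zip_code": "27601",
    "mrn": "000123",
    "birth_date": "1975-03-14",
    "admission_date": "2024-01-15",
    "diagnosis_code": "E11.9",
}
print(deidentify(source))
```

An allow-list fails safe: when a source system adds a new field, it stays out of the research dataset until someone explicitly decides it can be released.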

Confidential Computing for AI/ML on Sensitive Data

Intel Trust Domain Extensions (TDX) available on OpenMetal’s XL and XXL V4 servers create hardware-isolated enclaves for processing PHI during AI model training. The CPU encrypts memory contents, protecting sensitive patient data from unauthorized access even by hypervisor administrators or infrastructure operators.

This capability addresses a fundamental challenge in healthcare AI: how to train models on real patient data without exposing that data to model developers. TDX enables:

- Federated learning where model training happens within encrypted enclaves, with only model updates (not raw patient data) leaving the secure environment

- Secure multi-party computation allowing multiple organizations to collaboratively train models on combined datasets without sharing raw data

- Compliance-friendly AI development where data science teams can develop models without direct access to identifiable PHI

For healthcare organizations developing AI-driven clinical decision support, diagnostic imaging analysis, or predictive models for patient outcomes, confidential computing provides a path to leverage sensitive data while maintaining HIPAA compliance.

Monitoring, Auditing, and Compliance Reporting

Compliance reporting frameworks must align with established federal standards for protecting patient information, including implementing essential security measures, maintaining detailed audit trails of all system access attempts, regularly reviewing logs for potential security incidents, establishing and maintaining security incident procedures, implementing automated systems for tracking and documenting security incidents, and regularly testing incident response procedures.

Real-Time Monitoring Infrastructure

Comprehensive monitoring tracks the health and performance of your data lake infrastructure while capturing the security events required for HIPAA compliance.

Infrastructure monitoring uses tools like Prometheus to collect metrics from all system components:

- Ceph cluster health: storage utilization, OSD status, I/O performance, network throughput

- OpenStack services: API response times, resource allocation, quota usage

- Compute resources: CPU, memory, disk I/O on analytics workloads

- Network metrics: bandwidth utilization, packet loss, latency between components

Grafana dashboards provide real-time visibility into infrastructure health, alerting operations teams when metrics exceed thresholds that might indicate problems.

Security monitoring captures authentication attempts, authorization decisions, and data access events:

- User authentication: login attempts, session creation, credential changes

- API access: calls to OpenStack services, S3 operations against Ceph storage

- Network activity: connections between components, blocked traffic from security groups

- System changes: configuration modifications, user permission updates

These security events flow to your SIEM system where correlation rules identify patterns that might indicate security incidents or policy violations.

Audit Trail Requirements

HIPAA’s audit trail requirements extend beyond simple access logging. You need comprehensive records demonstrating:

- Who accessed PHI—user identity verified through authentication

- What data they accessed—specific objects, patients, or datasets

- When access occurred—timestamps for all operations

- Where access originated—source IP addresses, client applications

- Why access was appropriate—context like clinical relationship or research authorization

- How data was used—read operations, modifications, copies made
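These six questions map naturally onto a structured audit record. The sketch below shows one possible shape, serialized as a JSON line for shipping to a log collector; the field values and helper names are hypothetical, and your SIEM may expect a different schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    who: str     # authenticated user identity
    what: str    # object, patient, or dataset accessed
    when: str    # ISO 8601 timestamp in UTC
    where: str   # source IP address or client application
    why: str     # clinical relationship or research authorization
    how: str     # operation performed: read, write, copy, ...


def make_event(who, what, where, why, how, now=None) -> AuditEvent:
    """Create an audit event, stamping it with the current UTC time."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return AuditEvent(who, what, timestamp, where, why, how)


def to_log_line(event: AuditEvent) -> str:
    """Serialize an event as a single JSON line with stable key order."""
    return json.dumps(asdict(event), sort_keys=True)


event = make_event(
    who="dr.smith",
    what="studies/2024/CT/1.2.840.99999.1.dcm",   # hypothetical object key
    where="10.10.20.15",
    why="treating-physician",
    how="read",
    now=datetime(2024, 1, 15, 8, 30, tzinfo=timezone.utc),
)
print(to_log_line(event))
```

The "why" field is usually the hardest to populate from infrastructure logs alone, which is one reason application-level logging is needed alongside storage and identity logs.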

Your data lake generates audit events at multiple layers:

OpenStack Keystone logs authentication and authorization decisions. Every time a user attempts to access a resource, Keystone records the attempt, whether it succeeded, and what permissions were evaluated.

Ceph RADOS Gateway logs S3 API operations. Each GET, PUT, or DELETE operation against object storage generates an audit event including user identity, object identifier, and operation result.

Application-level logging captures business context. Your PACS system records which clinician accessed which patient’s images, providing the clinical context that technical logs alone can’t capture.

Centralizing these multi-layered logs into a unified audit repository enables comprehensive compliance reporting. When auditors request evidence that only authorized personnel accessed a specific patient’s records, you can provide complete documentation spanning authentication, authorization, and data access.

Compliance Reporting Automation

Manual compliance reporting doesn’t scale. Automated reporting tools generate the documentation auditors require:

Access reports list all personnel who accessed specific PHI during defined timeframes, filtered by patient identifiers or data categories.

Security incident summaries aggregate failed authentication attempts, authorization violations, and security policy breaches, demonstrating your security monitoring capabilities.

Configuration compliance checks verify that security controls remain properly configured—encryption enabled, security groups enforcing network isolation, audit logging active.

Risk assessment documentation tracks identified vulnerabilities, remediation actions, and ongoing security measures.

These automated reports run on regular schedules, maintaining continuous compliance evidence rather than scrambling when audits occur.
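A configuration compliance check can be as simple as comparing observed settings against a required baseline. The sketch below assumes the observed values have already been collected into a dictionary by some other process; the setting names are hypothetical and do not correspond to specific OpenStack or Ceph options.

```python
# Baseline every environment is expected to meet (hypothetical names).
REQUIRED_SETTINGS = {
    "encryption_at_rest": True,
    "audit_logging": True,
    "default_deny_security_groups": True,
}


def find_violations(observed: dict) -> list:
    """Return the names of required settings that are missing or wrong."""
    return sorted(
        name
        for name, required in REQUIRED_SETTINGS.items()
        if observed.get(name) != required
    )


production = {
    "encryption_at_rest": True,
    "audit_logging": True,
    "default_deny_security_groups": True,
}
staging = {
    "encryption_at_rest": True,
    "audit_logging": False,   # misconfigured
    # default_deny_security_groups was never reported
}

print("production:", find_violations(production))
print("staging:   ", find_violations(staging))
```

Treating a missing value as a violation is intentional: a control that cannot be verified should not be reported as compliant.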

Cost Management for Healthcare Data Lakes

Healthcare organizations often struggle with cloud storage costs that escalate unpredictably as data volumes grow. OpenMetal’s infrastructure addresses these concerns through fixed-cost pricing that eliminates storage surprises.

Fixed Monthly Pricing Model

Unlike public cloud providers that charge per-GB storage and per-API-call access fees, OpenMetal charges per physical server. You pay a fixed monthly amount for each storage server, compute node, and network resource in your infrastructure.

This pricing model provides important advantages for healthcare data lakes:

Predictable costs as imaging archives grow. Adding 500TB of medical imaging to your Ceph cluster doesn’t change your monthly bill; the infrastructure you’ve provisioned handles the additional data.

No API access charges for DICOM retrieval. PACS systems generating millions of S3 GET requests don’t incur per-request fees. Clinical users can access images freely without cost concerns.

Unlimited internal data transfer between components. Moving data from Ceph storage to Spark analytics clusters to visualization tools involves zero data transfer charges.

For healthcare SaaS providers serving multiple hospital systems, this cost structure simplifies financial planning. Revenue models can focus on the value delivered to customers rather than reconciling variable infrastructure costs each month.
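The difference between the two billing shapes can be sketched with a little arithmetic. Everything in this example is hypothetical: the function names, the parameters, and any prices you pass in are placeholders for illustration, not published rates from OpenMetal or any public cloud provider.

```python
import math


def metered_monthly_cost(stored_gb, get_requests, price_per_gb, price_per_1k_gets):
    """Usage-based model: the bill scales with stored data and request volume."""
    return stored_gb * price_per_gb + (get_requests / 1000) * price_per_1k_gets


def servers_needed(stored_gb, usable_gb_per_server):
    """Smallest number of storage servers whose usable capacity covers the data."""
    return max(1, math.ceil(stored_gb / usable_gb_per_server))


def fixed_monthly_cost(server_count, price_per_server):
    """Per-server model: the bill changes only when a server is added or removed."""
    return server_count * price_per_server
```

Under the per-server model the cost is a step function: it stays flat while new data fits within provisioned capacity and rises by a known amount when another server is added, whereas the metered cost moves with every additional gigabyte and request.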

95th Percentile Bandwidth Pricing

While internal data transfer is unmetered, external bandwidth (internet traffic and connections to external systems) uses 95th percentile pricing with generous included allowances.

This pricing model measures your bandwidth usage throughout the billing period and charges based on the 95th percentile, which effectively ignores the top 5% of usage readings. Occasional bursts for disaster recovery testing, large research dataset downloads, or traffic surges don’t inflate your bills as long as they stay within that top 5%.

The included bandwidth allowances mean typical operational traffic—daily backups, routine replication, normal user access—often falls within prepaid allocations. Only organizations with sustained high-bandwidth requirements pay for additional transfer.
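To make the 95th percentile idea concrete, here is a minimal sketch using the nearest-rank method on a list of bandwidth samples. The sampling interval, the exact rounding rule, and the `included_mbps` allowance are assumptions for illustration; providers differ on these details, so check your contract for the precise calculation.

```python
def percentile_95(samples_mbps):
    """Nearest-rank 95th percentile: the smallest sample with >= 95% of samples at or below it."""
    if not samples_mbps:
        raise ValueError("need at least one bandwidth sample")
    ordered = sorted(samples_mbps)
    n = len(ordered)
    # Integer ceiling of 0.95 * n, written without floats to avoid rounding surprises.
    rank = (95 * n + 99) // 100
    return ordered[rank - 1]


def billable_overage_mbps(samples_mbps, included_mbps):
    """Bandwidth above the included allowance, measured at the 95th percentile."""
    return max(0, percentile_95(samples_mbps) - included_mbps)
```

With 100 samples, five large spikes fall entirely in the discarded top 5% and leave the billable figure unchanged, while a sixth spike would push the 95th percentile up to the spike value.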

Total Cost of Ownership

Evaluating data lake costs requires looking beyond just storage pricing. OpenMetal’s infrastructure model impacts total cost of ownership through several factors:

No per-GB egress fees for data leaving your infrastructure. Moving datasets to cloud analytics platforms, transferring imaging to external specialists, or migrating to different systems doesn’t incur per-GB egress charges; external traffic instead falls under the 95th percentile bandwidth model described above.

Lower licensing costs for bring-your-own-license software. Rather than paying cloud provider markups, you license software at standard rates and run it on your infrastructure.

Reduced operational complexity from managing a unified Ceph storage platform instead of separate block, object, and file systems. Fewer storage systems mean less management overhead and smaller operations teams.

Predictable capacity planning where adding storage capacity involves known costs for additional servers rather than variable per-GB pricing that escalates with usage.

For organizations spending $100K-$1M+ annually on compliant infrastructure, these factors often deliver 30-50% savings compared to public cloud alternatives while providing better performance and complete control over security configurations.

Getting Started

Building a HIPAA-compliant healthcare data lake requires careful planning and systematic implementation. This section provides a practical roadmap for healthcare organizations ready to deploy production infrastructure.

Assessment and Planning Phase

Begin by documenting your current data landscape:

Data inventory: Catalog existing data sources (PACS systems, EHR databases, department systems), data types, volumes, growth rates, and retention requirements.

Compliance requirements: Document HIPAA obligations, state regulations, payer requirements, and internal policies governing PHI handling.

Access patterns: Analyze how different user groups access data—frequency, volume, latency requirements, concurrent users.

Integration points: Identify systems that will connect to the data lake, APIs and protocols required, authentication methods, network connectivity.

This assessment provides the foundation for architecture decisions. For example, heavy DICOM retrieval workloads justify NVMe storage investment, while primarily archival workloads might prioritize HDD capacity.

Architecture Design

Design your data lake architecture based on workload requirements:

Storage configuration: Determine hot/warm/cold tier sizing, NVMe vs. SSD vs. HDD ratios, replication vs. erasure coding strategies, and total capacity requirements with a 3-year growth projection.

Compute requirements: Calculate the virtual machines needed for application servers, analytics workloads, ETL processing, and monitoring infrastructure.

Network design: Plan VLAN segmentation, security group policies, bandwidth requirements, and redundancy for critical paths.

Security architecture: Define Keystone roles and projects, Barbican key management policies, encryption requirements, and audit log retention.

OpenMetal’s solutions architects can review your requirements and recommend specific infrastructure configurations optimized for your workload mix.
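The storage sizing step lends itself to a quick back-of-the-envelope calculation. The sketch below projects usable capacity with compound annual growth and converts it to raw capacity for replication and for erasure coding with k data chunks and m coding chunks. The function names and the 80% maximum fill target are assumptions chosen for illustration, not recommendations from this article.

```python
def projected_usable_tb(current_tb, annual_growth_rate, years=3):
    """Usable capacity needed after compounding growth, e.g. 0.25 for 25% per year."""
    return current_tb * (1 + annual_growth_rate) ** years


def raw_tb_replicated(usable_tb, replicas=3):
    """Replication stores every object `replicas` times."""
    return usable_tb * replicas


def raw_tb_erasure_coded(usable_tb, k, m):
    """Erasure coding stores k data chunks plus m coding chunks: overhead (k + m) / k."""
    return usable_tb * (k + m) / k


def with_headroom(raw_tb, max_fill=0.8):
    """Raw capacity to provision so the cluster stays below `max_fill` utilization."""
    return raw_tb / max_fill
```

For the same usable capacity, a 4+2 erasure-coded pool needs half the raw storage of a 3x replicated pool, which is why the replication vs. erasure coding decision has such a large effect on archival tier sizing.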

Proof of Concept Development

Testing your architecture with real workloads before full deployment identifies issues and validates performance expectations:

Deploy core infrastructure: Stand up a small-scale Ceph cluster, configure OpenStack services, implement security controls, and establish monitoring.

Integrate key systems: Connect your PACS to Ceph object storage, migrate sample imaging data, test retrieval performance, and validate access controls.

Run analytics workloads: Deploy your analytics platform, process test datasets, measure query performance, and verify the accuracy of results.

Validate compliance controls: Test audit logging, verify encryption, validate access restrictions, and simulate compliance reporting.

This proof of concept phase typically takes 4-8 weeks and provides confidence before moving production workloads.
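One simple way to test retrieval performance during the proof of concept is to time repeated fetches and summarize the latency distribution. In this sketch, `fetch` is a hypothetical callable standing in for whatever retrieves one object from your storage (for example, a wrapper around your S3 client), and the choice of median, 95th percentile, and maximum as summary statistics is an assumption.

```python
import statistics
import time


def time_calls(fetch, keys):
    """Call fetch(key) for each key and return the elapsed seconds per call."""
    durations = []
    for key in keys:
        start = time.perf_counter()
        fetch(key)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Summarize latencies; requires at least two measurements."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(durations, n=100, method="inclusive")
    return {
        "median": statistics.median(durations),
        "p95": cuts[94],
        "max": max(durations),
    }
```

Reporting the 95th percentile alongside the median highlights slow outliers that an average would hide, which matters when clinicians are waiting on individual image retrievals.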

Production Deployment

Production deployment follows a phased approach that minimizes risk:

Phase 1 – Infrastructure buildout: Deploy full production hardware, configure all storage tiers, implement complete security stack, and establish monitoring and alerting.

Phase 2 – Data migration: Migrate archival imaging data first (lowest risk), then historical clinical data, and finally current production data. Maintain parallel operation during the transition.

Phase 3 – Application cutover: Point each application to the new storage, verify functionality, monitor performance, and address issues before proceeding to the next application.

Phase 4 – Optimization: Tune cache configurations, adjust tiering policies, optimize query patterns, and refine security policies based on actual usage.

Phased deployment spreads risk across multiple stages while providing opportunities to validate each component before proceeding.
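During the data migration phase, one common way to validate each batch is to compare content digests between source and destination before retiring the original copy. The sketch below assumes you have already collected a mapping of object key to SHA-256 digest on each side; the function names and the choice of SHA-256 are illustrative, not something this article prescribes.

```python
import hashlib


def sha256_of(chunks):
    """Hash an object streamed as an iterable of byte chunks, without loading it whole."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def verify_migration(source_digests, dest_digests):
    """Compare {key: digest} maps and report keys that are missing or differ."""
    missing = sorted(k for k in source_digests if k not in dest_digests)
    mismatched = sorted(
        k for k in source_digests
        if k in dest_digests and source_digests[k] != dest_digests[k]
    )
    return {"missing": missing, "mismatched": mismatched}
```

A batch is safe to cut over only when both lists are empty; any key in either list should be re-copied and re-verified while the source system is still running in parallel.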

Ongoing Operations

Operating a healthcare data lake requires attention to several areas:

Capacity management: Monitor storage utilization trends, plan capacity additions before exhausting space, optimize data lifecycle policies, and retire obsolete data.

Performance optimization: Track query performance trends, adjust cache and tiering configurations, optimize data layouts for access patterns, and upgrade infrastructure as needed.

Security maintenance: Review and update access controls, rotate encryption keys on defined schedules, patch systems promptly, and conduct regular security assessments.

Compliance activities: Generate audit reports, respond to requests from compliance teams, maintain documentation, and adapt to regulatory changes.

Because the data lake consolidates block, object, and file storage onto one platform, these operations typically require less ongoing effort than managing multiple disparate storage systems, despite the platform’s more sophisticated capabilities.
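For capacity management, a simple trend line over recent utilization readings gives an early warning of when to order hardware. This sketch fits a least-squares line to daily usage and estimates the days remaining before a fill threshold is reached. The function name, the one-reading-per-day assumption, and the 80% threshold are hypothetical, and real growth is rarely perfectly linear, so treat the result as a rough planning signal.

```python
def days_until_threshold(daily_used_tb, capacity_tb, threshold=0.8):
    """Estimate days until usage reaches threshold * capacity, or None if not growing."""
    n = len(daily_used_tb)
    if n < 2:
        raise ValueError("need at least two daily readings")
    mean_x = (n - 1) / 2
    mean_y = sum(daily_used_tb) / n
    # Ordinary least-squares slope: TB of growth per day.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_used_tb))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    if slope <= 0:
        return None  # Flat or shrinking usage: no projected exhaustion.
    remaining_tb = capacity_tb * threshold - daily_used_tb[-1]
    return max(0.0, remaining_tb / slope)
```

Comparing the estimate against your hardware lead time tells you whether a capacity addition needs to be ordered now or can wait for the next planning cycle.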

Conclusion

Building a HIPAA-compliant healthcare data lake requires infrastructure that balances the competing demands of massive scale, strict security requirements, diverse data types, and cost predictability. OpenMetal’s Ceph-based infrastructure delivers this balance through unified storage architecture, comprehensive security controls, and fixed-cost pricing that eliminates the unpredictable expenses plaguing healthcare organizations.

The platform provides the foundation for modern healthcare data management—from petabyte-scale medical imaging archives to real-time analytics powering clinical decision support. Ceph’s unified storage layer handles block, object, and file workloads simultaneously, while OpenStack integration delivers the audit logging, key management, and access controls HIPAA requires.

For healthcare organizations evaluating infrastructure options, OpenMetal offers a viable alternative to public cloud providers. You receive complete control over security configurations, predictable costs that don’t escalate with data growth, and infrastructure designed to meet compliance requirements from day one. Whether you’re a hospital system consolidating imaging archives, a healthcare SaaS provider serving multiple organizations, or a digital health startup building analytics platforms, OpenMetal provides the infrastructure foundation your applications require.

Ready to explore how OpenMetal can power your healthcare data lake? Start a free trial to experience the platform firsthand, or contact our solutions team to discuss your specific requirements.
