Setting the Stage: Performance Dynamics of CPUs and GPUs in AI

Before delving into the intricacies of CPU and GPU performance in AI, it’s important to acknowledge that when it comes to raw performance metrics, GPUs often lead the pack. Their architecture is specifically tailored for the high-speed, parallel processing demands of AI tasks. However, this doesn’t always translate into a one-size-fits-all solution.

In this article, we’ll explore scenarios where CPUs might not only compete but potentially gain an upper hand, focusing on cost, efficiency, and application-specific performance.

Training vs. Inference

In the world of AI, the distinction between training models and inference is crucial. Training models is a resource-intensive process, involving learning and refining from vast datasets, where GPUs excel due to their parallel processing power.

Inference, on the other hand, is about using these trained models to make predictions or decisions. While GPUs can speed up this process, CPUs are becoming increasingly viable for inference, especially where cost and resource efficiency are paramount.

This contrast underscores the need to choose the right hardware based on the specific phase of AI model development.
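To make the distinction concrete, here is a minimal sketch in plain Python. It assumes a toy one-parameter linear model, not one of the models benchmarked below, and the names `predict` and `train` as well as the learning rate are purely illustrative. Training repeats forward passes and weight updates many times over the data, while inference is a single forward pass with the weight frozen.

```python
def predict(weight, x):
    """Inference: one forward pass, no gradients, no weight updates."""
    return weight * x


def train(samples, epochs=200, lr=0.01):
    """Training: repeated forward passes plus gradient updates (toy SGD)."""
    weight = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = predict(weight, x) - target
            # Gradient of the squared error (error**2) with respect to weight.
            weight -= lr * 2 * error * x
    return weight


# Toy data drawn from y = 3x; a real training set would be far larger.
samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

weight = train(samples)               # 600 forward passes and 600 updates
prediction = predict(weight, 4.0)     # a single forward pass
print(round(prediction, 3))
```

Even in this toy case, training does hundreds of times more work than one prediction, which is why the two phases can justify different hardware.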

Benchmarking OpenMetal Hardware

We’ll start by diving into our test results, and then we’ll explore the reasons behind the observed outcomes.

We’ve evaluated four diverse AI models to measure their inference speeds across three different processing units:

- NVIDIA’s A100 series

- AMD’s EPYC 7272

- Intel’s 4th Generation Xeon Gold 5416S with Advanced Matrix Extensions (AMX)

Our analysis covers both raw performance and cost-performance. While we expect the A100 to lead in raw performance, the more interesting question is cost efficiency: how much inference each dollar buys on each processor.

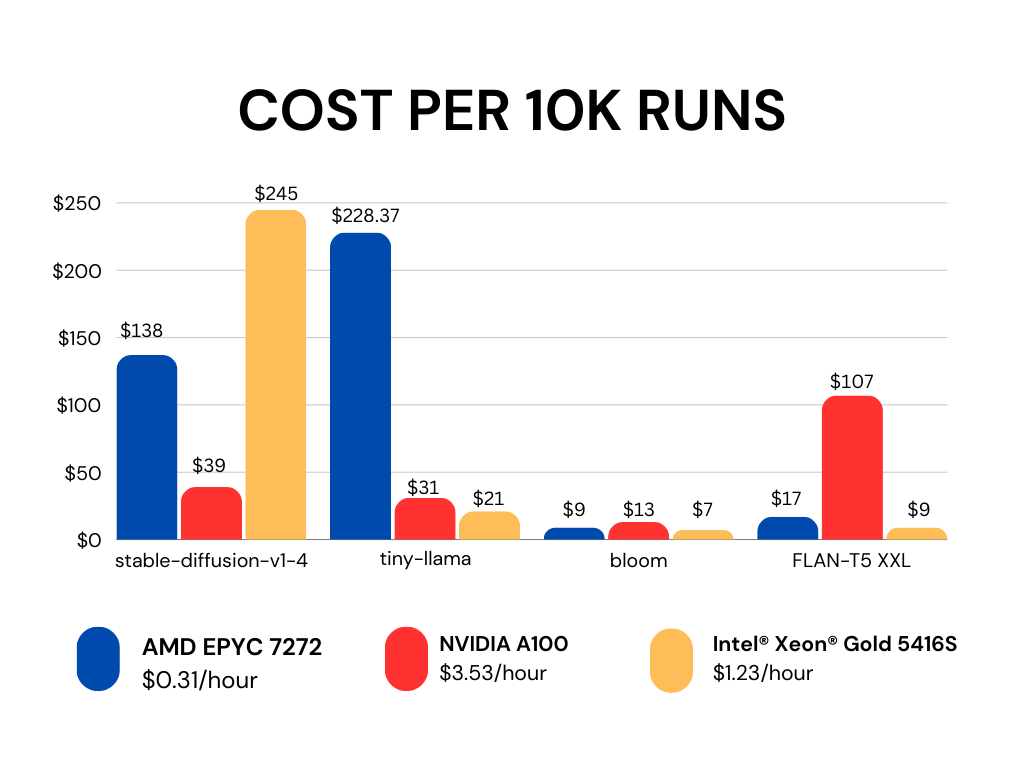

Unit Cost

The cost of units can vary significantly based on whether you’re utilizing instances, purchasing hardware, or using OpenMetal’s servers. Additionally, the overall cost escalates when you factor in the necessary supporting hardware for these processing units. For these tests, we’ll be using OpenMetal’s hourly rates.

AI Models

For our tests, we chose a range of popular models from Hugging Face, known for its machine learning (ML) and data science community and platform. Each processor was given the same inputs across all test runs to ensure a consistent and fair evaluation.

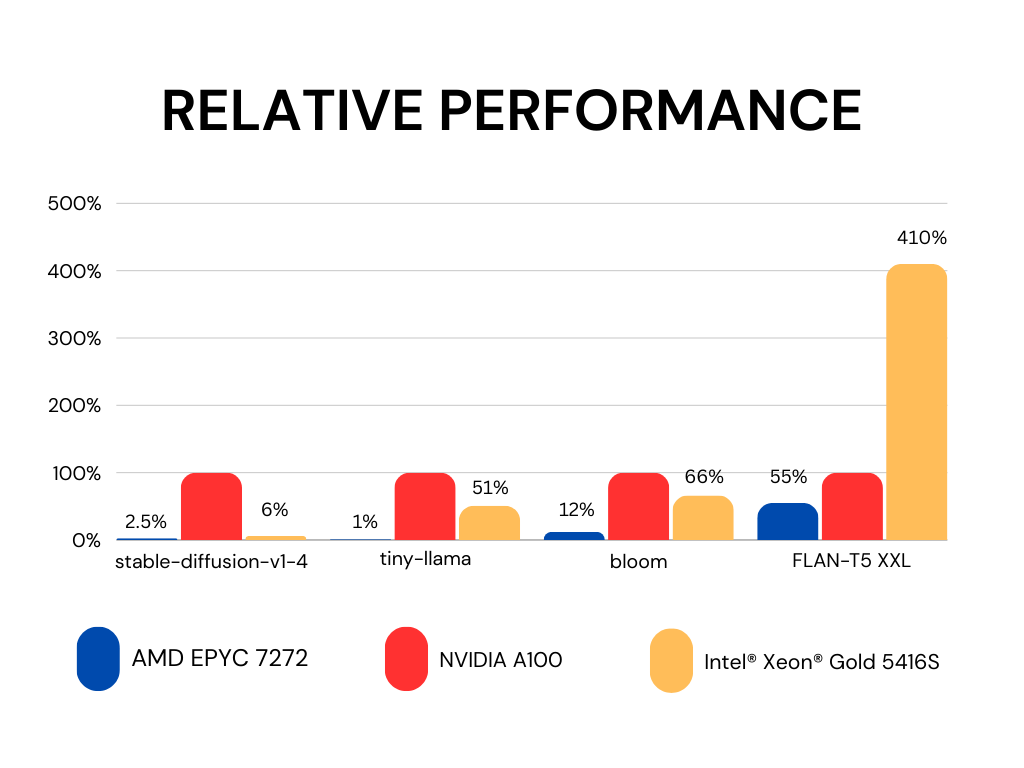

Average speed of single inference run relative to an NVIDIA A100

Using OpenMetal hourly rates

Average Execution Time (seconds)

| | stable-diffusion-v1-4 | tiny-llama | bloom | FLAN-T5 XXL |
|---|---|---|---|---|
| AMD EPYC 7272 | 160 | 265.2 | 10.9 | 19.7 |
| NVIDIA A100 | 4 | 3.2 | 1.3 | 10.9 |
| Intel® Xeon® Gold 5416S | 72 | 6.3 | 2.0 | 2.7 |

Our testing shows that, in most cases, NVIDIA’s A100 outperforms even the latest generation of CPUs. Its lead over the Intel Xeon, however, is far smaller than its lead over the older EPYC, and on FLAN-T5 XXL the Xeon was actually faster (2.7 seconds versus 10.9). Going by the table, the Intel Xeon is roughly 2x to 7x faster than the older EPYC on three of the four models and about 42x faster on tiny-llama, and it surpasses the A100 in cost vs. performance in almost all categories.
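The relationship between raw speed and cost-performance can be sketched in a few lines of Python. The execution times below are copied from the table above; the helper name `slowdown_vs` is hypothetical, and no hourly rates are assumed. The key observation is that if a CPU takes `r` times as long as the GPU per run, it is still cheaper per run whenever the GPU’s hourly rate is more than `r` times the CPU’s.

```python
# Average execution times in seconds, copied from the table above.
MODELS = ["stable-diffusion-v1-4", "tiny-llama", "bloom", "FLAN-T5 XXL"]
TIMES = {
    "AMD EPYC 7272": dict(zip(MODELS, [160, 265.2, 10.9, 19.7])),
    "NVIDIA A100": dict(zip(MODELS, [4, 3.2, 1.3, 10.9])),
    "Intel Xeon Gold 5416S": dict(zip(MODELS, [72, 6.3, 2.0, 2.7])),
}


def slowdown_vs(baseline, unit, model):
    """How many times longer `unit` takes than `baseline` on `model`."""
    return TIMES[unit][model] / TIMES[baseline][model]


for model in MODELS:
    ratio = slowdown_vs("NVIDIA A100", "Intel Xeon Gold 5416S", model)
    # Break-even: the Xeon is cheaper per run if the A100's hourly rate
    # is more than `ratio` times the Xeon's hourly rate.
    print(f"{model}: Xeon takes {ratio:.2f}x as long as the A100")
```

A ratio below 1 means the CPU is faster outright, so it wins on cost at any price at or below the GPU’s.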

Choosing the right hardware for your AI use case involves testing and optimizing your model with different hardware options. Our findings show:

- Performance Variation: Some models perform exceptionally well on CPUs, while others may face significant delays.

- Real-Time vs Background Processing: Determine if your application requires real-time responses or if tasks can be executed in the background for later review.

- Budget Considerations: For those prioritizing budget, the latest generation Intel CPUs are a solid choice.

- GPU Preference for Certain Tasks: Tasks like image and video processing might still favor GPUs.

- Evolving Technology: The landscape is dynamic, with CPUs continually improving in capabilities.
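One way to combine the real-time and budget considerations above is to pick the cheapest option that still meets a latency requirement. The following is a rough sketch, not a sizing tool: the latencies are the tiny-llama values from the table, but the hourly rates are placeholders invented for illustration (they are not OpenMetal prices), and `cheapest_within_budget` is a hypothetical helper.

```python
def cost_per_run(option):
    """Cost of one inference run on hardware billed by the hour."""
    return option["seconds"] * option["hourly_rate"] / 3600


def cheapest_within_budget(options, max_seconds):
    """Return the lowest cost-per-run option that meets the latency budget."""
    eligible = [o for o in options if o["seconds"] <= max_seconds]
    if not eligible:
        return None  # nothing is fast enough for this requirement
    return min(eligible, key=cost_per_run)


# Latencies: tiny-llama column of the table above.
# Hourly rates: PLACEHOLDER values, not real prices.
options = [
    {"name": "AMD EPYC 7272", "seconds": 265.2, "hourly_rate": 1.0},
    {"name": "NVIDIA A100", "seconds": 3.2, "hourly_rate": 4.0},
    {"name": "Intel Xeon Gold 5416S", "seconds": 6.3, "hourly_rate": 1.5},
]

realtime = cheapest_within_budget(options, max_seconds=5)
background = cheapest_within_budget(options, max_seconds=600)
print(realtime["name"], background["name"])
```

With these placeholder rates, a 5-second budget leaves only the GPU, while a background job with a generous budget goes to the newer CPU. Substituting real rates and your own measured latencies may change the outcome.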

Why GPUs Perform Better for AI Tasks

Parallel Processing Capabilities

GPUs are designed to handle many operations simultaneously. This parallelism is vital for AI tasks, which are dominated by large numbers of independent arithmetic operations. A CPU has a comparatively small number of cores, each optimized for fast sequential execution, whereas a GPU can run thousands of threads at once, drastically reducing computation time for highly parallel AI workloads.
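The reason this kind of work parallelizes so well is that each output value can be computed independently of the others. The sketch below illustrates that with a matrix-vector product in plain Python. The thread pool only demonstrates that rows can be processed in any order by separate workers; it is an assumption made for illustration and will not show GPU-like speedups, since Python threads do not run this arithmetic in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """Dot product of one matrix row with the input vector."""
    return sum(a * b for a, b in zip(row, vector))


def matvec_sequential(matrix, vector):
    """Compute each output element one after another."""
    return [dot(row, vector) for row in matrix]


def matvec_parallel(matrix, vector, workers=4):
    """Hand each row to a worker; no row depends on any other row."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order.
        return list(pool.map(lambda row: dot(row, vector), matrix))


matrix = [[1, 2], [3, 4], [5, 6]]
vector = [10, 1]

# Both strategies give identical results because the rows are independent.
assert matvec_parallel(matrix, vector) == matvec_sequential(matrix, vector)
print(matvec_sequential(matrix, vector))
```

A GPU applies the same idea at a much larger scale, assigning independent output elements to thousands of hardware threads.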

Handling Large Datasets

AI and machine learning often require the processing of vast amounts of data. GPUs excel in this area due to their high bandwidth memory and large number of cores, which allow for faster and more efficient data handling. This capability is crucial in training deep learning models, where large datasets are a norm.

Specialized for Computation-Intensive Tasks

GPUs are optimized for computationally intensive tasks. They have a large number of arithmetic logic units (ALUs), enabling them to perform more calculations per second. This makes them particularly effective for the matrix and vector operations that are common in machine learning algorithms.

Accelerating Deep Learning Frameworks

Most deep learning frameworks are optimized for GPUs. Frameworks like TensorFlow and PyTorch build on NVIDIA’s CUDA platform and its libraries, which are designed specifically to exploit GPU architecture, further enhancing GPU performance in AI tasks.
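In practice, code often needs to run on whichever hardware is available. As a small sketch, assuming PyTorch as the framework, the hypothetical helper `pick_device` below returns `"cuda"` when PyTorch reports a usable CUDA GPU and falls back to `"cpu"` otherwise, including when PyTorch is not installed at all.

```python
import importlib.util


def pick_device():
    """Return "cuda" when PyTorch can see a CUDA GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed, so there is no GPU path.
    import torch

    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(device)
```

With PyTorch, a model would then typically be moved to the chosen device with `model.to(device)`, so the same inference script can be benchmarked on both a CPU server and a GPU server.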

Use Cases: Image and Speech Recognition

In applications such as image and speech recognition, AI image generation software, and similar domains, GPUs demonstrate remarkable efficiency. Their ability to quickly process and analyze pixels in images or audio data in speech makes them indispensable for these applications.

CPU Models for AI Inference

Now that we’ve established GPUs as the dominant force in training models, it’s worth exploring CPUs that stand out as solid contenders for AI inference.

Intel Xeon Processors

Intel’s Xeon series, particularly those with Advanced Matrix Extensions (AMX), are highly recommended for AI inference tasks. They offer enhanced performance capabilities, making them suitable for a variety of AI applications.

AMD EPYC Series

AMD’s EPYC processors are known for their high core and thread counts, making them a strong contender for AI inference, especially in tasks that require significant parallel processing.

ARM-Based Processors

ARM-based CPUs, with their efficiency and low power consumption, are becoming increasingly popular for edge AI inference, where power efficiency is a critical factor.

AI Models Suited for CPU vs GPU

With a selection of CPU models in hand, it’s time to identify AI models that could help close the performance gap with GPUs. While many AI models are designed with GPU optimization in mind, the difference in performance can vary significantly depending on the model.

Certain models, particularly those not heavily reliant on parallel processing, might perform comparably on CPUs. Exploring these models will shed light on situations where CPUs can not only compete but also potentially excel, offering a more cost-effective solution for AI inference.

AI Models for CPUs

- Decision Trees and Random Forests: Well suited to CPUs because evaluating a tree is a branch-heavy, largely sequential walk from root to leaf.

- Lightweight Neural Networks: Smaller, less complex neural networks can run efficiently on CPUs.

- CPU-Optimized Models: Models using techniques like quantization or pruning are tailored for CPU efficiency.
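Quantization, mentioned in the last item above, is easy to illustrate. The following is a minimal sketch of symmetric int8 quantization in plain Python, assuming a single scale factor for the whole weight list. Production toolchains use more elaborate schemes, and the function names here are illustrative only.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.02, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Each restored weight is within half a quantization step of the original.
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, worst_error <= scale / 2 + 1e-12)
```

Storing weights as 8-bit integers instead of 32-bit floats cuts memory traffic by about 4x and allows integer matrix instructions to be used, at the cost of a small, bounded rounding error.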

AI Models for GPUs

- Deep Learning Models: GPUs excel in training and running deep neural networks due to their parallel processing capabilities.

- Large-Scale Machine Learning Tasks: Tasks involving large datasets and complex computations are better handled by GPUs.

- Image and Video Processing Models: GPUs offer superior performance in handling high-dimensional data typical in image and video processing.

When CPU Cost Savings Outweigh the Benefits of GPU Throughput

While GPUs offer high throughput, their cost can be prohibitive in certain scenarios. CPUs, with their lower cost and improved efficiency for specific tasks, can sometimes be the more prudent choice. It’s essential to weigh the cost-benefit ratio and understand the specific needs of your AI application before deciding.

Understanding CPU and GPU Cost Dynamics

- Initial Costs: GPUs generally have a higher initial cost compared to CPUs. This includes not just the purchase price but also the associated infrastructure like cooling systems and power supplies.

- Operational Costs: GPUs consume more power, leading to higher electricity bills. For long-term projects or operations, these costs can accumulate significantly, tilting the balance in favor of CPUs.
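The operational side of this comparison is simple arithmetic: energy cost is power draw multiplied by running time and electricity price. The sketch below uses placeholder wattages and a placeholder price per kWh chosen only for illustration. They are not measurements of the hardware tested in this article, so substitute figures from your own servers and utility bill.

```python
HOURS_PER_YEAR = 24 * 365


def energy_cost(watts, hours, price_per_kwh):
    """Electricity cost of running a device at a constant power draw."""
    return watts / 1000 * hours * price_per_kwh


# PLACEHOLDER inputs: average draw in watts and price per kWh.
gpu_yearly = energy_cost(watts=400, hours=HOURS_PER_YEAR, price_per_kwh=0.10)
cpu_yearly = energy_cost(watts=150, hours=HOURS_PER_YEAR, price_per_kwh=0.10)

print(f"GPU: {gpu_yearly:.2f} per year, CPU: {cpu_yearly:.2f} per year")
print(f"Difference: {gpu_yearly - cpu_yearly:.2f} per year")
```

Because the cost scales linearly with hours, a gap that looks small per hour becomes significant for hardware that runs around the clock.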

Specific Scenarios Favoring CPU Use

- Smaller Scale Projects: For startups or small to medium-sized projects where budget constraints are tighter, CPUs offer a more cost-effective solution.

- Low to Moderate Data Processing Needs: In cases where data processing requirements are not extensive, the high processing power of GPUs may not be fully utilized, making CPUs a more economical choice.

- Non-Critical Batch Processing: Applications involving batch processing where time is not a critical factor can benefit from the cost-efficiency of CPUs.

Evaluating the Trade-offs

- Performance vs. Cost Analysis: It’s crucial to conduct a detailed analysis of the performance needs against the budget. This involves considering the nature of the AI tasks, the expected volume of data processing, and the required speed of processing.

- Long-Term Implications: Consider the long-term implications of choosing CPUs over GPUs, including maintenance costs, scalability needs, and potential future upgrades.

Real-World Examples

- Case Study – Data Analysis in Small Businesses: Small businesses using AI for customer data analysis might opt for CPUs due to limited budgets and moderate data volumes.

- Scenario – Academic Research: Academic projects with limited funding but requiring AI computations can utilize CPUs effectively, especially when time is not a pressing factor.
