Accelerate Your AI Projects with Confidence

Everyone’s exploring how best to leverage AI for their business and their customers. With the new OpenMetal Private AI Labs program, you can access private GPU servers and clusters tailored to your AI projects. By joining, you’ll receive up to $50,000 in usage credits to test, build, and scale your AI workloads. Whether you’re fine-tuning LLMs, running ML pipelines, or training deep learning models, OpenMetal gives you full access to bare metal GPUs on secure, private infrastructure.

No slicing. No noisy neighbors. Just raw power and privacy to move faster.

Why Join Private AI Labs?

- $25K–$50K in Usage Credits: Offset your PoC and early scaling costs with generous monthly credits.

- Private, Bare Metal Access: No time slicing. Full control of your GPU with maximum performance and isolation.

- Security & Compliance-Ready: Keep your data safe with private cloud infrastructure designed for regulated environments.

- Infrastructure Built for AI: NVIDIA A100, H100, and multi-GPU configurations. Custom RAM and NVMe to fit your needs.

- Optional Cluster Configurations: Need 4–8 GPUs? We’ve got you covered. Configure your own private AI lab.

The Labs Program is Currently Available In:

OpenMetal US East Coast Data Center (Washington D.C. Metro)

All GPU hardware is custom-built and delivered within 8–10 weeks after order placement. Clusters may take up to 12 weeks.

More locations coming soon!

Credit Usage Structure

| Contract Term | Max Credit | Credit Usage Cap |
|---|---|---|
| 3-Year Commitment | $50,000 | Up to 20% of your monthly bill |
| 2-Year Commitment | $25,000 | Up to 30% of your monthly bill |

Credits are applied monthly and cannot exceed 30% of the total monthly invoice.
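To make the interaction between the total credit and the monthly cap concrete, here is a minimal Python sketch. It assumes the Max Credit is a single pool drawn down month by month, and that each month's credit is the smaller of the cap percentage times that month's invoice and whatever remains in the pool. The function `apply_credits` and the flat $10,000 monthly invoice are hypothetical illustrations; your contract defines the actual terms.

```python
def apply_credits(monthly_bills, max_credit, cap_percent):
    """Hypothetical sketch: return the credit applied in each month.

    Assumes the credit pool (max_credit) is drawn down month by month and
    each month's credit is capped at cap_percent of that month's invoice.
    """
    remaining = max_credit
    credits = []
    for bill in monthly_bills:
        # Cap at the percentage of this invoice, but never exceed what's left in the pool.
        credit = min(bill * cap_percent / 100, remaining)
        remaining -= credit
        credits.append(credit)
    return credits


# Hypothetical $10,000/month invoice under each contract term from the table above.
three_year = apply_credits([10_000] * 36, max_credit=50_000, cap_percent=20)
two_year = apply_credits([10_000] * 24, max_credit=25_000, cap_percent=30)

print(three_year[0], sum(three_year), sum(c > 0 for c in three_year))  # 2000.0 50000.0 25
print(two_year[0], sum(two_year), sum(c > 0 for c in two_year))        # 3000.0 25000.0 9
```

Under these assumptions, the cap spreads credits over time. At this invoice size, the 3-year pool is used up in month 25 and the 2-year pool in month 9. A smaller invoice may not use the full pool within the contract term: at 20% of a $2,995.20 monthly bill, 36 months of credits total $21,565.44.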

Who Should Apply?

- AI/ML teams looking to escape the constraints of public cloud GPUs

- Enterprises building confidential or compliance-sensitive models

- Startups running PoCs or fine-tuning large language models

- Researchers seeking consistent, high-performance GPU access

Eligibility Criteria

- Must be a company or team actively developing or running AI/ML workloads.

- Must have a use case that requires GPU acceleration (training, inference, fine-tuning, etc.).

- Must sign a 2- or 3-year contract to receive credits.

- Must be willing to provide feedback and participate in customer success stories.

- Current OpenMetal customers are eligible if they are not already using OpenMetal GPU infrastructure.

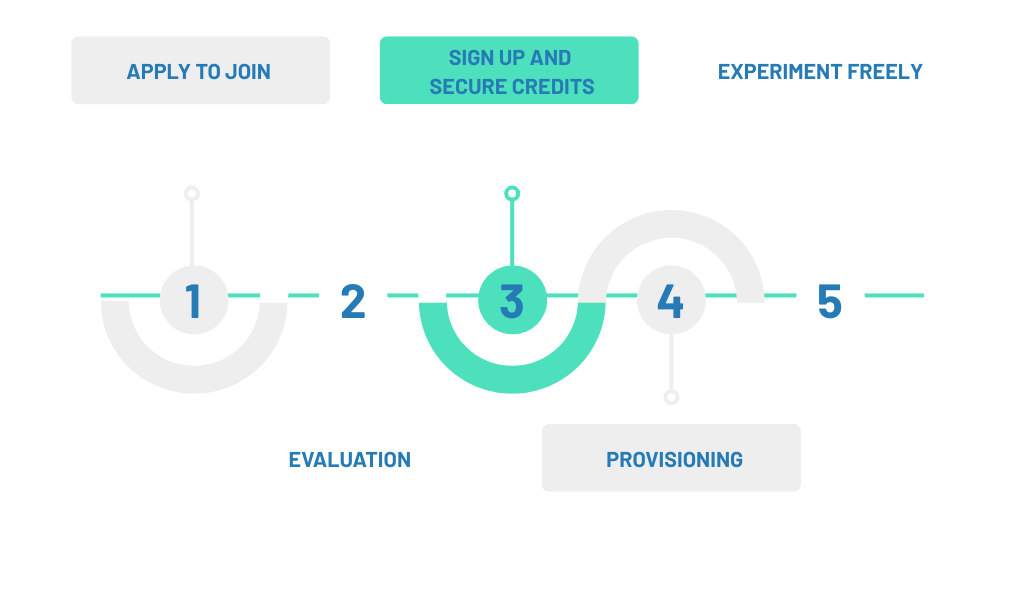

How the Program Works

OpenMetal GPU Servers and Clusters

The Private AI Labs Program was created to give AI teams easy, early access to enterprise GPU servers. These include fully customizable deployments ranging from large-scale 8x GPU setups to CPU-based inference. When applying for the program, use the hardware list below to indicate the configuration you are interested in.

X-Large

The most complete AI hardware we offer. It’s ideal for AI/ML training, high-throughput inference, and demanding compute workloads that push performance to the limit.

| GPU | GPU Memory | GPU Cores | CPU | Storage | Memory | Price |
|---|---|---|---|---|---|---|
| 8x NVIDIA H100 SXM5 | 640 GB HBM3 | CUDA: 135,168; Tensor: 4,224 | 2x Intel Xeon Gold 6530 (64C/128T total, 2.1/4.0 GHz) | Up to 16 NVMe drives; 2x 960 GB boot disks | Up to 8 TB DDR5 5600 MT/s | Contact us |

Large

Perfect for mid-sized GPU workloads with maximum flexibility. These servers support up to 2x H100 GPUs, 2 TB of memory, and 24 drives each.

| GPU | GPU Memory | GPU Cores | CPU | Storage | Memory | Price |
|---|---|---|---|---|---|---|
| 2x NVIDIA H100 PCIe | 160 GB HBM3 | CUDA: 33,792; Tensor: 1,056 | 2x Intel Xeon Gold 6530 (64C/128T total, 2.1/4.0 GHz) | 1x 6.4 TB NVMe; 2x 960 GB boot disks | 1,024 GB DDR5 4800 MT/s | $4,608.00/mo (eq. $6.31/hr) |
| 1x NVIDIA H100 PCIe | 80 GB HBM3 | CUDA: 16,896; Tensor: 528 | 2x Intel Xeon Gold 6530 (64C/128T total, 2.1/4.0 GHz) | 1x 6.4 TB NVMe; 2x 960 GB boot disks | 1,024 GB DDR5 4800 MT/s | $2,995.20/mo (eq. $4.10/hr) |
| 2x NVIDIA A100 80G | 160 GB HBM2e | CUDA: 13,824; Tensor: 864 | 2x Intel Xeon Gold 6530 (64C/128T total, 2.1/4.0 GHz) | 1x 6.4 TB NVMe; 2x 960 GB boot disks | 1,024 GB DDR5 4800 MT/s | $3,087.36/mo (eq. $4.23/hr) |
| 1x NVIDIA A100 80G | 80 GB HBM2e | CUDA: 6,912; Tensor: 432 | 2x Intel Xeon Gold 6530 (64C/128T total, 2.1/4.0 GHz) | 1x 6.4 TB NVMe; 2x 960 GB boot disks | 1,024 GB DDR5 4800 MT/s | $2,234.88/mo (eq. $3.06/hr) |

Medium

For low-cost GPU workloads. Less flexible than our Large GPU deployments, but far more powerful than CPU inferencing.

| GPU | GPU Memory | GPU Cores | CPU | Storage | Memory | Price |
|---|---|---|---|---|---|---|
| 1x NVIDIA A100 40G | 40 GB HBM2e | CUDA: 6,912; Tensor: 432 | AMD EPYC 7272 (12C/24T, 2.9 GHz) | 1 TB NVMe | 256 GB DDR4 3200 MT/s | $714.24/mo (eq. $0.98/hr) |

Pricing shown requires a 3-year agreement. Lower pricing may be available with longer commitments.

Final pricing will be confirmed by your sales representative and is subject to change.
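The hourly figures in the table appear to be the monthly price divided by an average month of 730 hours (365 × 24 / 12). This is our inference from the listed numbers, not a formula OpenMetal states. The Python sketch below checks it; `HOURS_PER_MONTH`, `MONTHLY_PRICES`, and `hourly_equivalent` are hypothetical names used only for illustration.

```python
# Assumption: hourly equivalents use an average month of 365 * 24 / 12 = 730 hours.
HOURS_PER_MONTH = 365 * 24 / 12

# Monthly list prices from the hardware table (3-year agreement).
MONTHLY_PRICES = {
    "2x NVIDIA H100 PCIe": 4608.00,
    "1x NVIDIA H100 PCIe": 2995.20,
    "2x NVIDIA A100 80G": 3087.36,
    "1x NVIDIA A100 80G": 2234.88,
    "1x NVIDIA A100 40G": 714.24,
}


def hourly_equivalent(monthly_price):
    """Convert a monthly price to an hourly rate, rounded to cents."""
    return round(monthly_price / HOURS_PER_MONTH, 2)


for name, price in MONTHLY_PRICES.items():
    print(f"{name}: ${price:,.2f}/mo -> ${hourly_equivalent(price):.2f}/hr")
```

Each computed rate matches the hourly figure listed in the table. Treat the hourly numbers as a budgeting comparison only, since billing is quoted per month.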

Still Have Questions?

Schedule a Consultation

Get a deeper assessment of your use case and discuss the unique requirements of your AI workloads before applying for the program.