Block Storage – 3 Ways, Same System

Block storage is available by default to your virtual machines (VMs) or containers from within your private Cloud Core. In addition to the default, there are several options that you can take advantage of depending on the performance, cost per GB, or data redundancy levels you need. Since this is a fully private cloud with your own control plane, you can modify OpenStack Cinder or Ceph to fit your needs.

Highly Available Network Block Storage

All private Cloud Cores come by default with Ceph configured to provide block storage that is backed by a triplicate copy of your data. Each server contributes at least 1 drive to a Ceph pool. Ceph then makes the pool available to OpenStack for your VMs or containers to mount as block storage. If a server or drive becomes unavailable, Ceph and the block storage it provides continue to work for the VMs using that storage.
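As a rough illustration of what a triplicate copy means for capacity, the sketch below estimates usable space from raw drive capacity. The function name and the example drive sizes are illustrative assumptions, and the estimate deliberately ignores Ceph overhead and the free-space headroom a real cluster needs.

```python
def usable_capacity_tb(drive_tb, drives_per_server, servers, replicas=3):
    """Estimate the usable capacity of a replicated storage pool.

    Every piece of data is stored `replicas` times, so usable space is
    roughly the raw space divided by the replica count. Metadata overhead
    and free-space headroom are ignored here (simplifying assumption).
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    raw_tb = drive_tb * drives_per_server * servers
    return raw_tb / replicas


# Hypothetical cluster: 3 servers, each contributing one 4 TB drive.
print(usable_capacity_tb(4, 1, 3))
```

In other words, with 3 copies of every block, about one third of the raw drive space is available to your VMs.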

With network block storage, live migration is supported: VMs can be moved between hardware nodes with only 1 or 2 dropped pings. Though not often used, this technology can be very valuable in maintaining a healthy cloud without disrupting the workloads running inside your VMs.

Single Drive High IOPS NVMe Block Storage

On Cloud Cores with more than 1 workload drive, or on additional servers added to your cluster, you can choose the configuration of those additional drives. A popular choice to get the most speed out of your NVMe drives is to use a single drive directly. In this scenario there is no drive-level redundancy, so redundancy, if needed, is handled at the software layer. A replicated database such as Galera, for example, runs well with high IOPS and handles its own replication to other VMs backed by single drives.

Live migration is not supported when using drives directly on a specific hardware node. Regular (non-live) migration can often still be very fast with minimal disruption to the workload in the virtual machine, but this depends on the size of the storage being moved and how busy the VM is at that time.

RAID 1 High IOPS NVMe Block Storage

This option is a variation of the single drive high IOPS NVMe block storage. Adding software RAID 1 across 2 drives brings drive-level redundancy, with a small write penalty and a potential increase in read speeds.
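The trade-offs between the three options can be summarized in a small lookup, sketched below. The option names and the `candidates` helper are hypothetical, and marking RAID 1 as lacking live migration is an assumption based on it being a variation of the single-drive, node-local option.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageOption:
    name: str
    drive_redundancy: bool  # survives the loss of a single drive
    live_migration: bool    # VM can move between nodes while running


OPTIONS = [
    StorageOption("ceph-network", drive_redundancy=True, live_migration=True),
    StorageOption("single-nvme", drive_redundancy=False, live_migration=False),
    # Assumption: RAID 1 storage is local to one node, so live migration
    # is treated as unsupported, like the single-drive option.
    StorageOption("raid1-nvme", drive_redundancy=True, live_migration=False),
]


def candidates(need_drive_redundancy, need_live_migration):
    """Return the names of options that satisfy the stated requirements."""
    return [
        option.name
        for option in OPTIONS
        if (option.drive_redundancy or not need_drive_redundancy)
        and (option.live_migration or not need_live_migration)
    ]


# A workload that needs drive redundancy but can tolerate a non-live move.
print(candidates(need_drive_redundancy=True, need_live_migration=False))
```

A workload that replicates its own data, such as the Galera example, would pass `False` for both requirements and could then pick whichever option offers the highest IOPS.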

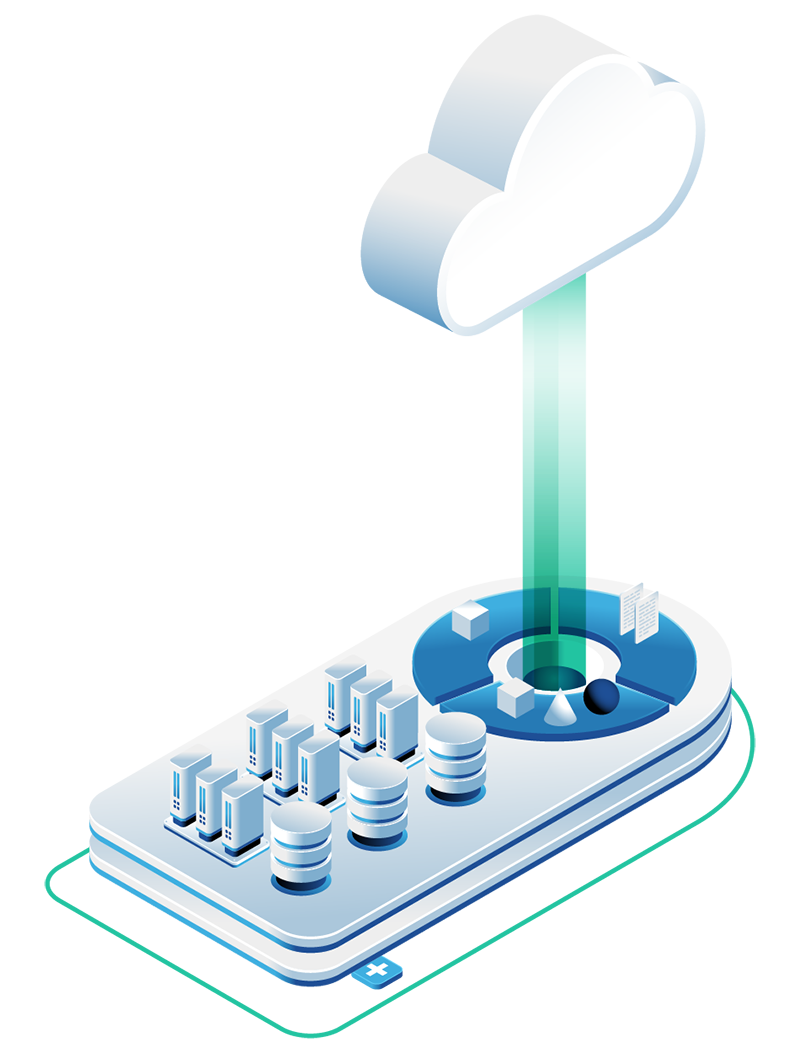

Network Block Storage from Ceph

All 3 servers provide one or more drives (OSDs) that are joined into your hyper-converged Ceph cluster. The cluster replicates your data 3 times for data security and operational availability. Your cluster is considered highly available because it can continue serving as normal if 1 cluster member or OSD fails. The performance of your block storage depends on whether you have selected NVMe or SATA SSD for your private Cloud Core. Use your management dashboard to create VMs or containers, and manage storage devices through the API.
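As one possible way to manage storage through the API, the sketch below creates a Cinder volume with the openstacksdk Python library. It assumes openstacksdk is installed and that your cloud is defined in a `clouds.yaml` file; the helper name, the cloud name, and the volume name and size are all hypothetical.

```python
def create_data_volume(conn, name, size_gb):
    """Create a block storage volume and wait until it is usable.

    `conn` is expected to be an openstacksdk connection, for example the
    result of `openstack.connect(cloud="my-private-cloud")`, where the
    cloud name is a hypothetical entry in your clouds.yaml.
    """
    volume = conn.block_storage.create_volume(name=name, size=size_gb)
    # Block until the volume is reported as "available".
    return conn.block_storage.wait_for_status(volume, status="available")


# Example usage (requires a reachable OpenStack cloud):
#   import openstack
#   conn = openstack.connect(cloud="my-private-cloud")
#   volume = create_data_volume(conn, "db-data", 100)
#   print(volume.id, volume.status)
```

Passing the connection in as a parameter keeps the helper independent of how you authenticate, and makes it straightforward to exercise with a stand-in object before pointing it at a real cloud.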

Adding Block Storage to Your Private Cloud Core

In addition to what is provided from your initial private cloud core, you have several options for growing your block storage capacity:

Grow by adding a matching converged node that will increase your block storage, VM, and container capacity.

Create a separate storage cluster that can have different hardware and Ceph settings. The storage blended offers a balance of performance for both block and object storage. The storage large is typically for low-cost object storage but may be a good fit for block storage if write speeds do not need to be high.

With an agreement, a private Cloud Core Large can have additional disks added to the hardware and joined into the Ceph cluster.
