This article discusses the sizing of an OpenMetal Cloud Core. An OpenMetal Cloud Core is a combination of OpenStack and Ceph hyper-converged onto 3 servers. See our OpenMetal private cloud pages. You can also review many of our configurations on GitHub if you are not a customer yet.

There are two major flavors of the hyper-converged OpenStack-powered OpenMetal private cloud core: the “Standard” and the “Small”. It is also possible to repurpose the “Compute Large”, but that is for very large clusters and is not always available for automated deployment. In this article, we cover how to decide between Small and Standard and how to balance the resource needs of the Control Plane against the desire to have as much Compute and Storage as possible for workloads.

OpenStack Control Plane Components

The term Hyper-Converged is used here because all components needed to run a private cloud are running on these 3 servers.

For more information, see OpenStack Networking Essentials.

Of note, the networking components are highly available. For example, creating a router actually creates two routers, one of which acts as a hot backup in case a hardware node fails. This means sufficient resources must be set aside for both the main router and the backup.

Routers

Switches

Firewalls

Cloud Management

Image Repository

API Servers

Access Management

User Clients, GUI and CLI

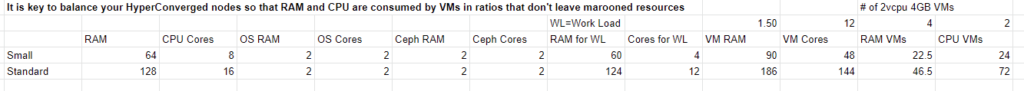

For the OpenStack control plane and Operating System, when using either the Small or Standard servers in a 3 server hyper-converged OpenStack-powered OpenMetal private cloud, we recommend reserving 2GB of RAM and 2 CPU cores. Note that a hyper-thread is counted as a “core”, which is the standard convention in the public and private cloud market.
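Because every hyper-thread counts as a “core”, the number of cores a server offers is sockets × physical cores per socket × threads per core. The short Python sketch below applies that convention and subtracts the 2-core/2GB control plane and OS reserve. The `Server` class and the example hardware values are hypothetical illustrations, not official OpenMetal specifications.

```python
from dataclasses import dataclass

# Per-server reserve for the OpenStack control plane and the operating system,
# as recommended above for both Small and Standard servers.
CONTROL_PLANE_CORES = 2
CONTROL_PLANE_RAM_GB = 2


@dataclass(frozen=True)
class Server:
    """Hypothetical hardware description; substitute your own server's values."""
    sockets: int
    cores_per_socket: int
    threads_per_core: int  # 2 when hyper-threading is enabled, else 1
    ram_gb: int


def logical_cores(server: Server) -> int:
    """Count "cores" the cloud-market way: every hyper-thread counts as one."""
    return server.sockets * server.cores_per_socket * server.threads_per_core


def after_control_plane(server: Server) -> tuple[int, int]:
    """Return (cores, RAM in GB) left after the control plane and OS reserve."""
    return (
        logical_cores(server) - CONTROL_PLANE_CORES,
        server.ram_gb - CONTROL_PLANE_RAM_GB,
    )


# Illustrative values only, not an official server specification.
example = Server(sockets=1, cores_per_socket=4, threads_per_core=2, ram_gb=64)
print(logical_cores(example))        # 8
print(after_control_plane(example))  # (6, 62)
```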

Hyper-Converged OpenStack and Ceph

In addition to the Control Plane items above, Compute and Storage are added. This gives the final system its “Hyper-Converged” moniker.

Ceph is running on all of the hyper-converged private cloud cluster members. This means the Ceph control services, such as the monitors, managers, and metadata services, run alongside the actual storage, which Ceph provides through daemons called OSDs (Object Storage Daemons).

Of note, in the case of Block, Object, and File Storage, Ceph has HA options. The default option in Ceph is a replica count of 3, so data exists on 3 hardware nodes at any given time. See What is Ceph and Ceph Storage for more background.

Compute

HA Network Block Storage

Local Block Storage

Object Storage

Object Gateways

A replica of 2 is also popular and considered safe for most applications due to the high MTBF of the industry-leading NVMe drives in your private cloud.
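The replica count directly sets how much of the raw NVMe capacity is usable: with a replica of 3 every byte is stored three times, and with a replica of 2 it is stored twice. The Python sketch below shows that arithmetic under simplifying assumptions: one storage drive per node, each replica on a different hardware node, and no allowance for filesystem overhead or Ceph's near-full safety margins. The 4 TB drive size is a hypothetical example.

```python
def usable_capacity_tb(drive_tb: float, nodes: int, replicas: int) -> float:
    """Raw cluster capacity divided by the replica count.

    Assumes one storage drive per node and that each replica lands on a
    different hardware node, so the replica count cannot exceed the node count.
    Ignores filesystem overhead and Ceph's near-full safety margins.
    """
    if not 1 <= replicas <= nodes:
        raise ValueError("replica count must be between 1 and the number of nodes")
    return drive_tb * nodes / replicas


# Hypothetical 3-node core with one 4 TB drive per node.
print(usable_capacity_tb(4.0, nodes=3, replicas=3))  # 4.0
print(usable_capacity_tb(4.0, nodes=3, replicas=2))  # 6.0
```

Moving from a replica of 3 to 2 on the same three nodes raises usable capacity by half, which is the trade-off against keeping a third copy.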

For the Ceph Control Plane and OSDs, when using either the Small or Standard servers in a 3 server hyper-converged OpenMetal private cloud, we recommend reserving 1GB of RAM and 1 CPU core for the Ceph Control Plane Services and an additional 1GB of RAM and 1 CPU core for each OSD. In this case, that totals 2GB of RAM and 2 CPU cores per server, since each cluster member has 1 primary storage drive.
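Putting the two reserve recommendations together, each server sets aside the OpenStack control plane and OS reserve plus the Ceph services reserve plus one core and 1GB per OSD. The Python sketch below adds these up. It assumes, as an extrapolation beyond the single-drive case described here, that the per-OSD rule scales linearly if a server carries more than one OSD.

```python
# Per-server reserves recommended above, as (CPU cores, GB of RAM).
CONTROL_PLANE_AND_OS = (2, 2)
CEPH_SERVICES = (1, 1)  # monitors, managers, metadata services
PER_OSD = (1, 1)        # one OSD per primary storage drive


def ceph_reserve(osds: int) -> tuple[int, int]:
    """Cores and RAM (GB) to set aside for Ceph on one server."""
    cores = CEPH_SERVICES[0] + PER_OSD[0] * osds
    ram_gb = CEPH_SERVICES[1] + PER_OSD[1] * osds
    return cores, ram_gb


def total_reserve(osds: int) -> tuple[int, int]:
    """Control plane and OS reserve plus the Ceph reserve for one server."""
    ceph_cores, ceph_ram_gb = ceph_reserve(osds)
    return (
        CONTROL_PLANE_AND_OS[0] + ceph_cores,
        CONTROL_PLANE_AND_OS[1] + ceph_ram_gb,
    )


print(ceph_reserve(1))   # (2, 2), the single-drive case above
print(total_reserve(1))  # (4, 4)
```

With one primary drive per server, 4 cores and 4GB of RAM per server are therefore unavailable to workloads.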

Compute Available after Control Plane Reserves

Key to all public and private cloud cost and resource balancing are the known usage patterns of applications and hardware. For example, in the vast majority of use cases, applications use much, much less CPU power than the CPU can actually deliver. This varies, but 1/16 is a commonly quoted ratio. This means that if just a single OS and application were using a CPU, it would, on average, leave 15/16 of the CPU sitting idle.

In the public and private cloud world, the standard way to avoid wasting the underlying hardware is to introduce an overcommit ratio that allows 12 to 20 VMs to share the same “1 unit” of physical CPU resources. Your cloud is preconfigured at 12 but can be adjusted to 16. We do not recommend going higher.

VMs actually use much more of the RAM allocated to them, so instead of a 12X multiplier, RAM has only a 1.5X overcommit ratio.

With those in mind, here is the calculation of the remaining resources that can be allocated to workloads. We have used a standard “2 vCPU/4GB RAM” VM for illustration.

This leaves you with up to 22 VMs per Small or 46 VMs per Standard at 100% usage. Running at full usage is not recommended, as it leads to unpredictable performance. At 85% usage across all three servers, and with the caveat that all workloads vary, you can expect 49 VMs on Smalls and 108 VMs on Standards. See our Private Cloud Pricing Calculator for more examples.
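The per-server VM count is set by whichever resource runs out first once the overcommit ratios are applied to what remains after reserves. The Python sketch below shows that calculation for the “2 vCPU/4GB RAM” example VM, with a utilization factor for keeping headroom. It assumes the CPU ratio applies per virtual CPU, as stock OpenStack Nova's `cpu_allocation_ratio` does (its RAM counterpart is `ram_allocation_ratio`); the function name and the 10-core/100GB server are hypothetical illustrations, not the exact method behind the figures above.

```python
import math


def vms_per_server(
    free_cores: int,
    free_ram_gb: float,
    cpu_ratio: float = 12.0,   # preconfigured default; 16 is the suggested maximum
    ram_ratio: float = 1.5,
    vm_vcpus: int = 2,         # the "2 vCPU/4GB RAM" example VM
    vm_ram_gb: float = 4.0,
    utilization: float = 1.0,  # e.g. 0.85 to keep headroom
) -> int:
    """How many identical VMs fit on one server after reserves are removed.

    The answer is whichever resource runs out first: overcommitted vCPUs
    or overcommitted RAM.
    """
    if cpu_ratio > 16:
        raise ValueError("CPU overcommit above 16 is not recommended")
    vcpu_pool = free_cores * cpu_ratio * utilization
    ram_pool_gb = free_ram_gb * ram_ratio * utilization
    return math.floor(min(vcpu_pool / vm_vcpus, ram_pool_gb / vm_ram_gb))


# Hypothetical server with 10 cores and 100 GB RAM left after reserves.
print(vms_per_server(10, 100))                    # 37 (RAM is the limit)
print(vms_per_server(10, 100, utilization=0.85))  # 31
```

Because RAM is overcommitted only 1.5X while CPU is overcommitted 12X, RAM is usually the binding limit for this VM shape.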

Size by Estimated Future Network Needs

Since all hyper-converged systems generally handle Compute and Storage the same way, the choice actually comes down to the limitations the Control Plane may have. The Small and the Standard are both well balanced when there are 3 to 6 or so identical servers in the cluster.

As the cluster grows from around 6 up to roughly 40 servers, network demands increase, and this is where the Standards become the building block of choice. The larger CPUs and the faster NVMe drives are just one factor. The key difference is that the Smalls have 2 Gbit/s of physical network throughput, while the Standards have 20 Gbit/s.

For that reason, if you can start with Standards, we typically recommend them as the best choice for easy scaling. Control Plane servers can be swapped out, but that typically requires significant OpenStack and Ceph experience. If that is outside your experience, it is likely to incur a professional services cost.

As the number of hardware nodes grows past 40 or so, additional Control Plane nodes may be needed so that networks and routers can be selectively placed to reduce network concentration. Adding Control Plane nodes is less difficult than changing the overall “Core” cluster but may require assistance.
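The sizing guidance in this section can be summarized as a rough rule keyed on the cluster size you expect to reach. The Python sketch below encodes it. The function name is hypothetical, and the 6 and 40 boundaries are the approximate (“or so”) figures from this article rather than hard limits.

```python
# Physical network throughput per server, in Gbit/s, from the comparison above.
NETWORK_GBITS = {"Small": 2, "Standard": 20}


def recommend_core(expected_nodes: int) -> str:
    """Rough Cloud Core choice for the cluster size you expect to reach.

    The 6 and 40 boundaries are approximate guidance, not hard limits.
    """
    if expected_nodes < 3:
        raise ValueError("a Cloud Core starts with 3 servers")
    if expected_nodes <= 6:
        return "Small or Standard"
    if expected_nodes <= 40:
        return "Standard"
    return "Standard, plus additional Control Plane nodes"


print(recommend_core(5))   # Small or Standard
print(recommend_core(20))  # Standard
print(recommend_core(60))  # Standard, plus additional Control Plane nodes
print(NETWORK_GBITS["Standard"] // NETWORK_GBITS["Small"])  # 10
```

Since swapping Control Plane servers later is the hard part, it is the expected future size, not today's size, that should drive this choice.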

Migrating Workloads as Your Private Cloud Grows

A key practice with hyper-converged clouds is to scale your cloud up and down as your “workloads” increase or decrease. In a hyper-converged system, you choose how much Compute and Storage runs on the “Core”.

When your workloads grow, spin up additional “Converged” Compute and Storage. The “Converged” unit does not contain the Control Plane and those hardware nodes are exclusively for workloads. As your overall cluster grows, the additional workloads will demand more of your Control Plane.

Because the cluster members in your private cloud have matching CPUs and Ceph is used for the block storage, you can “live migrate” VMs from node to node. This means no discernible downtime for applications, usually just a few dropped ping packets. So if you see resource contention on the Control Plane servers, just migrate a few VMs off to a Converged server. For more background, see Converged vs Hyper-Converged.

Summary and Next Steps

I hope that helps. If you are still researching, this article is part of our series on How to create a private cloud. If you have questions about balancing Control Plane needs with Compute and Storage in a hyper-converged private cloud, please ask them in the comments below or find me on LinkedIn. You might also be interested in a cost analysis – see Cost Tipping Points for Private vs Public Cloud.

If you are wondering why this is written from an OpenStack perspective – check out Why is OpenStack Important for Small Business.