Clouds can expand horizontally and vertically, by hardware or service type, and across the globe. Work with our team to use this technology to open doors that traditional public clouds have tried to slam shut on you.

Understanding Cloud Architecture Options

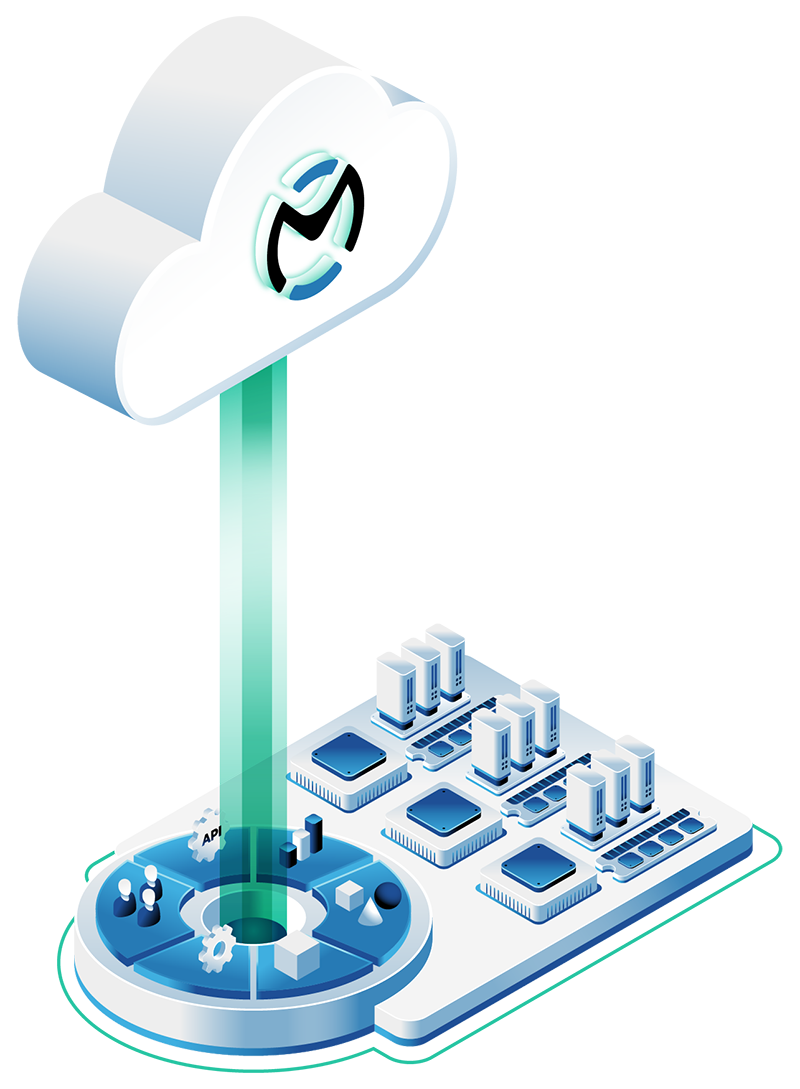

OpenMetal allows easy scaling so your choices are not permanent, and our team will assist you in these choices. The major building blocks of your OpenMetal cloud:

- Physical hardware automation and networking

- Server catalog of nodes/clusters available

- Cloud functionality provided by your Cloud Core

- Matching workloads to hardware resources

- Programs and ramps

- Hardware pricing/deployment cost calculator

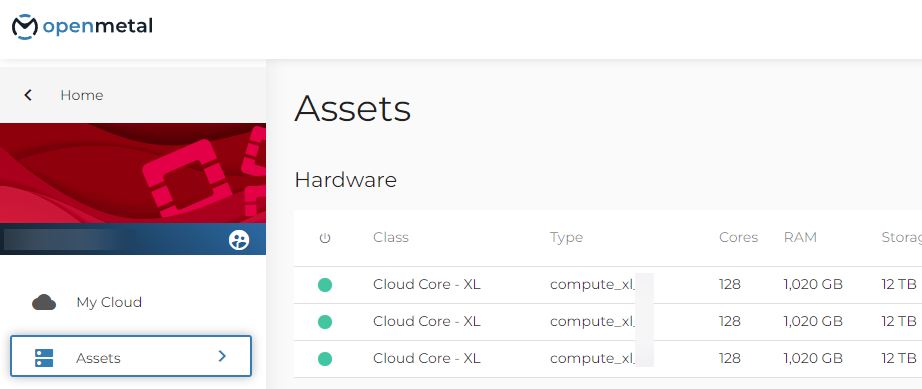

OpenMetal Central is our GUI that enables team management, spinning up and down clouds, adding hardware to specific clouds, and setting budget limits. It is also where you will find services that sit above the cloud like Datadog monitoring, attack surface reports, and access to our 24/7 support team.

We use Ansible to quickly and predictably add hardware to clusters. Once hardware is delivered into your network, you can trigger our pre-built Kolla-Ansible and Ceph-Ansible playbooks. These playbooks are designed to run directly if you have not modified your cloud configuration, or to serve as a starting point if you have customized your cluster beyond our base configuration.

Assigned VLANs. All of your physical servers live within a set of VLANs that are allocated to each cloud. Those VLANs are private to your cloud but can be shared across clouds. They have been configured to allow your cloud to run VXLAN within those VLANs. This allows your cloud to support virtual private networks.
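Running VXLAN inside a VLAN means each tenant packet carries extra encapsulation headers, which reduces the MTU available to virtual private networks. The sketch below works through that arithmetic for VXLAN over IPv4. The underlay MTU values in the usage note are assumptions for illustration only, not OpenMetal's configured values, and `tenant_mtu` is a hypothetical helper name.

```python
# VXLAN-over-IPv4 encapsulation overhead, in bytes.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14

VXLAN_OVERHEAD = (
    OUTER_IPV4_HEADER + OUTER_UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER
)  # 50 bytes in total


def tenant_mtu(underlay_mtu: int) -> int:
    """Largest MTU a virtual network can use on top of a given underlay MTU."""
    mtu = underlay_mtu - VXLAN_OVERHEAD
    if mtu <= 0:
        raise ValueError("underlay MTU is too small to carry VXLAN traffic")
    return mtu
```

For example, `tenant_mtu(1500)` gives 1450 and `tenant_mtu(9000)` gives 8950, so a larger underlay MTU on the assigned VLANs leaves more room for tenant traffic.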

IPv4 and SWIP. The hardware nodes themselves have IPv4 addresses for ease of updating and servicing. For IP addresses that will be used for VMs or other services, you can lease IP space from OpenMetal or third-party partners, or you can bring your own IP space. You may also be interested in our egress pricing.

Capacity and connectivity. OpenMetal is organized into pods. Each pod has a minimum of 200 Gbps of connectivity. All V2 and later servers are connected at 20 Gbps through a LAG to separate ToRs or ToCs. Pods are also protected against DDoS attacks, with support for filtering up to 20 Gbps per target by default and higher with special agreements.
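As a rough illustration of these figures, the sketch below estimates how many servers sending at full LAG rate would fill a pod's minimum connectivity, and what oversubscription ratio a given server count implies. It assumes the 200 Gbps figure describes the pod's shared uplink and that the two numbers can be compared directly, which is a simplification. The function names are hypothetical.

```python
import math

POD_MIN_GBPS = 200    # minimum pod connectivity stated in the text
SERVER_LAG_GBPS = 20  # per-server LAG speed for V2 and later servers


def servers_to_saturate(pod_gbps: float, server_gbps: float) -> int:
    """Number of servers at full line rate needed to fill the pod's connectivity."""
    if server_gbps <= 0:
        raise ValueError("server_gbps must be positive")
    return math.ceil(pod_gbps / server_gbps)


def oversubscription_ratio(server_count: int, server_gbps: float, pod_gbps: float) -> float:
    """Total server-facing bandwidth divided by the pod's connectivity."""
    if pod_gbps <= 0:
        raise ValueError("pod_gbps must be positive")
    return (server_count * server_gbps) / pod_gbps
```

Under these assumptions, `servers_to_saturate(POD_MIN_GBPS, SERVER_LAG_GBPS)` returns 10, and a hypothetical pod of 40 servers would be oversubscribed 4 to 1 if every server transmitted at line rate at once.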

Storage and compute nodes (or converged nodes) are one of the most popular ways to grow your clouds. Each node will increase both your compute and storage at the same time. Unlike your Cloud Core, these servers do not handle any cloud management and will be focused on workloads only. The servers are identically matched so key features, like live migration of VMs, continue to be available between these servers and your Cloud Core.

Compute nodes are standalone nodes that do not need to be matched with your Cloud Core or your converged nodes. The drives on your compute nodes are not typically joined with your network storage but are instead set up as single drives or within a local RAID. This is popular for applications that benefit from direct access to extremely high IOPS NVMe drives. Of note, VMs on compute nodes can continue using the network storage provided by your cloud.

Storage clusters are standalone clusters of 3 or more servers that provide network storage only. VMs on your cloud can use these resources for block storage, object storage, and file systems. The most common use is for large-scale object storage. With a high ratio of NVMe cache disks, you can expect high performance at the low cost of spinning media. Details and pricing. This is an advanced option our team will assist you with adding.

Converged clusters are standalone clusters of 3 or more servers that provide compute and network storage. The most common use is for workloads that have specific needs different from what your cloud has in it now but that also follow a predictable compute-to-storage ratio. Large clouds often have these since they are more efficient than standalone compute when deployed at large scale. This is an advanced option our team will assist you with adding.

Bare metal servers are standalone nodes that can either be joined with your cloud to share VLANs or live in their own separate network. Access raw power as you need it for your workloads or your customers. Any server available below can be used as a bare metal server. Each deployment of bare metal comes with several private VLANs and IPMI access to allow advanced clustering, including Hadoop, Ceph, ClickHouse, CloudStack, etc.

Cloud Cores provide cloud management and private network services for themselves and for any expansion nodes within the same VLANs. Further, one Cloud Core can control other Cloud Cores and the expansion servers underneath those other Cloud Cores.

Optionally, bare metal can also be configured to allow the Cloud Core to manage iptables-based firewalls on the bare metal servers.

The Cloud Cores are the building blocks for hosted private clouds and are hyper-converged (meaning they handle cloud management and supply compute and storage). As your overall cloud grows, more resources will be used for cloud management. This will remain a small percentage of the overall cloud resources, but it may require limiting the usage of the compute and storage supplied by the Cloud Core.

For full details visit Cloud Core features.

Hardware Resource Ratios

When growing your cloud with expansion nodes or clusters you have access to several different hardware configurations. These servers have certain ratios of CPU to RAM to disk space and disk I/O.

For clouds, it pays to understand what your workloads need. Are they RAM heavy or disk I/O heavy? Do they lean on the CPU for math-intensive processing? Knowing this allows you to choose hardware that has more of what you need and less of what you don’t, which yields the best economics for you.
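One way to reason about these ratios is to express each server as RAM per core and disk per core, then pick the configuration closest to what a workload needs. The following is a minimal sketch of that idea. The catalog entries and their numbers are made up for illustration and are not OpenMetal server specifications, and `ServerSpec` and `best_fit` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    name: str
    cores: int
    ram_gb: float
    disk_tb: float

    @property
    def ram_per_core(self) -> float:
        return self.ram_gb / self.cores

    @property
    def disk_per_core(self) -> float:
        return self.disk_tb / self.cores


def best_fit(servers, want_ram_per_core: float, want_disk_per_core: float) -> ServerSpec:
    """Return the server whose ratios have the smallest combined relative error."""
    def distance(server: ServerSpec) -> float:
        ram_err = abs(server.ram_per_core - want_ram_per_core) / want_ram_per_core
        disk_err = abs(server.disk_per_core - want_disk_per_core) / want_disk_per_core
        return ram_err + disk_err

    return min(servers, key=distance)


# Hypothetical catalog: same CPU and RAM, different amounts of disk.
CATALOG = [
    ServerSpec("balanced-example", cores=32, ram_gb=256, disk_tb=8.0),
    ServerSpec("storage-heavy-example", cores=32, ram_gb=256, disk_tb=32.0),
    ServerSpec("compute-example", cores=32, ram_gb=256, disk_tb=2.0),
]
```

With this catalog, a workload that wants about 8 GB of RAM and 1 TB of disk per core maps to `storage-heavy-example`, while one that wants 0.25 TB of disk per core maps to `balanced-example`. A real comparison would also weigh disk I/O and price, which this sketch leaves out.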

To help illustrate this we will contrast one of our most popular servers, the XL V2, with its expanded disk space sibling, the XL6 V2, and its XL V2 for compute sibling.

In addition, servers with multiple drives can use those drives for direct NVMe storage or as part of a Ceph network storage cluster. For example, on the XL V2 with 4 drives, you can use 2 drives per server as part of the Ceph network storage and combine the other 2 as local NVMe under RAID 1 for on-node redundancy. Your cloud then has access to highly available Ceph block storage and to ultra-high IOPS local NVMe with node-level redundancy.
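The capacity effect of such a split can be estimated with simple arithmetic. The sketch below assumes a replicated Ceph pool with 3 copies (Ceph's default pool size, though your cloud may be configured differently), a 2-drive RAID 1 mirror per node, and a drive size that is purely illustrative. The function name and the example numbers are hypothetical.

```python
def usable_storage_tb(
    nodes: int,
    drive_tb: float,
    ceph_drives_per_node: int = 2,
    ceph_replicas: int = 3,  # assumption: replicated pool with 3 copies
) -> dict:
    """Estimate usable capacity when each node splits its drives between
    Ceph and a local 2-drive RAID 1 mirror."""
    if nodes < 1 or ceph_replicas < 1:
        raise ValueError("nodes and ceph_replicas must be at least 1")

    ceph_raw = nodes * ceph_drives_per_node * drive_tb
    return {
        # Every object is stored ceph_replicas times across the cluster.
        "ceph_usable_tb": ceph_raw / ceph_replicas,
        # A 2-drive RAID 1 mirror exposes the capacity of a single drive.
        "local_raid1_usable_tb_per_node": drive_tb,
    }
```

For a hypothetical 3-node cluster with 4 TB drives, `usable_storage_tb(3, 4.0)` returns 8.0 TB of usable Ceph capacity and 4.0 TB of mirrored local NVMe per node. Real clusters also reserve headroom for recovery and rebalancing, so treat these as upper bounds.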
