Artificial Intelligence has taken the tech world by storm, and as enterprises and developers explore its capabilities, the conversation around Private AI has never been more critical. Todd Robinson, founder of OpenMetal, recently shared his insights on this topic at SCaLE 22x / OpenInfra Days 2025, shedding light on CPU vs. GPU inference, the importance of open-source AI, and how businesses can take control of their AI infrastructure.

The OpenMetal Story: Simplifying OpenStack for Private AI

Before diving into Private AI, it’s important to understand the foundation OpenMetal is built on. Having emerged from InMotion Hosting, OpenMetal has deep experience with OpenStack and Ceph. The team realized early on that while OpenStack is a powerful open-source cloud platform, its complexity was a major barrier to adoption.

“If you’re architecting OpenStack for the first time and you’ve never been an administrator of a really good OpenStack, you’re setting yourself up for some difficult days ahead.” – Todd Robinson

To address this, OpenMetal productized OpenStack so that businesses can spin up a highly available, hyper-converged cluster in minutes. That makes the platform a strong contender for organizations looking to deploy Private AI while maintaining full control over their data and infrastructure.

What is Private AI?

At its core, Private AI is about running AI workloads on your own infrastructure instead of relying on third-party cloud providers. This gives businesses greater control over their data, strengthens security, and lets teams tune models and hardware to their own workloads.

Full Control Over AI Workloads

Private AI enables organizations to deploy the models they prefer—whether it’s Llama, DeepSeek, Mistral, or other open-source alternatives—without dependency on third-party SaaS solutions. By eliminating reliance on external platforms like OpenAI, Google Gemini, or Anthropic Claude, businesses can shape their AI systems to meet their unique needs without restrictions imposed by proprietary vendors.

Flexible Compute Choices: CPU vs. GPU Inference

Unlike traditional cloud-based AI services that push organizations toward expensive, high-performance GPUs, Private AI allows businesses to choose the best compute option based on their specific workloads. Whether leveraging high-end GPUs for demanding applications or using CPUs for cost-effective inference, organizations can balance performance, scalability, and budget. With advancements in CPU-based AI acceleration, even general-purpose processors can now deliver respectable inference speeds for many tasks.

Data Privacy & Security

One of the biggest advantages of Private AI is safeguarding sensitive data. Organizations dealing with proprietary research, financial models, healthcare data, or confidential business intelligence can maintain strict data security by processing AI workloads internally. Unlike public AI services, which may collect and store user interactions, Private AI keeps all computation within an environment the organization controls, reducing exposure risk.

Gaining a Fundamental Understanding of AI

By running Private AI, teams gain firsthand experience in optimizing AI models and understanding key principles such as:

- Model Resources vs. Capability vs. Cost – Balancing the computational requirements of different AI models to achieve optimal performance without overspending.

- Reinforcement Learning from Human Feedback (RLHF) – Aligning a model with human preferences by training a reward model on human rankings of its outputs, then fine-tuning the model against that reward with reinforcement learning.

- Quantization (FP32 → INT8) – Improving efficiency by converting model weights from 32-bit floating point to 8-bit integers, which cuts the memory per weight by a factor of four and reduces compute, usually at a small cost in accuracy.

- Distillation (Teacher LLM → Student LLM) – Reducing model size while maintaining effectiveness by training a smaller, faster model (student) to learn from a larger, more complex model (teacher).

- Mixture of Experts (MoE) – Improving efficiency by having a learned router send each token to a small subset of specialized sub-networks (experts), so only a fraction of the model’s parameters does work for any given token.
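To make the quantization idea concrete, here is a minimal Python sketch of symmetric per-tensor INT8 quantization, one of the simplest schemes: a single scale maps the largest absolute weight to 127, and every weight is rounded to the nearest integer step. It is an illustration under that assumption, not how any particular inference runtime quantizes models; production toolchains typically use per-channel or per-group scales and calibration data. The names `quantize_int8` and `dequantize_int8` are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One shared scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each weight to the nearest step and clamp to the INT8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the INT8 codes."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)  # → [30, -127, 84, 0, 124]
print(f"scale={scale:.6f}, max error={max_error:.6f}")
```

Each stored weight drops from 4 bytes (FP32) to 1 byte (INT8) plus one shared scale, and because no weight falls outside the scaled range, the round-trip error for any weight is at most half a scale step.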
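The Mixture of Experts routing step can be illustrated with a toy top-k router. In this sketch each "token" is a single float, each expert is a plain function, and the router logits are hard-coded; all three are simplifications for illustration. In a real MoE layer the logits come from a learned gating layer and the experts are feed-forward networks. The names `moe_layer` and `softmax` are illustrative, not taken from any library.

```python
import math
from collections.abc import Callable


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    x: float,
    router_logits: list[float],
    experts: list[Callable[[float], float]],
    k: int = 2,
) -> tuple[float, list[int]]:
    """Send one token to its top-k experts and mix their outputs by gate weight."""
    # Rank experts by router score (stable sort keeps ties in index order).
    ranked = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Renormalize gate weights over the chosen experts only.
    gates = softmax([router_logits[i] for i in chosen])
    # Only the chosen experts are evaluated; the others do no work for this token.
    output = sum(g * experts[i](x) for g, i in zip(gates, chosen))
    return output, chosen


# Four toy experts; the router logits are hard-coded for illustration.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: -x, lambda x: x * x]
output, chosen = moe_layer(3.0, [0.1, 2.0, -1.0, 2.0], experts, k=2)
print(chosen, output)  # → [1, 3] 7.5
```

Only two of the four experts run for this token, which is why MoE models can hold many parameters while spending compute on only a fraction of them per token.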

“When you’re doing AI yourself, you gain a fundamental understanding that allows you to drive innovation at a faster pace.” – Todd Robinson

Beyond privacy and security, Private AI allows businesses to:

- Customize AI models to their specific needs

- Reduce reliance on third-party providers

- Optimize costs and performance

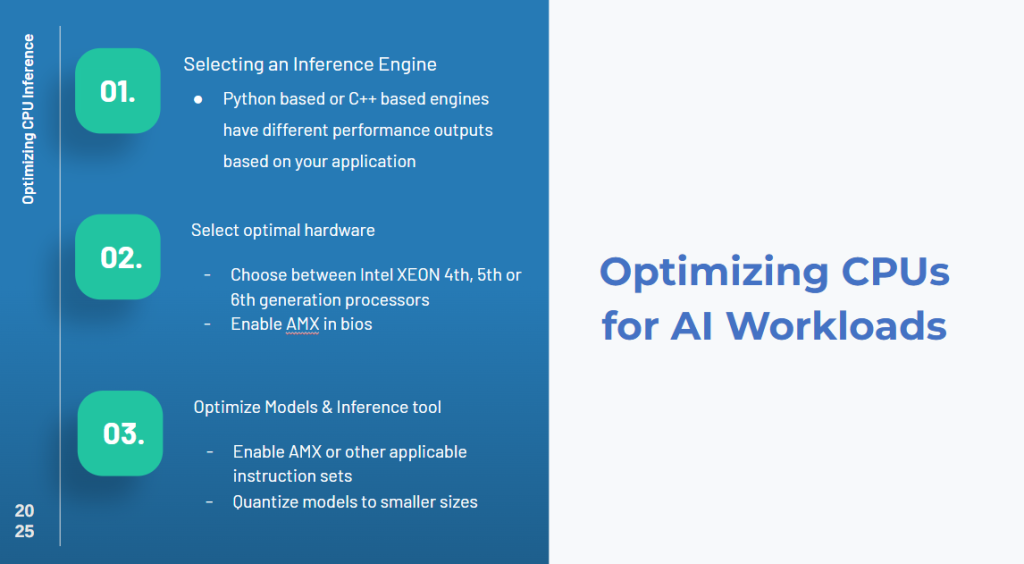

CPU vs. GPU Inference: What’s Changing?

Traditionally, GPUs have been the go-to choice for AI workloads, particularly for training large models. However, CPUs are becoming increasingly viable for AI inference due to advancements in hardware optimization.

GPUs: The Powerhouses of AI Training

GPUs excel at parallel processing, making them ideal for AI training. Todd highlighted the staggering price of high-end GPUs like NVIDIA’s H100, which can cost upwards of $25,000 per card, before even factoring in the power-hungry infrastructure needed to run them.

While GPUs remain king for AI training, they are not always necessary for inference, where the AI model is simply making predictions rather than learning from data.

CPUs: A Rising Contender for AI Inference

With recent advancements, CPUs are becoming more capable of handling AI inference workloads efficiently. Intel’s 4th and 5th Gen Xeon processors, for example, include Intel Advanced Matrix Extensions (AMX), a built-in accelerator whose tile matrix multiply unit (TMUL) speeds up the matrix math at the heart of AI inference.
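On Linux, the kernel reports AMX support as CPU flags (`amx_tile`, `amx_bf16`, `amx_int8`) in `/proc/cpuinfo`, so one quick way to see whether a server's Xeons expose the accelerator is to look for them. The sketch below assumes a Linux host with a kernel recent enough to report these flags; `amx_flags` is a hypothetical helper name. Finding the flags shows the hardware and kernel support AMX, but whether inference actually uses it depends on the software stack.

```python
from pathlib import Path

# AMX-related flag names as reported by the Linux kernel in /proc/cpuinfo.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def amx_flags(cpuinfo_text: str) -> set[str]:
    """Return the AMX-related flags found on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        # Matches "flags\t\t: ..." but not "vmx flags\t: ...".
        if line.startswith("flags"):
            flags = set(line.split(":", 1)[1].split())
            return flags & AMX_FLAGS
    return set()


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        found = amx_flags(cpuinfo.read_text())
        print("AMX flags:", sorted(found) if found else "none")
    else:
        print("/proc/cpuinfo not found (not a Linux host?)")
```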

Key advantages of using CPUs for inference:

- CPUs are widely available and often underutilized in existing data centers

- They typically draw far less power than high-end GPUs

- They can handle many AI workloads at an acceptable speed for human-interactive applications

Benchmarking CPU Performance for AI Inference

Todd’s team ran tests on various Intel CPUs, comparing their inference performance with that of GPUs. They found that modern CPUs can deliver 30 to 50 tokens per second on optimized models, which is sufficient for applications like chatbots, document summarization, and basic code assistance.

“As an example, for AI applications like where humans need to interact with an LLM, 25 tokens per second is plenty. CPUs can now deliver that performance, making them a cost-effective option.” – Todd Robinson
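The talk's benchmark harness is not described here, so the following is only a sketch of how one might measure a tokens-per-second figure for any streaming model. `generate` is a hypothetical callable standing in for whatever streaming API your inference server exposes, and `fake_generate` simulates one with a fixed delay per token.

```python
import time
from collections.abc import Callable, Iterable


def tokens_per_second(
    generate: Callable[[str], Iterable[str]], prompt: str
) -> tuple[int, float]:
    """Consume a token stream and return (token_count, tokens per second)."""
    start = time.perf_counter()
    count = 0
    for _token in generate(prompt):
        count += 1
    elapsed = time.perf_counter() - start
    return count, (count / elapsed if elapsed > 0 else float("inf"))


def fake_generate(prompt: str) -> Iterable[str]:
    """Hypothetical stand-in for a model: 20 tokens, roughly 10 ms apart."""
    for i in range(20):
        time.sleep(0.01)
        yield f"tok{i}"


count, rate = tokens_per_second(fake_generate, "Summarize this document.")
print(f"{count} tokens at {rate:.1f} tokens/s")
```

This measures end-to-end throughput, so time spent processing the prompt before the first token is included; for interactive use it can be worth timing the first token separately. As a quick check on the 25 tokens-per-second threshold: a 500-token answer at that rate streams in 500 / 25 = 20 seconds.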

Making AI More Accessible with Open Source

One of the key themes of Todd’s talk was the need to democratize AI. Right now, the AI industry is dominated by a few large players, making it difficult for smaller organizations to innovate on their own terms.

OpenMetal aims to bridge this gap by making AI infrastructure more accessible through open-source solutions like OpenStack. The goal is to empower businesses with the tools they need to deploy Private AI without being locked into expensive, proprietary cloud platforms.

The Future of Private AI

The AI landscape is evolving rapidly, and as models become more efficient, the need for massive GPU clusters may decrease. The ability to run AI inference workloads on CPUs could be a game-changer, allowing businesses to deploy AI solutions with lower costs and greater flexibility.

Key takeaways from Todd’s talk:

- Private AI puts control back into the hands of businesses

- CPUs are becoming increasingly viable for AI inference workloads

- Open-source solutions like OpenStack can help simplify AI infrastructure

- Businesses should start experimenting with AI now to gain a competitive edge

“AI doesn’t have to be the domain of billion-dollar companies. By leveraging open-source and private infrastructure, businesses of all sizes can take control of their AI future.” – Todd Robinson

For those looking to explore AI on their own terms, OpenMetal is rolling out new AI-focused products designed to simplify the deployment of AI infrastructure. Stay tuned for updates from OpenMetal as they continue to push the boundaries of Private AI.
